Deploying AI Large Models on TKE

Last updated:2025-04-30 16:02:05

Overview

This document describes how to deploy AI large models on TKE, using DeepSeek-R1 as an example. It uses a tool such as Ollama, vLLM, or SGLang to run the model and expose an API, and combines it with OpenWebUI to provide an interactive interface.

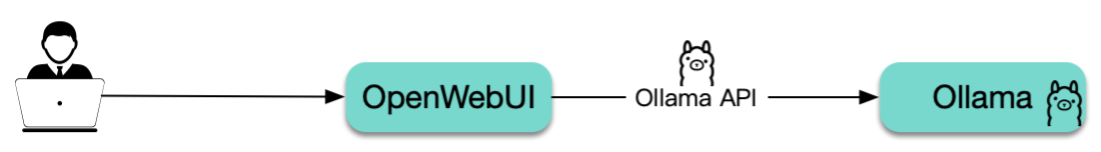

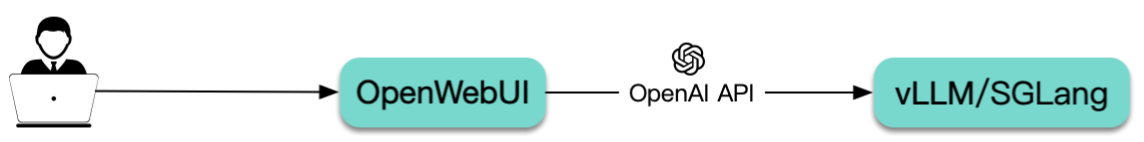

Deployment Architecture

Ollama: Provides the Ollama API.

vLLM and SGLang: Both provide OpenAI-compatible APIs.

Tool Overview

Ollama is a tool for running large models. It can be regarded as the Docker of the large model domain: it downloads the required models, exposes an API, and simplifies deployment.

vLLM is also a tool for running large models. It is heavily optimized for inference, which improves the efficiency and performance of the model so that large language models run well even with limited resources, and it provides an OpenAI-compatible API.

SGLang is similar to vLLM, with stronger performance. It is deeply optimized for DeepSeek and is also the option officially recommended by DeepSeek.

OpenWebUI is a web UI for interacting with large models. It can talk to a model through two kinds of APIs: the Ollama API and the OpenAI API.
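To make the difference between the two API styles concrete, the following sketch builds one chat request for each. It is an illustration only: `build_chat_request` and `extract_reply` are helper names made up here, and the base URLs in the usage note assume the Service names and ports defined later in this document (`vllm-api:8000`, `ollama:11434`). The endpoint paths are the chat endpoints of the OpenAI-compatible API (`/v1/chat/completions`) and of the Ollama API (`/api/chat`).

```python
import json
import urllib.request


def build_chat_request(api_style, base_url, model, prompt):
    """Return an unsent urllib Request for a single-turn chat."""
    messages = [{"role": "user", "content": prompt}]
    if api_style == "openai":
        # vLLM and SGLang serve the OpenAI-compatible chat endpoint.
        url = base_url.rstrip("/") + "/v1/chat/completions"
        body = {"model": model, "messages": messages}
    elif api_style == "ollama":
        # Ollama streams by default; ask for a single JSON reply instead.
        url = base_url.rstrip("/") + "/api/chat"
        body = {"model": model, "messages": messages, "stream": False}
    else:
        raise ValueError(f"unknown api_style: {api_style}")
    return urllib.request.Request(
        url,
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def extract_reply(api_style, response):
    """Pull the assistant text out of a parsed JSON response."""
    if api_style == "openai":
        return response["choices"][0]["message"]["content"]
    return response["message"]["content"]
```

From a Pod inside the cluster, a request built with `build_chat_request("openai", "http://vllm-api:8000", "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B", "Hello")` can be sent with `urllib.request.urlopen(req)`, and the parsed JSON body passed to `extract_reply`.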

Technology Selection

Ollama, vLLM, or SGLang?

Ollama: Suitable for individual developers or for a quick start in a local development environment. It is compatible with a wide range of GPU hardware and large models and is easy to configure, but its performance is slightly lower than that of vLLM.

vLLM: Offers better inference performance and uses resources more economically. It suits server deployments that serve multiple users, and it supports distributed deployment across multiple machines and GPUs, which gives it a higher capacity limit. However, it supports fewer types of GPU hardware than Ollama, and its startup parameters must be adjusted for the GPU and the large model in order to run at all or to achieve better performance.

SGLang: An emerging high-performance solution, optimized for specific models (such as DeepSeek), with higher throughput.

Selection suggestion: If you have some technical background and can invest effort in tuning, prefer vLLM or SGLang for deployment in a Kubernetes cluster. If you want simplicity and a fast setup, choose Ollama. This document provides deployment steps for each of these tools.

How to Store Data of AI Large Models?

AI large models typically occupy a large amount of storage space, so packaging them directly into container images is not practical. Downloading the model files automatically through initContainers during startup makes the startup time too long. Therefore, it is recommended to mount AI large models from shared storage: first download the model to shared storage through a Job, and then mount the storage volume into the Pod where the large model runs. In this way, subsequent Pod startups can skip the model download. The model still has to be loaded from shared storage over the network, but with high-performance shared storage (such as the Turbo type) this step remains fast.

In Tencent Cloud, Cloud File Storage (CFS) can be used as shared storage. CFS has high performance and high availability and is suitable for storing AI large models. The examples in this document use CFS to store AI large models.

How to Select a GPU Model?

Different instance types are equipped with different GPU models. See GPU Computing Instance and GPU Rendering Instance for the correspondence. Compared with vLLM, Ollama supports a wider range of GPUs and is more compatible with them. It is recommended that you first clarify the requirements of the selected tool and the target large model, choose a GPU model that meets them, and then use the correspondence above to determine the instance type. In addition, pay attention to the sales status and inventory of the selected instance type in each region. You can check this on the Purchase CVM page (Select Instance Family GPU Model).
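As a rough aid for matching a model to a GPU, the following sketch estimates the memory taken by the model weights alone. It is a back-of-the-envelope calculation, not a sizing guarantee: the parameter counts (7 and 32 billion) are the nominal sizes of the DeepSeek-R1 distilled models used later in this document, `usable_fraction` is an assumed safety margin, and the KV cache and activations need memory on top of the weights.

```python
def weight_memory_gib(params_billion, bytes_per_param=2.0):
    """Approximate memory needed for the model weights alone, in GiB.

    bytes_per_param: 2 for FP16/BF16, 1 for 8-bit, 0.5 for 4-bit weights.
    """
    return params_billion * 1e9 * bytes_per_param / 2**30


def weights_fit(params_billion, gpu_memory_gib, bytes_per_param=2.0,
                usable_fraction=0.9):
    """True if the weights fit in the usable share of one GPU's memory.

    usable_fraction is an assumption for illustration, not a measured value.
    """
    needed = weight_memory_gib(params_billion, bytes_per_param)
    return needed <= gpu_memory_gib * usable_fraction


for name, size_b in [("7B", 7), ("32B", 32)]:
    print(f"{name}: ~{weight_memory_gib(size_b):.1f} GiB of FP16 weights")
```

A model whose weights do not fit on one card needs a smaller or quantized variant, a GPU with more memory, or a multi-GPU deployment.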

Image Description

The images used in the examples in this document are all official images with the tag "latest". It is recommended that you replace the tag with a specific version as needed. You can view the list of image tags at the following links:

SGLang: lmsysorg/sglang

Ollama: ollama/ollama

vLLM: vllm/vllm-openai

These official images are hosted on Docker Hub and are large. TKE provides a free Docker Hub image acceleration service by default. Users in the Chinese mainland can also pull images directly from Docker Hub, but the speed may be slow, and larger images take correspondingly longer. To speed up image pulls, it is advisable to synchronize the images to Tencent Container Registry (TCR) and replace the corresponding image addresses in the YAML files.
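Replacing the image addresses is a mechanical rewrite, sketched below. The helper name `mirror_image`, the registry prefix `registry.example.com/mirror`, and the tag `v0.0.0` are placeholders invented for this illustration; substitute the address of your own TCR instance and namespace and a real version tag.

```python
def mirror_image(image, registry_prefix, pinned_tag=None):
    """Rewrite a Docker Hub image reference to point at a private mirror.

    image: a reference such as "vllm/vllm-openai:latest".
    registry_prefix: registry host plus namespace of the mirror.
    pinned_tag: optional tag that replaces the original one.
    """
    name, sep, tag = image.rpartition(":")
    if not sep or "/" in tag:
        # No tag was given, so the reference defaults to "latest".
        name, tag = image, "latest"
    if pinned_tag:
        tag = pinned_tag
    return f"{registry_prefix.rstrip('/')}/{name}:{tag}"


for ref in ["lmsysorg/sglang:latest", "ollama/ollama:latest",
            "vllm/vllm-openai:latest"]:
    print(mirror_image(ref, "registry.example.com/mirror"))
```

Passing `pinned_tag` at the same time replaces "latest" with a fixed version, in line with the recommendation above.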

Operation Steps

Step 1: Prepare a Cluster

Log in to the TKE console and create a cluster, selecting TKE standard cluster as the cluster type. For details, see Creating a Cluster.

Step 2: Prepare CFS Storage

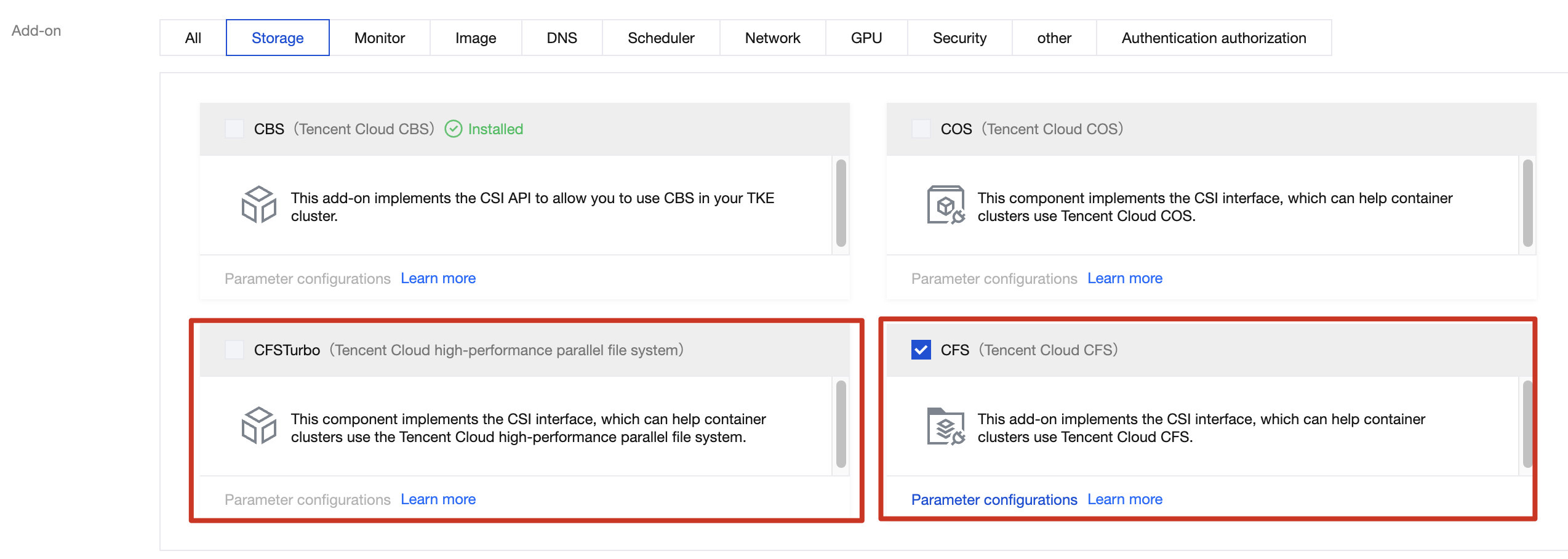

Installing CFS Component

1. In the cluster list, click the tke cluster ID to enter the cluster details page.

2. Select Component Management in the left menu bar, and click Create on the component page.

3. On the page of creating component management, check CFS (Cloud File Storage).

Notes:

You can select either CFS (Cloud File Storage) or CFSTurbo (Tencent Cloud high-performance parallel file system). This document takes CFS (Cloud File Storage) as an example.

CFS-Turbo has stronger performance and faster read-write speed, but costs more. Consider CFS-Turbo if you want large models to load and run faster.

4. Click Complete to create a component.

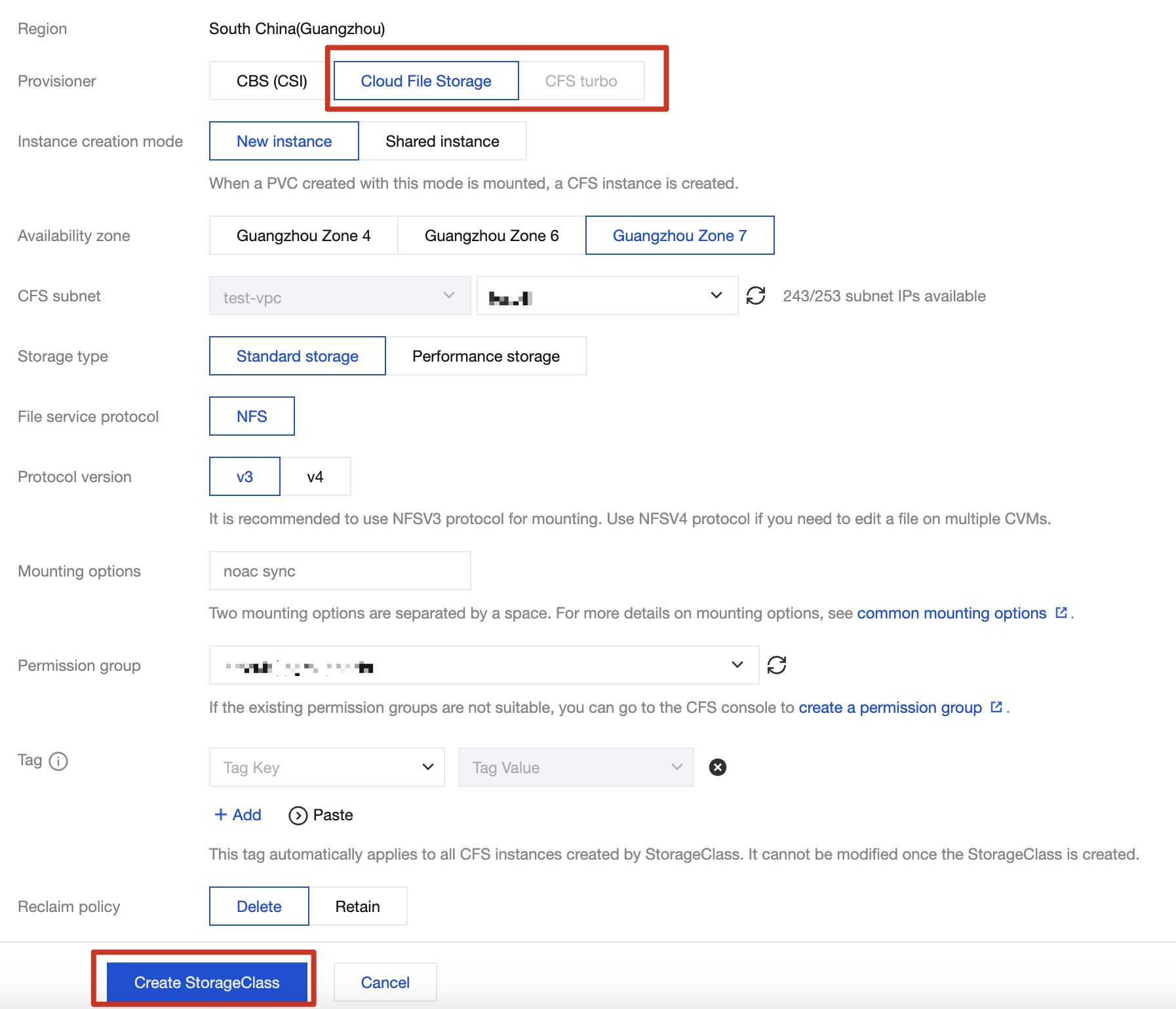

Create a StorageClass

Notes:

This step has many options. Therefore, this document uses the TKE console to create the StorageClass in the example. If you wish to create one through a YAML file, you can first use the console to create a test StorageClass and then copy the generated YAML.

1. In the cluster list, click the tke cluster ID to enter the cluster details page.

2. Select Storage in the left menu bar, and click Create on the StorageClass page.

3. On the Create Storage page, create a CFS-type StorageClass according to actual needs, with the following settings:

Notes:

If you are creating a new CFS-Turbo StorageClass, first create a CFS-Turbo file system in the CFS console, and then reference that CFS-Turbo instance when creating the StorageClass.

Name: Enter the StorageClass name. This document uses "cfs-ai" as an example.

Provisioner: Select "Cloud File Storage".

Storage type: It is recommended to choose "Performance Storage", which has a faster read-write speed than "Standard Storage".

4. Click Create StorageClass to complete the creation.

Creating a PVC

1. Log in to the TKE console. On the cluster management page, select the cluster ID to enter the basic information page of the cluster.

2. Click YAML Creation in the top right corner of the page to enter the page for creating resources via YAML.

3. Copy the following code to create a CFS type PVC used for storing AI large models:

Notes:

Replace storageClassName according to the actual situation.

For CFS, the storage size can be specified arbitrarily because the fee is calculated based on the actual occupied space.

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: ai-model
      labels:
        app: ai-model
    spec:
      storageClassName: cfs-ai
      accessModes:
      - ReadWriteMany
      resources:
        requests:
          storage: 100Gi

4. Create another PVC for OpenWebUI. You can use the same storageClassName:

    apiVersion: v1
    kind: PersistentVolumeClaim
    metadata:
      name: webui
      labels:
        app: webui
    spec:
      accessModes:
      - ReadWriteMany
      storageClassName: cfs-ai
      resources:
        requests:
          storage: 100Gi

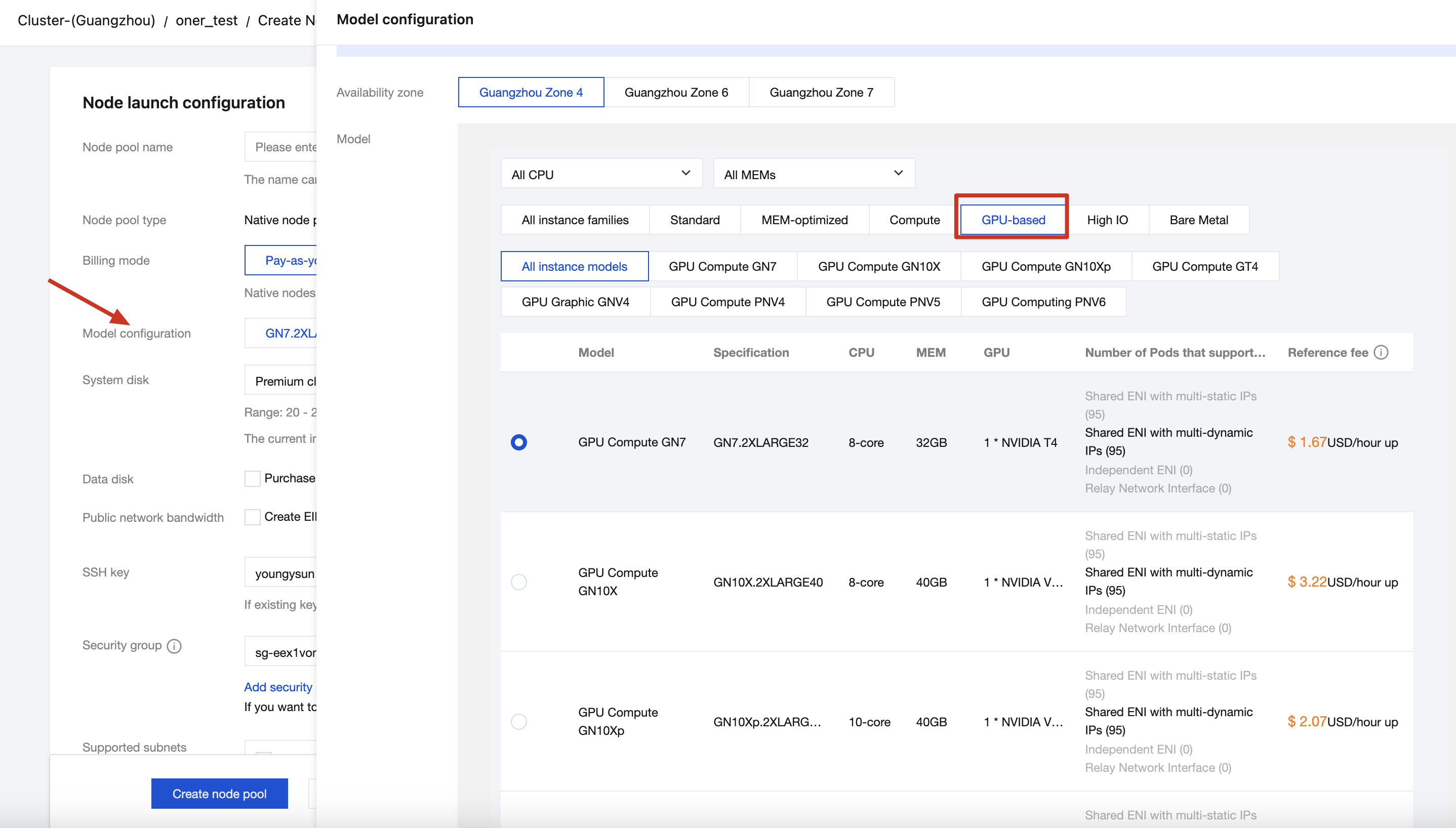

Step 3: Creating a GPU Node Pool

1. On the cluster management page, select the cluster ID and enter the basic information page of the cluster.

2. Select Node Management in the left menu bar, and click Create on the Node Pool page.

3. Select the node type. For configuration details, see Creating a Node Pool.

If you select a native node or a regular node:

It is recommended to choose a newer operating system.

The default size of the system disk and data disk is 50 GB. It is recommended to increase it, for example, to 200 GB, because AI-related images are large and can otherwise put the node under disk pressure.

In the model configuration, select a GPU model that meets your requirements and is not sold out. If there is a GPU driver option, select the latest version.

If you select a super node: A super node is a virtual node on which each Pod exclusively occupies a lightweight virtual machine, so there is no need to select a model. The GPU card model is specified through Pod annotations at deployment time (the examples below show how).

4. Click Create Node Pool.

Notes:

No explicit installation is required for the GPU plug-in. For regular nodes or native nodes, after configuring the GPU model, the system will automatically install the GPU plug-in. For super nodes, no installation of the GPU plug-in is needed.

Step 4: Use a Job to Download an AI Large Model

Launch a Job to download the required AI large model to CFS shared storage. Below are the Job examples for vLLM, SGLang, and Ollama respectively:

Notes:

Use the vLLM, SGLang, or Ollama image to run a script that downloads the required AI large model. In this example, the DeepSeek-R1 model is downloaded. To download a different large language model, change the LLM_MODEL environment variable.

If using Ollama, you can search for the required models in the Ollama Model Library.

If using vLLM or SGLang, you can search for the required models in the Hugging Face Model Library and the ModelScope Model Library. In the Chinese mainland, it is recommended to use the ModelScope Model Library to avoid download failures caused by network issues. The USE_MODELSCOPE environment variable controls whether the model is downloaded from ModelScope.

vllm-download-model-job.yaml

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: vllm-download-model
      labels:
        app: vllm-download-model
    spec:
      template:
        metadata:
          name: vllm-download-model
          labels:
            app: vllm-download-model
          annotations:
            # If using a super node, the default system disk is only 20Gi. After the vllm image is unpacked, the disk will be full.
            # Use this annotation to customize the system disk capacity (charges will apply for the part exceeding 20Gi).
            eks.tke.cloud.tencent.com/root-cbs-size: '100'
        spec:
          containers:
          - name: vllm
            image: vllm/vllm-openai:latest
            env:
            - name: LLM_MODEL
              value: deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
            - name: USE_MODELSCOPE
              value: "1"
            command:
            - bash
            - -c
            - |
              set -ex
              if [[ "$USE_MODELSCOPE" == "1" ]]; then
                exec modelscope download --local_dir=/data/$LLM_MODEL --model="$LLM_MODEL"
              else
                exec huggingface-cli download --local-dir=/data/$LLM_MODEL $LLM_MODEL
              fi
            volumeMounts:
            - name: data
              mountPath: /data
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          restartPolicy: OnFailure

sglang-download-model-job.yaml

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: sglang-download-model
      labels:
        app: sglang-download-model
    spec:
      template:
        metadata:
          name: sglang-download-model
          labels:
            app: sglang-download-model
          annotations:
            # If using a super node, the default system disk is only 20Gi. After the sglang image is unpacked, the disk will be full.
            # Use this annotation to customize the system disk capacity (charges will apply for the part exceeding 20Gi).
            eks.tke.cloud.tencent.com/root-cbs-size: '100'
        spec:
          containers:
          - name: sglang
            image: lmsysorg/sglang:latest
            env:
            - name: LLM_MODEL
              value: deepseek-ai/DeepSeek-R1-Distill-Qwen-32B
            - name: USE_MODELSCOPE
              value: "1"
            command:
            - bash
            - -c
            - |
              set -ex
              if [[ "$USE_MODELSCOPE" == "1" ]]; then
                exec modelscope download --local_dir=/data/$LLM_MODEL --model="$LLM_MODEL"
              else
                exec huggingface-cli download --local-dir=/data/$LLM_MODEL $LLM_MODEL
              fi
            volumeMounts:
            - name: data
              mountPath: /data
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          restartPolicy: OnFailure

ollama-download-model-job.yaml

    apiVersion: batch/v1
    kind: Job
    metadata:
      name: ollama-download-model
      labels:
        app: ollama-download-model
    spec:
      template:
        metadata:
          name: ollama-download-model
          labels:
            app: ollama-download-model
        spec:
          containers:
          - name: ollama
            image: ollama/ollama:latest
            env:
            - name: LLM_MODEL
              value: deepseek-r1:7b
            command:
            - bash
            - -c
            - |
              set -ex
              ollama serve &
              sleep 5 # sleep 5 seconds to wait for ollama to start
              exec ollama pull $LLM_MODEL
            volumeMounts:
            - name: data
              # Model data of ollama is stored in the /root/.ollama directory. Mount the PVC of type CFS to this path.
              mountPath: /root/.ollama
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          restartPolicy: OnFailure
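Before deploying the model server, it helps to confirm that the download Job has finished. The sketch below classifies a Job from the JSON printed by `kubectl get job <name> -o json`, using the standard `Complete` and `Failed` Job conditions. The function name `job_state` is made up for this illustration, and fetching the JSON is left to the caller.

```python
def job_state(job):
    """Classify a Job object parsed from `kubectl get job NAME -o json`.

    Returns "complete", "failed", or "running" (no terminal condition yet).
    """
    conditions = job.get("status", {}).get("conditions") or []
    for cond in conditions:
        if cond.get("status") != "True":
            continue
        if cond.get("type") == "Complete":
            return "complete"
        if cond.get("type") == "Failed":
            return "failed"
    return "running"


# Example with a trimmed Job object as the API server would report it.
finished = {"status": {"succeeded": 1,
                       "conditions": [{"type": "Complete", "status": "True"}]}}
print(job_state(finished))
```

For a one-off check, `kubectl wait --for=condition=complete job/vllm-download-model --timeout=1h` blocks until the same condition is set. The timeout value here is only an example.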

Step 5: Deploy Ollama, vLLM, or SGLang

Deploy vLLM via Deployment

For native nodes or regular nodes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: vllm
      labels:
        app: vllm
    spec:
      selector:
        matchLabels:
          app: vllm
      replicas: 1
      template:
        metadata:
          labels:
            app: vllm
        spec:
          containers:
          - name: vllm
            image: vllm/vllm-openai:latest
            imagePullPolicy: Always
            env:
            - name: PYTORCH_CUDA_ALLOC_CONF
              value: expandable_segments:True
            - name: LLM_MODEL
              value: deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
            command:
            - bash
            - -c
            - |
              vllm serve /data/$LLM_MODEL \
                --served-model-name $LLM_MODEL \
                --host 0.0.0.0 \
                --port 8000 \
                --trust-remote-code \
                --enable-chunked-prefill \
                --max_num_batched_tokens 1024 \
                --max_model_len 1024 \
                --enforce-eager \
                --tensor-parallel-size 1
            securityContext:
              runAsNonRoot: false
            ports:
            - containerPort: 8000
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /health
                port: 8000
              initialDelaySeconds: 5
              periodSeconds: 5
            resources:
              requests:
                cpu: 2000m
                memory: 2Gi
                nvidia.com/gpu: "1"
              limits:
                nvidia.com/gpu: "1"
            volumeMounts:
            - name: data
              mountPath: /data
            - name: shm
              mountPath: /dev/shm
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          # vLLM needs to access the host's shared memory for tensor parallel inference.
          - name: shm
            emptyDir:
              medium: Memory
              sizeLimit: "2Gi"
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: vllm-api
    spec:
      selector:
        app: vllm
      type: ClusterIP
      ports:
      - name: api
        protocol: TCP
        port: 8000
        targetPort: 8000

For super nodes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: vllm
      labels:
        app: vllm
    spec:
      selector:
        matchLabels:
          app: vllm
      replicas: 1
      template:
        metadata:
          labels:
            app: vllm
          annotations:
            eks.tke.cloud.tencent.com/gpu-type: V100 # Specify the GPU card model
            # For a super node, the default system disk capacity is only 20Gi. After the vllm image is unpacked, the disk will be full.
            # Use this annotation to customize the system disk capacity (charges will apply for the part exceeding 20Gi).
            eks.tke.cloud.tencent.com/root-cbs-size: '100'
        spec:
          containers:
          - name: vllm
            image: vllm/vllm-openai:latest
            imagePullPolicy: Always
            env:
            - name: PYTORCH_CUDA_ALLOC_CONF
              value: expandable_segments:True
            - name: LLM_MODEL
              value: deepseek-ai/DeepSeek-R1-Distill-Qwen-7B
            command:
            - bash
            - -c
            - |
              vllm serve /data/$LLM_MODEL \
                --served-model-name $LLM_MODEL \
                --host 0.0.0.0 \
                --port 8000 \
                --trust-remote-code \
                --enable-chunked-prefill \
                --max_num_batched_tokens 1024 \
                --max_model_len 1024 \
                --enforce-eager \
                --tensor-parallel-size 1
            securityContext:
              runAsNonRoot: false
            ports:
            - containerPort: 8000
            readinessProbe:
              failureThreshold: 3
              httpGet:
                path: /health
                port: 8000
              initialDelaySeconds: 5
              periodSeconds: 5
            resources:
              requests:
                cpu: 2000m
                memory: 2Gi
                nvidia.com/gpu: "1"
              limits:
                nvidia.com/gpu: "1"
            volumeMounts:
            - name: data
              mountPath: /data
            - name: shm
              mountPath: /dev/shm
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          # vLLM needs to access the host's shared memory for tensor parallel inference.
          - name: shm
            emptyDir:
              medium: Memory
              sizeLimit: "2Gi"
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: vllm-api
    spec:
      selector:
        app: vllm
      type: ClusterIP
      ports:
      - name: api
        protocol: TCP
        port: 8000
        targetPort: 8000

1. The --served-model-name parameter specifies the large model name. It should be consistent with the name specified in the previous download Job.

2. The model data comes from the PVC populated by the previous download Job, which is mounted under the /data directory.

3. vLLM listens on port 8000 to expose the API. The Service is defined so that OpenWebUI can call it later.
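One way to confirm that the served name matches the download Job is to list the models the server reports. The sketch below inspects the JSON returned by the OpenAI-compatible `GET /v1/models` endpoint. The helper names are made up for this illustration, and the sample response is a trimmed, hand-written stand-in for what the server returns.

```python
def served_model_ids(models_response):
    """Extract the model ids from a parsed GET /v1/models response."""
    return [entry["id"] for entry in models_response.get("data", [])]


def check_served_model(models_response, expected):
    """Raise LookupError if the expected model name is not being served."""
    ids = served_model_ids(models_response)
    if expected not in ids:
        raise LookupError(f"{expected!r} not served; server reports {ids}")
    return True


# Hand-written sample in the shape of an OpenAI-style model list.
sample = {
    "object": "list",
    "data": [{"id": "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B", "object": "model"}],
}
print(check_served_model(sample, "deepseek-ai/DeepSeek-R1-Distill-Qwen-7B"))
```

Inside the cluster, the real response can be read from `http://vllm-api:8000/v1/models`, for example with `urllib.request.urlopen` and `json.load`.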

Deploy SGLang through Deployment

For native nodes or regular nodes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sglang
      labels:
        app: sglang
    spec:
      selector:
        matchLabels:
          app: sglang
      replicas: 1
      template:
        metadata:
          labels:
            app: sglang
        spec:
          containers:
          - name: sglang
            image: lmsysorg/sglang:latest
            env:
            - name: LLM_MODEL
              value: deepseek-ai/DeepSeek-R1-Distill-Qwen-32B
            command:
            - bash
            - -c
            - |
              set -x
              exec python3 -m sglang.launch_server \
                --host 0.0.0.0 \
                --port 30000 \
                --model-path /data/$LLM_MODEL
            resources:
              limits:
                nvidia.com/gpu: "1"
            ports:
            - containerPort: 30000
            volumeMounts:
            - name: data
              mountPath: /data
            - name: shm
              mountPath: /dev/shm
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          - name: shm
            emptyDir:
              medium: Memory
              sizeLimit: 40Gi
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: sglang
    spec:
      selector:
        app: sglang
      type: ClusterIP
      ports:
      - name: api
        protocol: TCP
        port: 30000
        targetPort: 30000

For super nodes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: sglang
      labels:
        app: sglang
    spec:
      selector:
        matchLabels:
          app: sglang
      replicas: 1
      template:
        metadata:
          labels:
            app: sglang
          annotations:
            eks.tke.cloud.tencent.com/gpu-type: V100 # Specify the GPU card model
            # For a super node, the default system disk capacity is only 20Gi. After the sglang image is unpacked, the disk will be full.
            # Use this annotation to customize the system disk capacity (charges will apply for the part exceeding 20Gi).
            eks.tke.cloud.tencent.com/root-cbs-size: '100'
        spec:
          containers:
          - name: sglang
            image: lmsysorg/sglang:latest
            env:
            - name: LLM_MODEL
              value: deepseek-ai/DeepSeek-R1-Distill-Qwen-32B
            command:
            - bash
            - -c
            - |
              set -x
              exec python3 -m sglang.launch_server \
                --host 0.0.0.0 \
                --port 30000 \
                --model-path /data/$LLM_MODEL
            resources:
              limits:
                nvidia.com/gpu: "1"
            ports:
            - containerPort: 30000
            volumeMounts:
            - name: data
              mountPath: /data
            - name: shm
              mountPath: /dev/shm
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          - name: shm
            emptyDir:
              medium: Memory
              sizeLimit: 40Gi
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: sglang
    spec:
      selector:
        app: sglang
      type: ClusterIP
      ports:
      - name: api
        protocol: TCP
        port: 30000
        targetPort: 30000

1. The LLM_MODEL environment variable specifies the large model name, which should be consistent with the name specified in the previous Job.

2. The model data comes from the PVC populated by the previous download Job, which is mounted under the /data directory.

3. SGLang listens on port 30000 to expose the API. The Service is defined so that OpenWebUI can call it later.

Deploy Ollama through Deployment

For native nodes or regular nodes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ollama
      labels:
        app: ollama
    spec:
      selector:
        matchLabels:
          app: ollama
      replicas: 1
      template:
        metadata:
          labels:
            app: ollama
        spec:
          containers:
          - name: ollama
            image: ollama/ollama:latest
            imagePullPolicy: IfNotPresent
            command: ["ollama", "serve"]
            env:
            - name: OLLAMA_HOST
              value: ":11434"
            resources:
              requests:
                cpu: 2000m
                memory: 2Gi
                nvidia.com/gpu: "1"
              limits:
                cpu: 4000m
                memory: 4Gi
                nvidia.com/gpu: "1"
            ports:
            - containerPort: 11434
              name: ollama
            volumeMounts:
            - name: data
              mountPath: /root/.ollama
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ollama
    spec:
      selector:
        app: ollama
      type: ClusterIP
      ports:
      - name: server
        protocol: TCP
        port: 11434
        targetPort: 11434

For super nodes:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: ollama
      labels:
        app: ollama
    spec:
      selector:
        matchLabels:
          app: ollama
      replicas: 1
      template:
        metadata:
          labels:
            app: ollama
          annotations:
            eks.tke.cloud.tencent.com/gpu-type: V100
        spec:
          containers:
          - name: ollama
            image: ollama/ollama:latest
            imagePullPolicy: IfNotPresent
            command: ["ollama", "serve"]
            env:
            - name: OLLAMA_HOST
              value: ":11434"
            resources:
              requests:
                cpu: 2000m
                memory: 2Gi
                nvidia.com/gpu: "1"
              limits:
                cpu: 4000m
                memory: 4Gi
                nvidia.com/gpu: "1"
            ports:
            - containerPort: 11434
              name: ollama
            volumeMounts:
            - name: data
              mountPath: /root/.ollama
          volumes:
          - name: data
            persistentVolumeClaim:
              claimName: ai-model
          restartPolicy: Always
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: ollama
    spec:
      selector:
        app: ollama
      type: ClusterIP
      ports:
      - name: server
        protocol: TCP
        port: 11434
        targetPort: 11434

1. Ollama stores its model data in the /root/.ollama directory, so the CFS-type PVC that holds the downloaded AI large model must be mounted at this path.

2. Ollama listens on port 11434 to expose the API. The Service is defined so that OpenWebUI can call it later.

3. By default, Ollama listens only on the loopback address (127.0.0.1). Setting the OLLAMA_HOST environment variable makes it accept connections on port 11434 from outside the Pod.

Notes:

Running large models requires GPUs. Therefore, the nvidia.com/gpu resource is specified in requests/limits so that Pods are scheduled onto GPU nodes and allocated GPU cards.

If you want to run large models on super nodes, you can specify the GPU type through the Pod annotation eks.tke.cloud.tencent.com/gpu-type. The options include V100, T4, A10*PNV4, A10*GNV4. For details, see GPU specification.

Step 6: Configure GPU Auto Scaling (AS)

To implement auto scaling for GPU resources, follow the steps below to configure. The GPU Pod provides multiple monitoring metrics. For details, see GPU Monitoring Metrics. You can configure HPA based on these metrics to achieve auto scaling for GPU Pods. For example, the configuration example based on GPU utilization rate is as follows:

    apiVersion: autoscaling/v2
    kind: HorizontalPodAutoscaler
    metadata:
      name: vllm
    spec:
      minReplicas: 1
      maxReplicas: 2
      scaleTargetRef:
        apiVersion: apps/v1
        kind: Deployment
        name: vllm
      metrics: # See more GPU metrics at https://www.tencentcloud.com/document/product/457/38929?from_cn_redirect=1#gpu
      - pods:
          metric:
            name: k8s_pod_rate_gpu_used_request # GPU utilization (as a percentage of request)
          target:
            averageValue: "80"
            type: AverageValue
        type: Pods
      behavior:
        scaleDown:
          policies:
          - periodSeconds: 15
            type: Percent
            value: 100
          selectPolicy: Max
          stabilizationWindowSeconds: 300
        scaleUp:
          policies:
          - periodSeconds: 15
            type: Percent
            value: 100
          - periodSeconds: 15
            type: Pods
            value: 4
          selectPolicy: Max
          stabilizationWindowSeconds: 0

Notes:

Because GPU resources are usually scarce, it may not be possible to obtain them again after a scale-down. If you do not want scale-down to be triggered, you can configure the HPA to forbid it as follows:

    behavior:
      scaleDown:
        selectPolicy: Disabled
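To see how the averageValue target of 80 in the HPA example translates into a replica count, the sketch below applies the basic scaling rule documented for the Kubernetes HPA: the desired count is the current count multiplied by the ratio of the observed average to the target, rounded up, and left unchanged when the ratio is within the default tolerance of 0.1. The function name is made up for this illustration, and the stabilization windows and rate policies under `behavior` are deliberately not modelled.

```python
import math


def desired_replicas(current_replicas, current_avg, target_avg,
                     min_replicas, max_replicas, tolerance=0.1):
    """Replica count the basic HPA rule would ask for.

    current_avg: observed per-Pod average of the metric.
    target_avg: the averageValue target from the HPA spec.
    """
    ratio = current_avg / target_avg
    if abs(ratio - 1.0) <= tolerance:
        # Close enough to the target: keep the current size.
        desired = current_replicas
    else:
        desired = math.ceil(current_replicas * ratio)
    # The result is always clamped to the configured bounds.
    return max(min_replicas, min(max_replicas, desired))


# One Pod at 95% GPU utilization against a target of 80, bounds 1..2.
print(desired_replicas(1, 95, 80, min_replicas=1, max_replicas=2))
```

With `selectPolicy: Disabled` on scaleDown, a result lower than the current replica count is simply not acted on.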

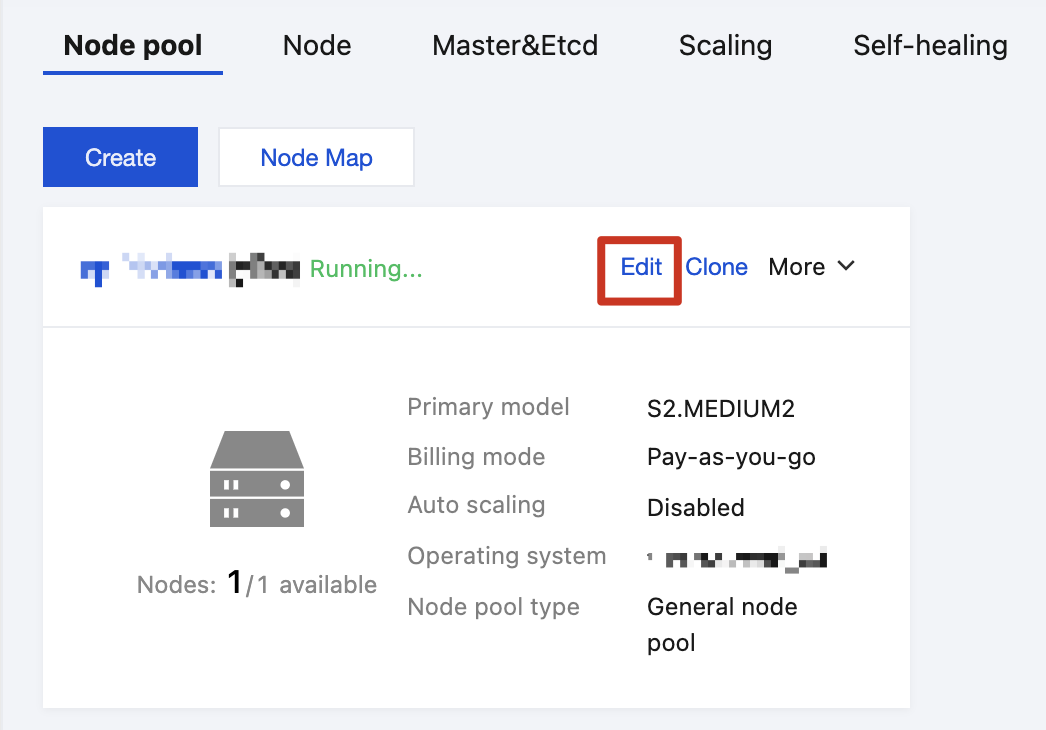

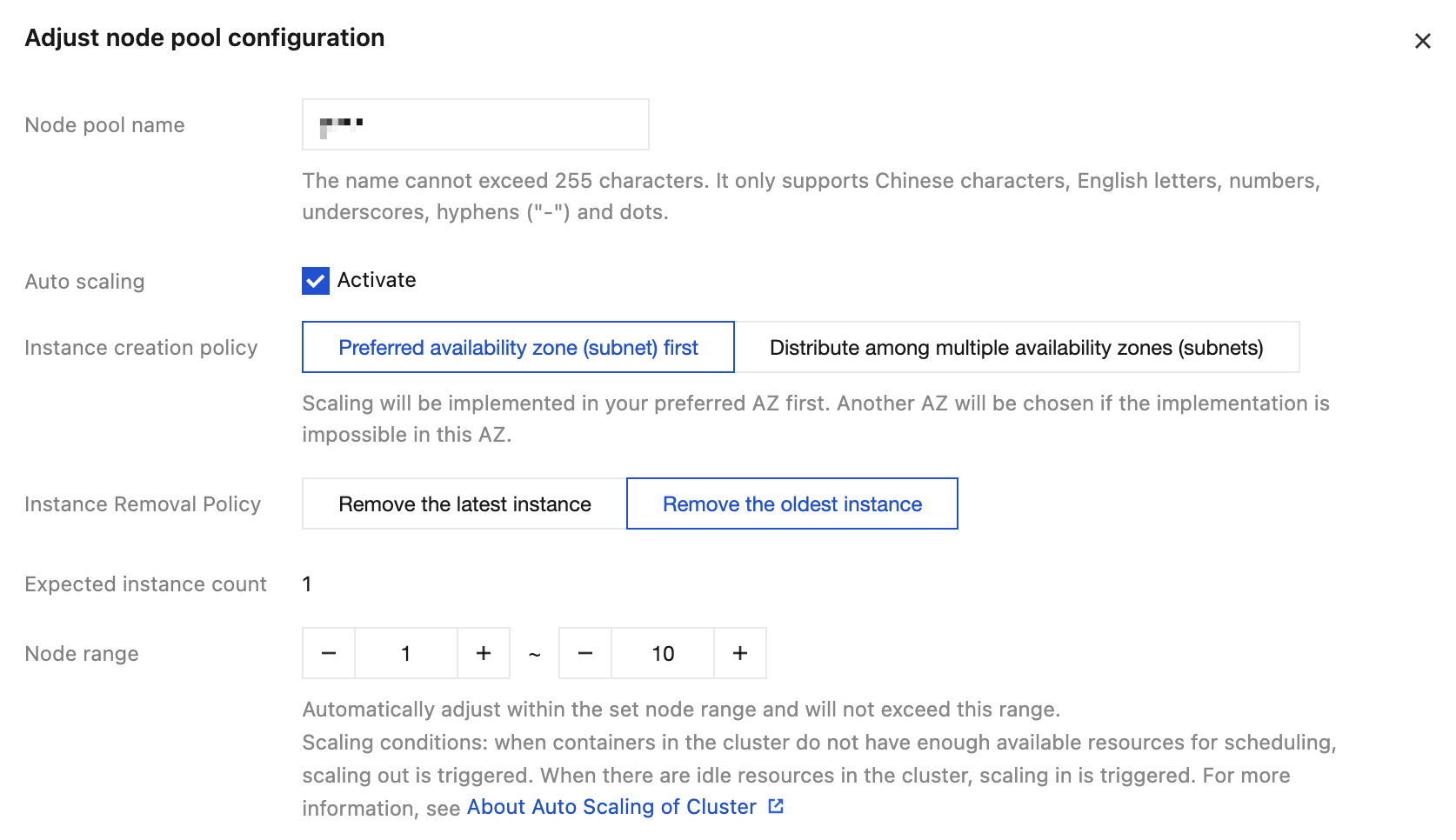

If using native nodes or regular nodes, you also need to enable auto scaling for the node pool. Otherwise, after the GPU Pods scale out, the new Pods remain in Pending status because no GPU node is available. To enable auto scaling for the node pool:

1. Log in to the TKE console. On the cluster management page, select the cluster ID to enter the basic information page of the cluster.

2. Select Node Management in the left menu bar. On the Node Pool page, select Edit on the right of the node pool. This document takes a regular node pool as an example.

3. In the node pool configuration, check the option to enable AS and set the node quantity range.

4. Click OK.

Step 7: Deploy OpenWebUI

Deploy OpenWebUI using a Deployment and define a Service for it so that it can be exposed externally later. The backend API can be provided by vLLM, SGLang, or Ollama. The following are OpenWebUI deployment examples for each backend:

With vLLM as the backend:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webui
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: webui
      template:
        metadata:
          labels:
            app: webui
        spec:
          containers:
          - name: webui
            image: imroc/open-webui:main # mirror image on Docker Hub, kept in sync automatically over the long term, safe to use
            env:
            - name: OPENAI_API_BASE_URL
              value: http://vllm-api:8000/v1 # domain name or IP address of vLLM
            - name: ENABLE_OLLAMA_API # Disable Ollama API, keep only OpenAI API
              value: "False"
            tty: true
            ports:
            - containerPort: 8080
            resources:
              requests:
                cpu: "500m"
                memory: "500Mi"
              limits:
                cpu: "1000m"
                memory: "1Gi"
            volumeMounts:
            - name: webui-volume
              mountPath: /app/backend/data
          volumes:
          - name: webui-volume
            persistentVolumeClaim:
              claimName: webui
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: webui
      labels:
        app: webui
    spec:
      type: ClusterIP
      ports:
      - port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        app: webui

With SGLang as the backend:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webui
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: webui
      template:
        metadata:
          labels:
            app: webui
        spec:
          containers:
          - name: webui
            image: imroc/open-webui:main # mirror image on Docker Hub, kept in sync automatically over the long term, safe to use
            env:
            - name: OPENAI_API_BASE_URL
              value: http://sglang:30000/v1 # domain name or IP address of sglang
            - name: ENABLE_OLLAMA_API # Disable Ollama API, keep only OpenAI API
              value: "False"
            tty: true
            ports:
            - containerPort: 8080
            resources:
              requests:
                cpu: "500m"
                memory: "500Mi"
              limits:
                cpu: "1000m"
                memory: "1Gi"
            volumeMounts:
            - name: webui-volume
              mountPath: /app/backend/data
          volumes:
          - name: webui-volume
            persistentVolumeClaim:
              claimName: webui
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: webui
      labels:
        app: webui
    spec:
      type: ClusterIP
      ports:
      - port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        app: webui

With Ollama as the backend:

    apiVersion: apps/v1
    kind: Deployment
    metadata:
      name: webui
    spec:
      replicas: 1
      selector:
        matchLabels:
          app: webui
      template:
        metadata:
          labels:
            app: webui
        spec:
          containers:
          - name: webui
            image: imroc/open-webui:main # mirror image on Docker Hub, kept in sync automatically over the long term, safe to use
            env:
            - name: OLLAMA_BASE_URL
              value: http://ollama:11434 # domain name or IP address of ollama
            - name: ENABLE_OPENAI_API # Disable OpenAI API, keep only Ollama API
              value: "False"
            tty: true
            ports:
            - containerPort: 8080
            resources:
              requests:
                cpu: "500m"
                memory: "500Mi"
              limits:
                cpu: "1000m"
                memory: "1Gi"
            volumeMounts:
            - name: webui-volume
              mountPath: /app/backend/data
          volumes:
          - name: webui-volume
            persistentVolumeClaim:
              claimName: webui
    ---
    apiVersion: v1
    kind: Service
    metadata:
      name: webui
      labels:
        app: webui
    spec:
      type: ClusterIP
      ports:
      - port: 8080
        protocol: TCP
        targetPort: 8080
      selector:
        app: webui

Notes:

OpenWebUI stores its data (such as accounts, passwords, and chat history) in the /app/backend/data directory. This document mounts the PVC at this path.

Step 8: Expose OpenWebUI and Have a Dialogue with the Model

Local Testing

For local testing only, you can use the kubectl port-forward command to expose the Service:

Notes:

This requires that kubectl on your local machine can connect to the cluster. See Connecting to Cluster.

    kubectl port-forward service/webui 8080:8080

Then visit http://127.0.0.1:8080 in the browser.

Expose Services Via Ingress or Gateway API

You can also expose services through Ingress or Gateway API. The following are related examples:

Notes:

Use Gateway API. Ensure that your cluster has an implementation of Gateway API, such as EnvoyGateway in the TKE Application Market. For specific Gateway API usage, please see Gateway API Official Documentation.

apiVersion: gateway.networking.k8s.io/v1kind: HTTPRoutemetadata:name: aispec:parentRefs:- group: gateway.networking.k8s.iokind: Gatewaynamespace: envoy-gateway-systemname: ai-gatewayhostnames:- "ai.your.domain"rules:- backendRefs:- group: ""kind: Servicename: webuiport: 8080

Note:

1.

parentRefs refer to a well-defined Gateway (normally, one Gateway corresponds to one CLB).2.

hostnames: Replace with your own domain name and ensure the domain name can be resolved normally to the CLB address corresponding to the Gateway.3.

backendRefs: Specify the Service for OpenWebUI.apiVersion: networking.k8s.io/v1kind: Ingressmetadata:name: webuispec:rules:- host: "ai.your.domain"http:paths:- path: /pathType: Prefixbackend:service:name: webuiport:number: 8080

Note:

1.

host field: Enter your custom domain name and ensure the domain name can be resolved normally to the CLB address corresponding to the Ingress.2.

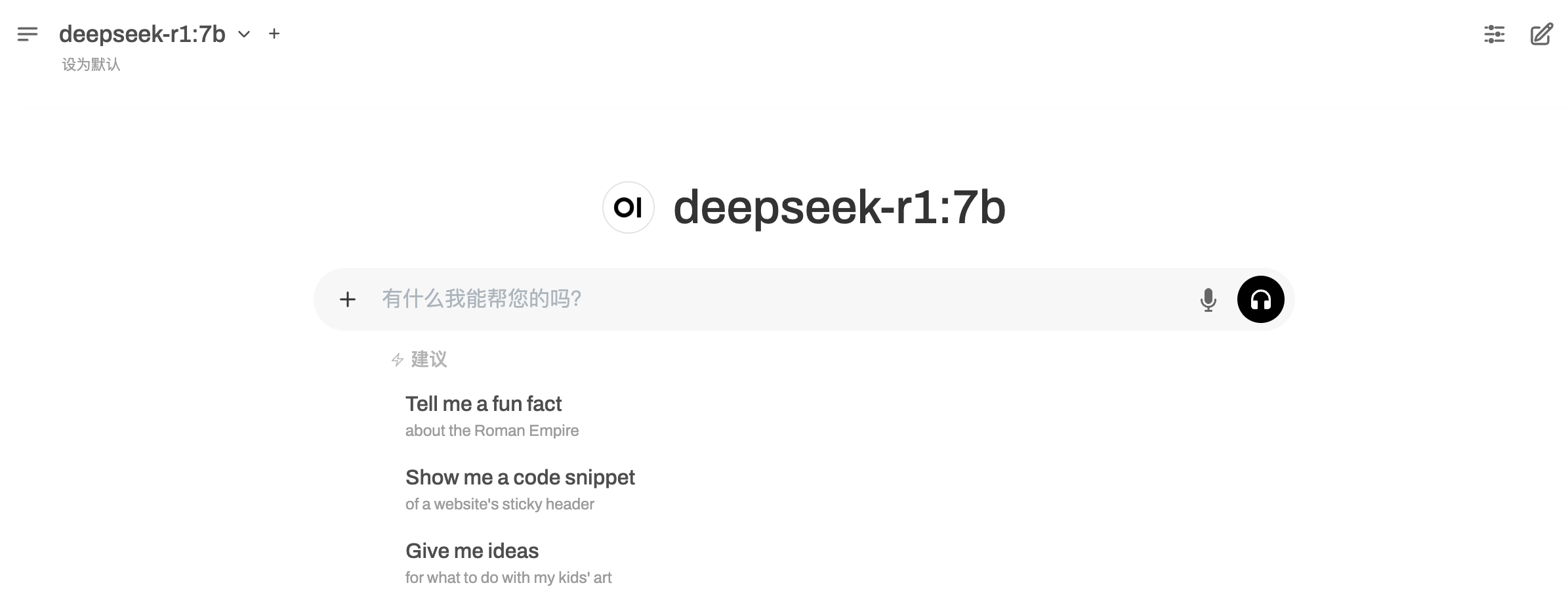

backend.service: It needs to be specified as the Service for OpenWebUI.Once configured, visit the corresponding address in the browser to enter the OpenWebUI page.

Log in for the First Time

When you first open OpenWebUI, you are prompted to create an administrator account and password. After creating it, you can log in. By default, OpenWebUI then loads the previously deployed large language model (LLM) for dialogue.
