Migrating Data from External Kafka Cluster to CKafka

Last updated: 2021-05-28 17:01:49

This document is currently invalid. Please refer to the documentation page of the product.

Choose the migration method that best fits your business scenario and follow the steps below to migrate your business data to the cloud. This guide mainly covers data migration from CVM to CKafka, which can be done over the private network. If the data stream must be accessed over the public network, first enable public IP access for your CKafka instance.

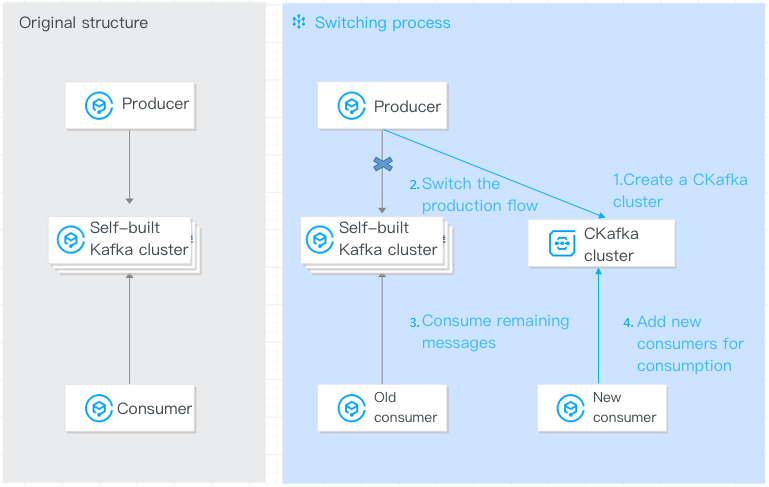

Migrating Data to CKafka with Message Ordering Guaranteed

Guaranteeing message ordering requires strictly limiting data consumption to a single consumer, so the timing of the migration is vital. The migration steps are as follows:

Detailed directions:

- Log in to the CKafka Console and create a CKafka instance and a corresponding topic.

  For detailed directions, please see Creating Instances and Creating Topics.

- Switch the production flow so that the producer produces data to the CKafka instance.

  Change the IP in `--broker-list` to the VIP of the CKafka instance and `topicName` to the topic name in the CKafka instance:

  `./kafka-console-producer.sh --broker-list xxx.xxx.xxx.xxx:9092 --topic topicName`

- The original consumer does not need to be reconfigured and can continue to consume the data in your self-built Kafka cluster. Once it has consumed all remaining data, have new consumers consume data from the CKafka cluster using the following configuration (let only one consumer consume the data to guarantee message ordering).

  To add a new consumer, set the IP in `--bootstrap-server` to the VIP of the CKafka instance:

  `./kafka-console-consumer.sh --bootstrap-server xxx.xxx.xxx.xxx:9092 --from-beginning --new-consumer --topic topicName --consumer.config ../config/consumer.properties`

- The new consumer continues to consume data from the CKafka cluster, and the migration is complete (if the original consumer is a CVM instance, it can continue to consume the data).

Note: The above commands are test commands. In actual business operations, just modify the broker address configured for the corresponding application and then restart the application.
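The ordered migration depends on knowing when the original consumer has finished reading the self-built cluster. One possible check, sketched here, is to sum the `LAG` column reported by `kafka-consumer-groups.sh --describe` for the original consumer group and start the new consumer only once the total is zero. The helper name `total_lag` and the group name in the usage comment are illustrative assumptions, not part of the original guide. The column layout of the `--describe` output differs between Kafka versions, so the helper locates `LAG` by its header name instead of a fixed position.

```shell
#!/usr/bin/env bash
# total_lag (hypothetical helper): read `kafka-consumer-groups.sh --describe`
# output on stdin and print the summed LAG across all listed partitions.
# Exits with status 1 if no header row containing a LAG column is found.
total_lag() {
  awk '
    # Until the header is seen, scan each line for a column named LAG.
    lag_col == 0 {
      for (i = 1; i <= NF; i++) if ($i == "LAG") lag_col = i
      next
    }
    # Data rows: add numeric lag values. Rows showing "-" (no committed
    # offset) are skipped, so inspect those partitions manually.
    $lag_col ~ /^[0-9]+$/ { sum += $lag_col }
    END {
      if (lag_col == 0) exit 1
      print sum + 0
    }
  '
}

# Usage against the self-built cluster (address and group are placeholders):
#   ./kafka-consumer-groups.sh --bootstrap-server xxx.xxx.xxx.xxx:9092 \
#       --describe --group my-group | total_lag
```

A printed total of `0` suggests the original consumer has caught up. Because the producer was already switched to CKafka in the previous step, the lag on the self-built cluster should only decrease.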

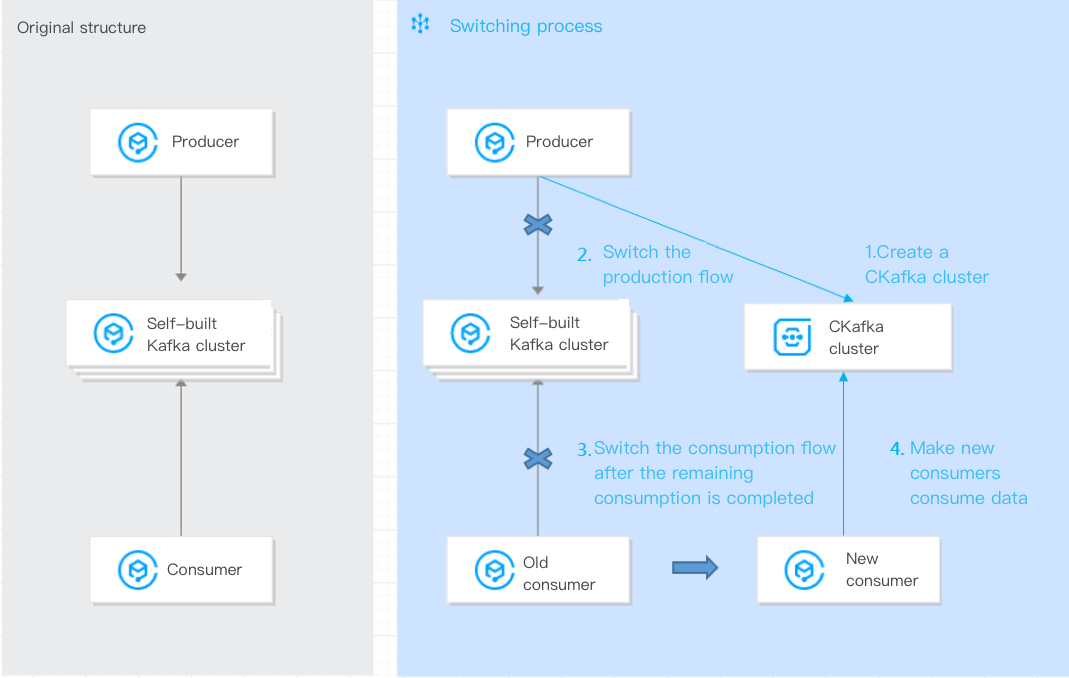

Migrating Data to CKafka Without Message Ordering Guaranteed

If strict message ordering is not required, the data can be migrated while multiple consumers consume it in parallel. The migration steps are as follows:

Detailed directions:

- Log in to the CKafka Console and create a CKafka instance and a corresponding topic.

  For detailed directions, please see Creating Instances and Creating Topics.

- Switch the production flow so that the producer produces data to the CKafka instance.

  Change the IP in `--broker-list` to the VIP of the CKafka instance and `topicName` to the topic name in the CKafka instance:

  `./kafka-console-producer.sh --broker-list xxx.xxx.xxx.xxx:9092 --topic topicName`

- The original consumer does not need to be reconfigured and can continue to consume the data in your self-built Kafka cluster. Meanwhile, new consumers can be added to consume data from the CKafka cluster. Once all data in the original self-built cluster has been consumed, the migration is complete (suitable for scenarios that do not require message ordering).

  Set the IP in `--bootstrap-server` to the VIP of the CKafka instance:

  `./kafka-console-consumer.sh --bootstrap-server xxx.xxx.xxx.xxx:9092 --from-beginning --new-consumer --topic topicName --consumer.config ../config/consumer.properties`

Note: The above commands are test commands. In actual business operations, just modify the broker address configured for the corresponding application and then restart the application.
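For a real application, the note above amounts to editing the broker address in the client configuration and restarting the application. The following is a minimal sketch, assuming the application reads a Java-style properties file that stores the address as `bootstrap.servers=host:port` with no spaces around `=`. The helper name `set_bootstrap_servers` and the file path in the usage comment are illustrative assumptions, not part of the original guide.

```shell
#!/usr/bin/env bash
# set_bootstrap_servers FILE ADDRESS (hypothetical helper)
# Rewrite the bootstrap.servers entry in FILE to ADDRESS, or append the
# entry if the file does not contain one. ADDRESS is expected to be a
# plain host:port list (no "|" or "&" characters).
set_bootstrap_servers() {
  local file=$1 addr=$2 tmp
  tmp=$(mktemp) || return 1
  if grep -q '^bootstrap\.servers=' "$file"; then
    # Replace the existing value, leaving all other keys untouched.
    sed "s|^bootstrap\.servers=.*|bootstrap.servers=${addr}|" "$file" > "$tmp"
  else
    cat "$file" > "$tmp"
    printf 'bootstrap.servers=%s\n' "$addr" >> "$tmp"
  fi
  # Write back in place so the original file permissions are preserved.
  cat "$tmp" > "$file" && rm -f "$tmp"
}

# Usage (path and address are placeholders for your own values):
#   set_bootstrap_servers ../config/consumer.properties xxx.xxx.xxx.xxx:9092
```

The function goes through a temporary file and avoids `sed -i`, whose syntax differs between GNU and BSD `sed`. Restart the application afterwards so the new address takes effect.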
