Using Apache Ranger to Manage GooseFS Access Permissions

Last updated: 2024-03-25 16:04:01

Overview

Apache Ranger is a standardized authentication component that manages access permissions across the big data ecosystem. GooseFS, as an acceleration storage system used for big data and data lakes, can be integrated into the comprehensive Apache Ranger authentication platform. This document describes how to use Apache Ranger to manage access permissions for GooseFS.

Advantages

GooseFS is a cloud-native accelerated storage system that supports Apache Ranger access permission management in nearly the same way as HDFS does. HDFS big data users can therefore migrate to GooseFS easily and reuse their HDFS Ranger permission policies.

Compared with HDFS with Ranger, GooseFS with Ranger offers a "Ranger + native ACL" authentication option: when Ranger authentication fails, the native ACL authentication is used instead, which compensates for incomplete Ranger authentication policy configurations.

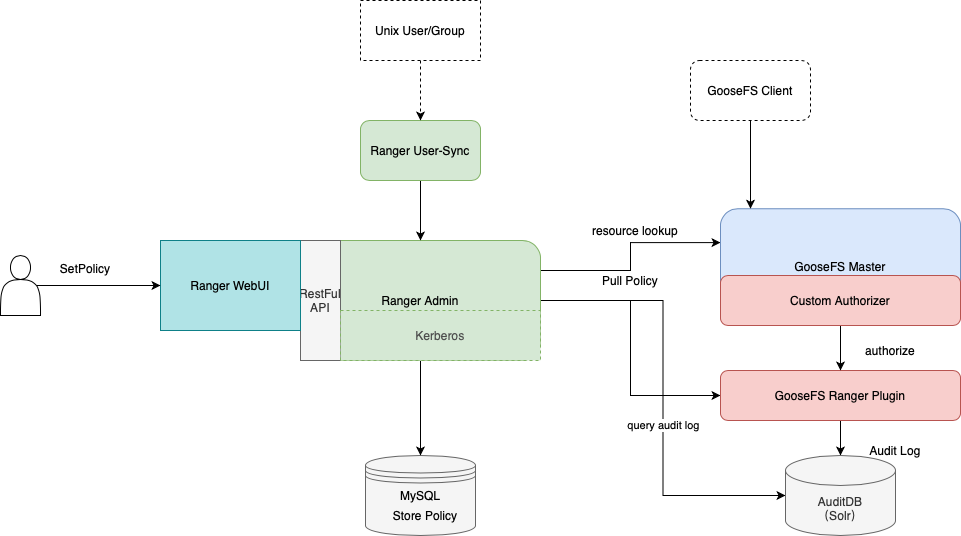
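The "Ranger + native ACL" option described above can be read as a two-stage decision. The following is a minimal sketch of that reading, not GooseFS code: the function name `authorize` and the `allow`/`deny` strings are hypothetical, and it assumes that "Ranger authentication fails" means the Ranger policies did not grant the request.

```shell
#!/bin/sh
# Illustrative sketch of "Ranger + native ACL" authentication.
# Assumption: both checks have already been reduced to "allow" or "deny".
# authorize is a hypothetical helper, not part of GooseFS or Ranger.
authorize() {
  ranger_decision=$1  # outcome of the Ranger policy check
  acl_decision=$2     # outcome of the native ACL check

  if [ "$ranger_decision" = "allow" ]; then
    echo "allow"      # Ranger granted the request; the ACL is not consulted
  elif [ "$acl_decision" = "allow" ]; then
    echo "allow"      # Ranger did not grant it; fall back to the native ACL
  else
    echo "deny"       # neither check granted the request
  fi
}

# Ranger has no matching policy, but the native ACL permits the access.
authorize deny allow   # prints: allow
```

With a fallback like this, a path that has no Ranger policy yet is still governed by its existing ACL instead of being rejected outright.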

Framework of GooseFS with Ranger

To integrate GooseFS into Ranger, we developed the GooseFS Ranger plugin, which is deployed on both the GooseFS master node and Ranger Admin. The plugin does the following:

On the GooseFS master node:

Provides the Authorizer API to authenticate each metadata request on the GooseFS master node.

Connects to Ranger Admin to obtain user-configured authentication policies.

On the Ranger Admin:

Supports GooseFS resource lookup for Ranger Admin.

Verifies configurations.

Deployment

Preparations

Before you start, ensure that you have deployed and configured Ranger components (including Ranger Admin and Ranger UserSync) in the environment and can open and use the Ranger web UI normally.

Component Deployment

Deploying GooseFS Ranger plugin to Ranger Admin and registering service

Deploy as follows:

1. Locate the Ranger service definition directory:

   - If you use a Tencent Cloud EMR cluster, the Ranger service definition directory is /usr/local/service/ranger/ews/webapp/WEB-INF/classes/ranger-plugins.
   - If you use a self-built Hadoop cluster, you can search for the path of a Ranger-integrated component (such as HDFS) in Ranger to locate the directory.

2. Create a GooseFS directory in the Ranger service definition directory. You need at least execute and read permissions on the directory.

3. Put goosefs-ranger-plugin-${version}.jar and ranger-servicedef-goosefs.json in the GooseFS directory. You need read permission on both files.

4. Restart Ranger.

5. In Ranger, run the following commands to register the GooseFS service:

    # Create the service. The Ranger admin account and password, as well as the Ranger service address should be specified.
    # For the Tencent Cloud EMR cluster, the admin is the root, and the password is the root account's password that is set when the EMR cluster is created. The Ranger service IP is the master node IP of the EMR.
    adminUser=root
    adminPasswd=xxxx
    rangerServerAddr=10.0.0.1:6080
    curl -v -u${adminUser}:${adminPasswd} -X POST -H "Accept:application/json" -H "Content-Type:application/json" -d @./ranger-servicedef-goosefs.json http://${rangerServerAddr}/service/plugins/definitions

    # When the service is successfully registered, a service ID will be returned, which should be remembered.
    # To delete the GooseFS service, pass the service ID returned to run the following command:
    serviceId=104
    curl -v -u${adminUser}:${adminPasswd} -X DELETE -H "Accept:application/json" -H "Content-Type:application/json" http://${rangerServerAddr}/service/plugins/definitions/${serviceId}

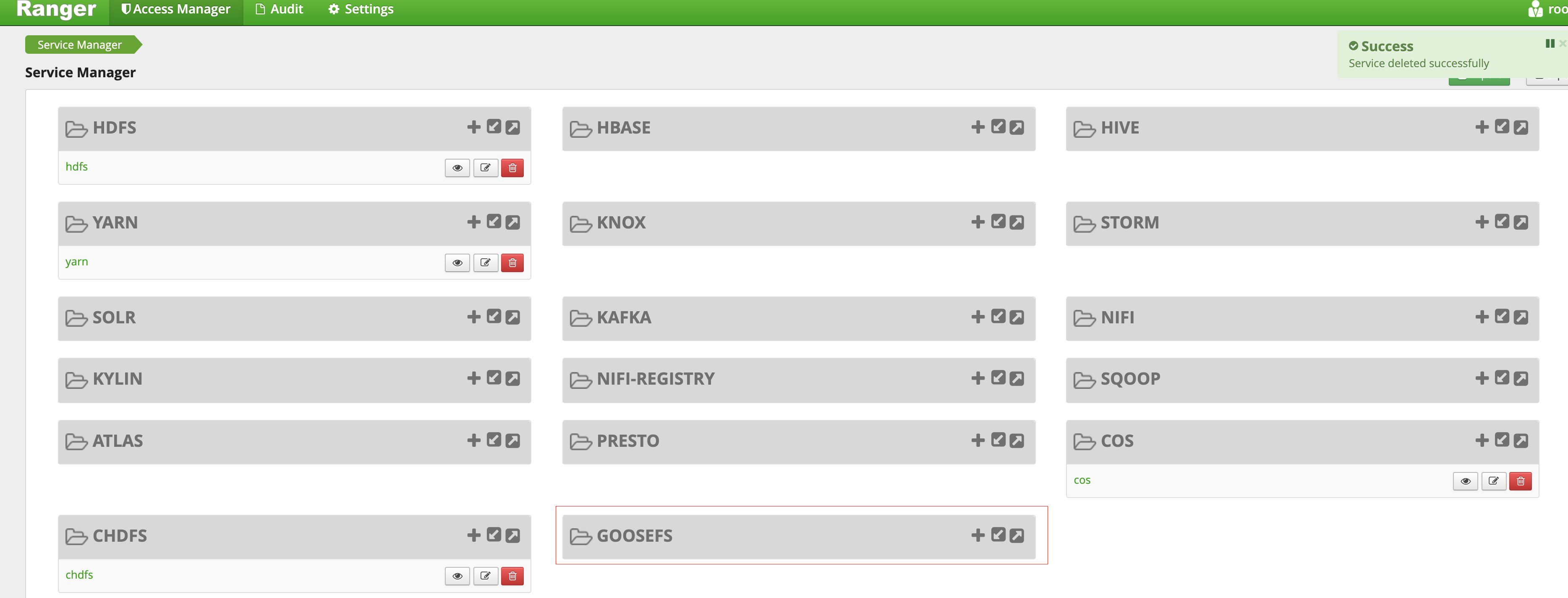

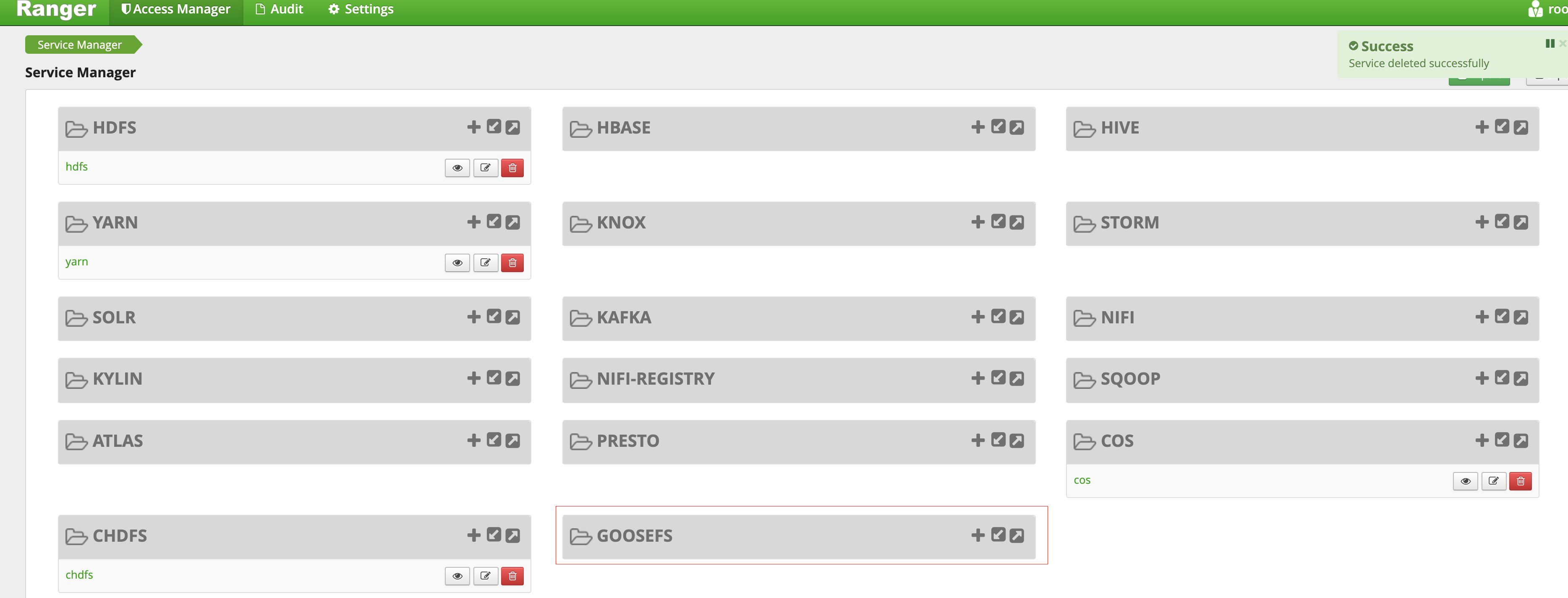

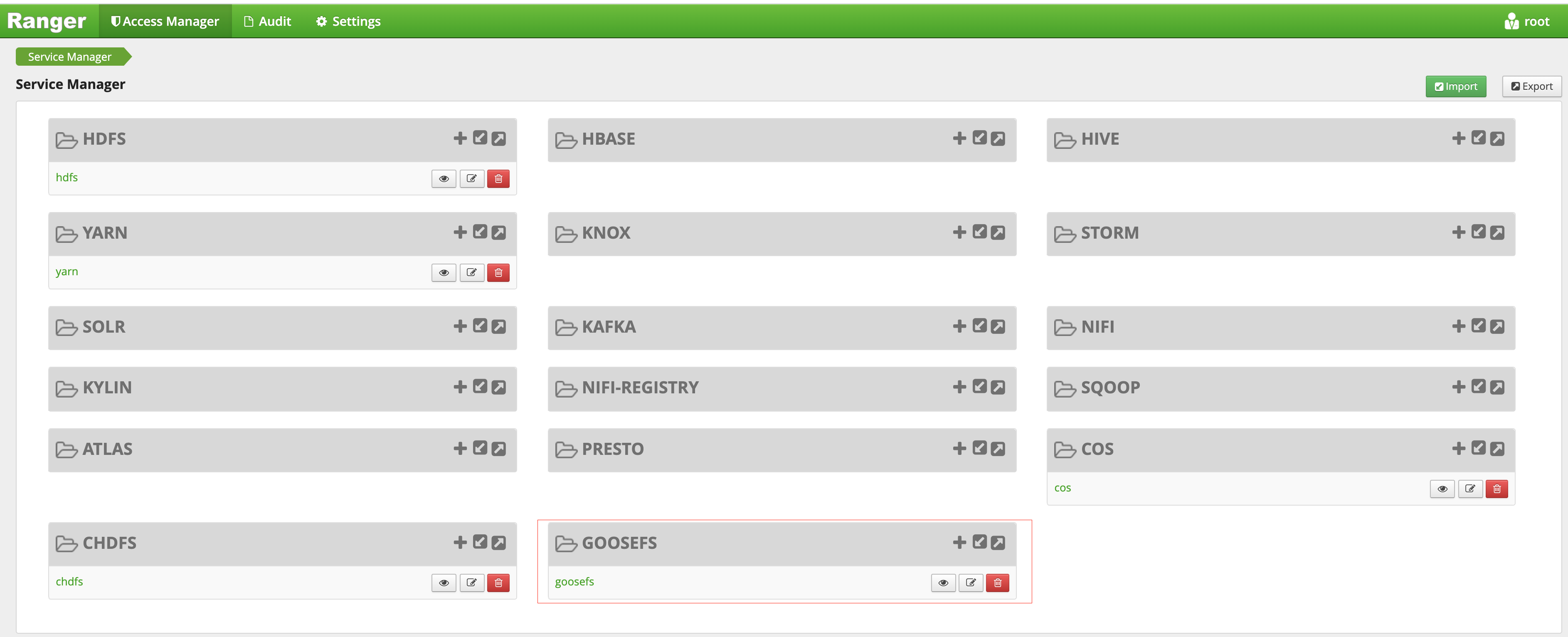

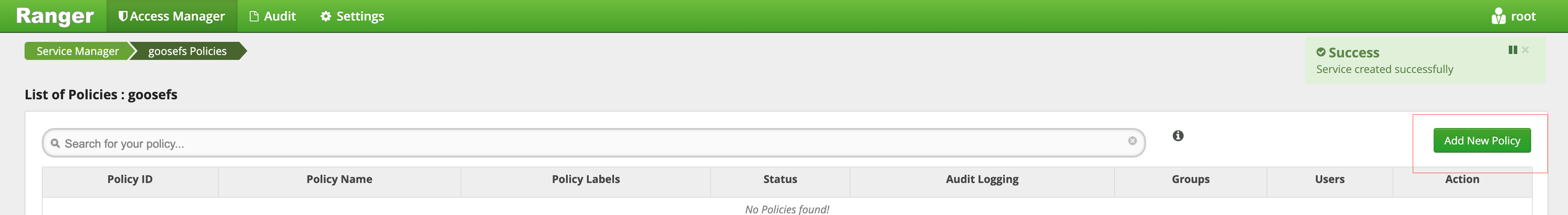

6. After the GooseFS service is created, you can view it in the Ranger console.

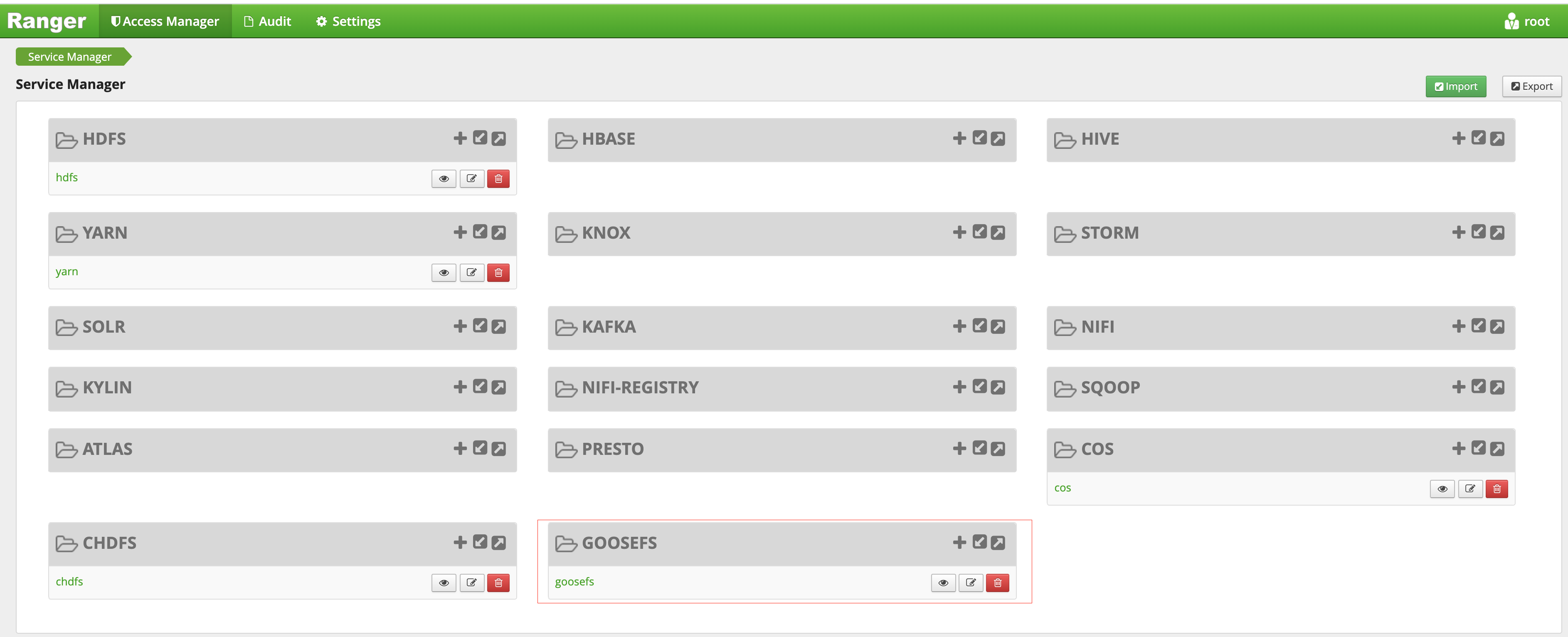

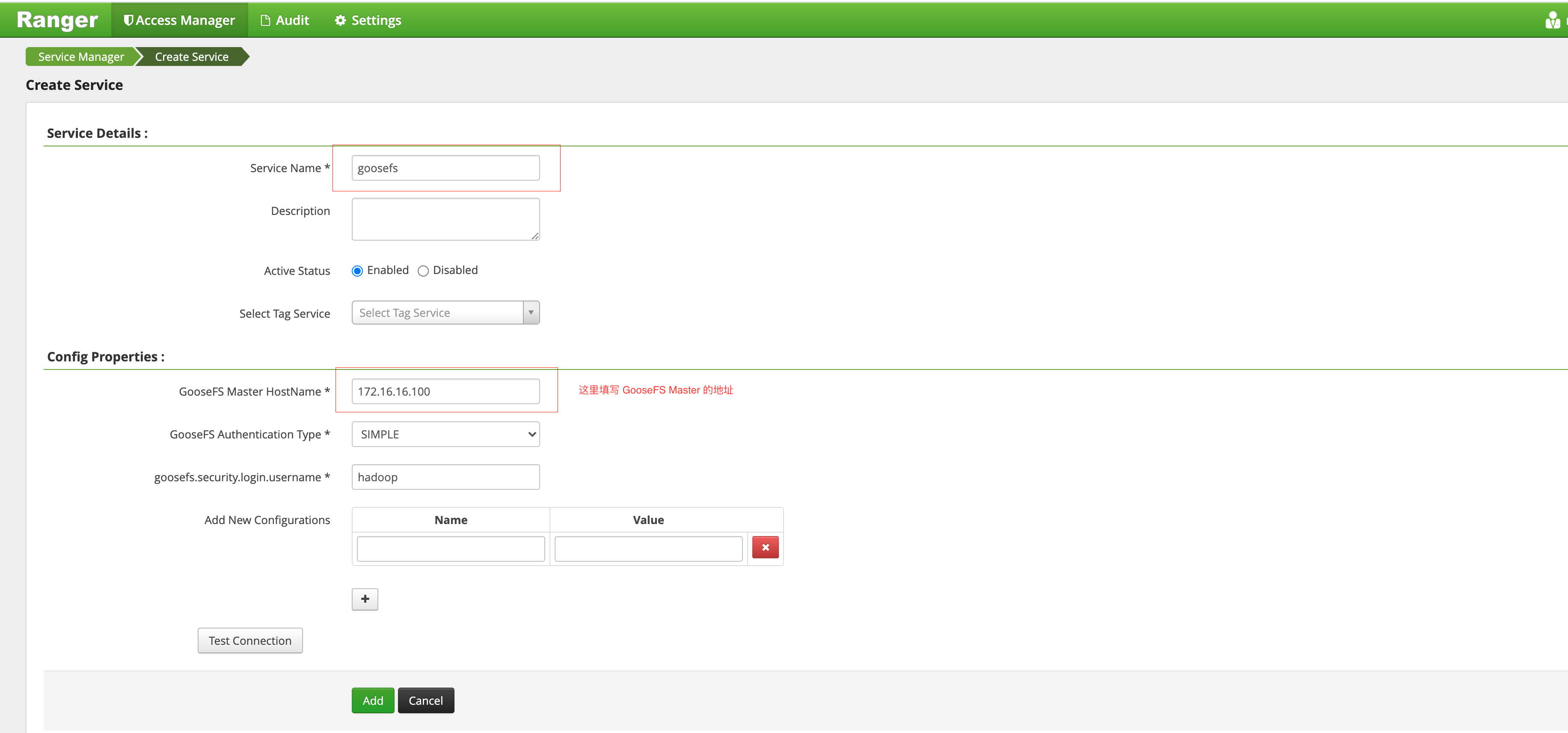

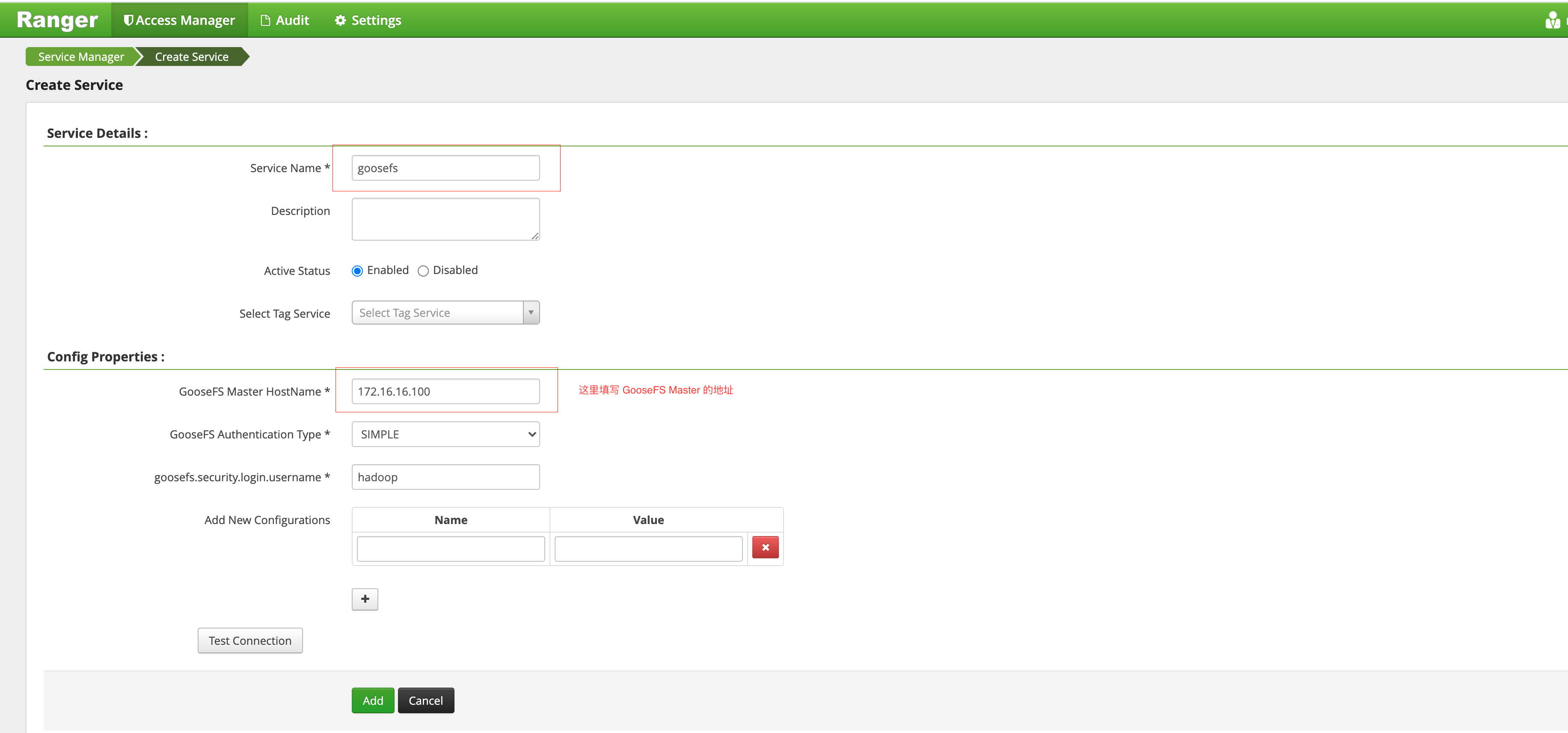

7. Click + to define the GooseFS service instance.

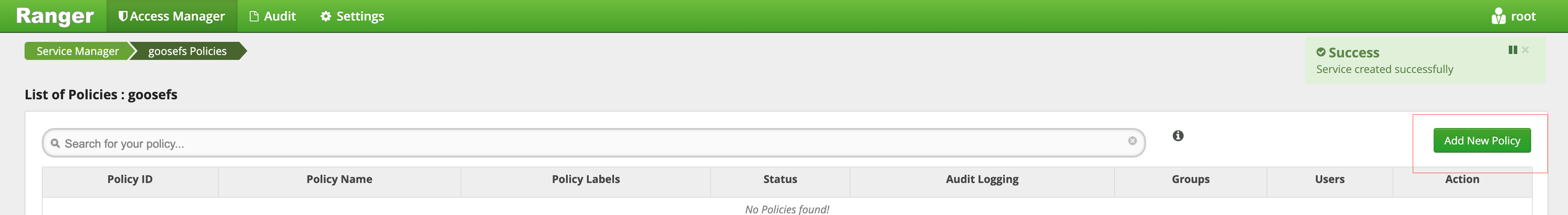

8. Click the created GooseFS instance and add a policy.
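The file-copy part of the procedure above (creating the GooseFS directory and placing the plugin JAR and service definition in it) can be sketched as a small shell helper. This is only an illustration under stated assumptions: `deploy_servicedef` is a hypothetical function name, the target directory is passed in by the caller rather than guessed, and the permission modes 755 and 644 are one possible way to satisfy the read and execute requirements.

```shell
#!/bin/sh
# Sketch of the file-deployment steps on the Ranger Admin host.
# deploy_servicedef is a hypothetical helper, not a Ranger or GooseFS tool.
deploy_servicedef() {
  target_dir=$1   # GooseFS directory inside the Ranger service definition directory
  jar_file=$2     # path to the GooseFS Ranger plugin JAR
  json_file=$3    # path to ranger-servicedef-goosefs.json

  mkdir -p "$target_dir" || return 1
  chmod 755 "$target_dir" || return 1      # assumed mode: read + execute on the directory
  cp "$jar_file" "$json_file" "$target_dir"/ || return 1

  # Assumed mode: make both copied files readable.
  chmod 644 "$target_dir/$(basename "$jar_file")" \
            "$target_dir/$(basename "$json_file")"
}
```

A call would pass the directory you created under the Ranger service definition directory, followed by the two files. Ranger still has to be restarted and the service registered with the curl command shown in the steps.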

Deploying GooseFS Ranger plugin and enabling Ranger authentication

1. Put goosefs-ranger-plugin-${version}.jar in the ${GOOSEFS_HOME}/lib directory. You need at least read permission on the file.

2. Put ranger-goosefs-audit.xml, ranger-goosefs-security.xml, and ranger-policymgr-ssl.xml in the ${GOOSEFS_HOME}/conf directory and configure the required parameters as follows:

ranger-goosefs-security.xml:

    <configuration xmlns:xi="http://www.w3.org/2001/XInclude">
      <property>
        <name>ranger.plugin.goosefs.service.name</name>
        <value>goosefs</value>
      </property>
      <property>
        <name>ranger.plugin.goosefs.policy.source.impl</name>
        <value>org.apache.ranger.admin.client.RangerAdminRESTClient</value>
      </property>
      <property>
        <name>ranger.plugin.goosefs.policy.rest.url</name>
        <value>http://10.0.0.1:6080</value>
      </property>
      <property>
        <name>ranger.plugin.goosefs.policy.pollIntervalMs</name>
        <value>30000</value>
      </property>
      <property>
        <name>ranger.plugin.goosefs.policy.rest.client.connection.timeoutMs</name>
        <value>1200</value>
      </property>
      <property>
        <name>ranger.plugin.goosefs.policy.rest.client.read.timeoutMs</name>
        <value>30000</value>
      </property>
    </configuration>

ranger-goosefs-audit.xml (you can skip this file if auditing is disabled):

```xml
<configuration>
    <property>
        <name>xasecure.audit.is.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>xasecure.audit.db.is.async</name>
        <value>true</value>
    </property>
    <property>
        <name>xasecure.audit.db.async.max.queue.size</name>
        <value>10240</value>
    </property>
    <property>
        <name>xasecure.audit.db.async.max.flush.interval.ms</name>
        <value>30000</value>
    </property>
    <property>
        <name>xasecure.audit.db.batch.size</name>
        <value>100</value>
    </property>
    <property>
        <name>xasecure.audit.jpa.javax.persistence.jdbc.url</name>
        <value>jdbc:mysql://localhost:3306/ranger_audit</value>
    </property>
    <property>
        <name>xasecure.audit.jpa.javax.persistence.jdbc.user</name>
        <value>rangerLogger</value>
    </property>
    <property>
        <name>xasecure.audit.jpa.javax.persistence.jdbc.password</name>
        <value>none</value>
    </property>
    <property>
        <name>xasecure.audit.jpa.javax.persistence.jdbc.driver</name>
        <value>com.mysql.jdbc.Driver</value>
    </property>
    <property>
        <name>xasecure.audit.credential.provider.file</name>
        <value>jceks://file/etc/ranger/hadoopdev/auditcred.jceks</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.is.enabled</name>
        <value>true</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.is.async</name>
        <value>true</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.async.max.queue.size</name>
        <value>1048576</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.async.max.flush.interval.ms</name>
        <value>30000</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.encoding</name>
        <value></value>
    </property>
    <!-- hdfs audit provider config -->
    <property>
        <name>xasecure.audit.hdfs.config.destination.directory</name>
        <value>hdfs://NAMENODE_HOST:8020/ranger/audit/</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.destination.file</name>
        <value>%hostname%-audit.log</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.destination.flush.interval.seconds</name>
        <value>900</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.destination.rollover.interval.seconds</name>
        <value>86400</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.destination.open.retry.interval.seconds</name>
        <value>60</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.buffer.directory</name>
        <value>/var/log/hadoop/%app-type%/audit</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.buffer.file</name>
        <value>%time:yyyyMMdd-HHmm.ss%.log</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.buffer.file.buffer.size.bytes</name>
        <value>8192</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.buffer.flush.interval.seconds</name>
        <value>60</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.buffer.rollover.interval.seconds</name>
        <value>600</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.archive.directory</name>
        <value>/var/log/hadoop/%app-type%/audit/archive</value>
    </property>
    <property>
        <name>xasecure.audit.hdfs.config.local.archive.max.file.count</name>
        <value>10</value>
    </property>
    <!-- log4j audit provider config -->
    <property>
        <name>xasecure.audit.log4j.is.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>xasecure.audit.log4j.is.async</name>
        <value>false</value>
    </property>
    <property>
        <name>xasecure.audit.log4j.async.max.queue.size</name>
        <value>10240</value>
    </property>
    <property>
        <name>xasecure.audit.log4j.async.max.flush.interval.ms</name>
        <value>30000</value>
    </property>
    <!-- kafka audit provider config -->
    <property>
        <name>xasecure.audit.kafka.is.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>xasecure.audit.kafka.async.max.queue.size</name>
        <value>1</value>
    </property>
    <property>
        <name>xasecure.audit.kafka.async.max.flush.interval.ms</name>
        <value>1000</value>
    </property>
    <property>
        <name>xasecure.audit.kafka.broker_list</name>
        <value>localhost:9092</value>
    </property>
    <property>
        <name>xasecure.audit.kafka.topic_name</name>
        <value>ranger_audits</value>
    </property>
    <!-- ranger audit solr config -->
    <property>
        <name>xasecure.audit.solr.is.enabled</name>
        <value>false</value>
    </property>
    <property>
        <name>xasecure.audit.solr.async.max.queue.size</name>
        <value>1</value>
    </property>
    <property>
        <name>xasecure.audit.solr.async.max.flush.interval.ms</name>
        <value>1000</value>
    </property>
    <property>
        <name>xasecure.audit.solr.solr_url</name>
        <value>http://localhost:6083/solr/ranger_audits</value>
    </property>
</configuration>
```

ranger-policymgr-ssl.xml:

```xml
<configuration>
    <property>
        <name>xasecure.policymgr.clientssl.keystore</name>
        <value>hadoopdev-clientcert.jks</value>
    </property>
    <property>
        <name>xasecure.policymgr.clientssl.truststore</name>
        <value>cacerts-xasecure.jks</value>
    </property>
    <property>
        <name>xasecure.policymgr.clientssl.keystore.credential.file</name>
        <value>jceks://file/tmp/keystore-hadoopdev-ssl.jceks</value>
    </property>
    <property>
        <name>xasecure.policymgr.clientssl.truststore.credential.file</name>
        <value>jceks://file/tmp/truststore-hadoopdev-ssl.jceks</value>
    </property>
</configuration>
```

3. Add the following configurations to goosefs-site.properties:

```properties
...
goosefs.security.authorization.permission.type=CUSTOM
goosefs.security.authorization.custom.provider.class=org.apache.ranger.authorization.goosefs.RangerGooseFSAuthorizer
...
```

4. In ${GOOSEFS_HOME}/libexec/goosefs-config.sh, add ranger-goosefs-plugin-${version}.jar to the GooseFS server class path:

```shell
...
GOOSEFS_RANGER_CLASSPATH="${GOOSEFS_HOME}/lib/ranger-goosefs-plugin-${version}.jar"
GOOSEFS_SERVER_CLASSPATH=${GOOSEFS_SERVER_CLASSPATH}:${GOOSEFS_RANGER_CLASSPATH}
...
```

After these steps, the configuration is complete.
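Because a single misspelled tag or missing key can keep the plugin from loading its settings, it may be worth sanity-checking the edited files before restarting GooseFS. The following is a minimal Python sketch, not part of GooseFS or Ranger. It assumes the XML files use the plain `<configuration>`/`<property>` layout shown above and that goosefs-site.properties uses simple `key=value` lines. The function names are hypothetical, and the properties parser is deliberately simplified (it ignores line continuations and `:` separators).

```python
import xml.etree.ElementTree as ET

# Keys and values taken from the goosefs-site.properties step above.
REQUIRED_SITE_PROPERTIES = {
    "goosefs.security.authorization.permission.type": "CUSTOM",
    "goosefs.security.authorization.custom.provider.class":
        "org.apache.ranger.authorization.goosefs.RangerGooseFSAuthorizer",
}


def load_xml_properties(xml_text):
    """Parse a <configuration> document into a dict of name -> value.

    Raises ValueError if the root is not <configuration> or if any child
    is not a <property> element containing a <name>.
    """
    root = ET.fromstring(xml_text.strip())
    if root.tag != "configuration":
        raise ValueError(f"unexpected root element <{root.tag}>")
    props = {}
    for child in root:  # XML comments are dropped by the default parser
        name = child.findtext("name")
        if child.tag != "property" or name is None:
            raise ValueError(f"unexpected or incomplete element <{child.tag}>")
        # A missing or empty <value> is stored as an empty string.
        props[name.strip()] = (child.findtext("value") or "").strip()
    return props


def parse_site_properties(text):
    """Parse simple key=value lines, skipping blanks, comments, and '...'."""
    props = {}
    for line in text.splitlines():
        line = line.strip()
        if not line or line.startswith(("#", "!")) or "=" not in line:
            continue
        key, _, value = line.partition("=")
        props[key.strip()] = value.strip()
    return props


def missing_site_properties(text):
    """Return required keys that are absent or set to a different value."""
    props = parse_site_properties(text)
    return sorted(
        key for key, value in REQUIRED_SITE_PROPERTIES.items()
        if props.get(key) != value
    )


if __name__ == "__main__":
    # Inline samples keep the sketch runnable without touching real files.
    sample_xml = """
    <configuration>
      <property>
        <name>ranger.plugin.goosefs.service.name</name>
        <value>goosefs</value>
      </property>
    </configuration>
    """
    sample_site = "goosefs.security.authorization.permission.type=CUSTOM\n"
    print(load_xml_properties(sample_xml))
    print(missing_site_properties(sample_site))
```

In practice you would read each file's contents from ${GOOSEFS_HOME}/conf and pass the text to these functions. A `ValueError` or a non-empty list of missing keys points at the entry to fix.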

Verification

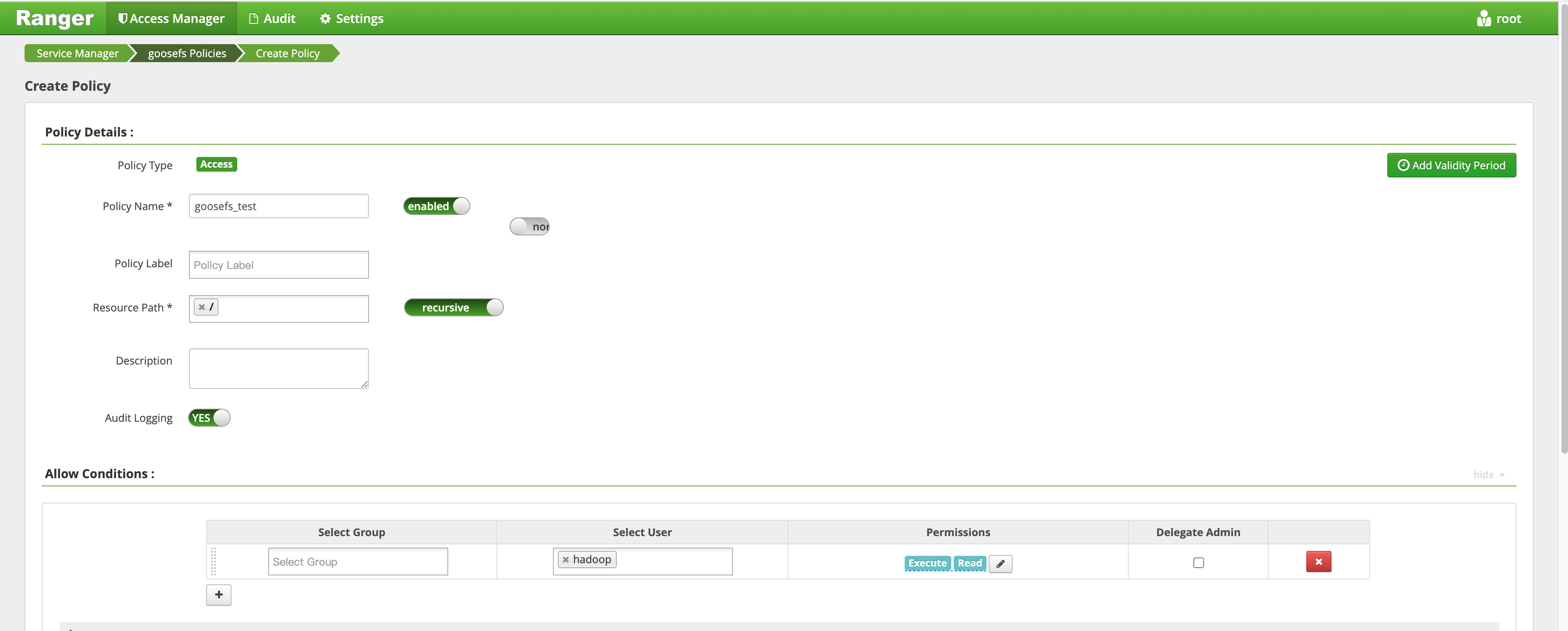

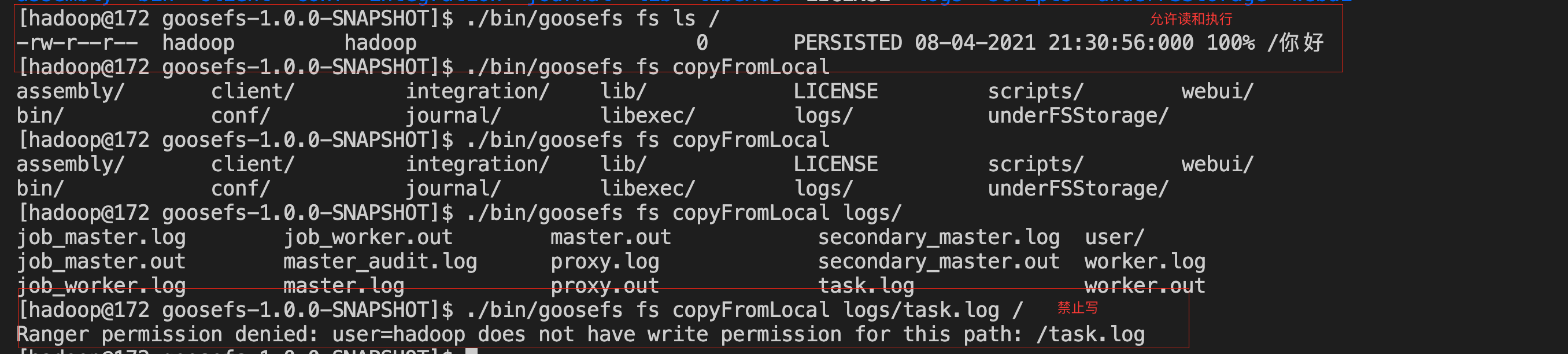

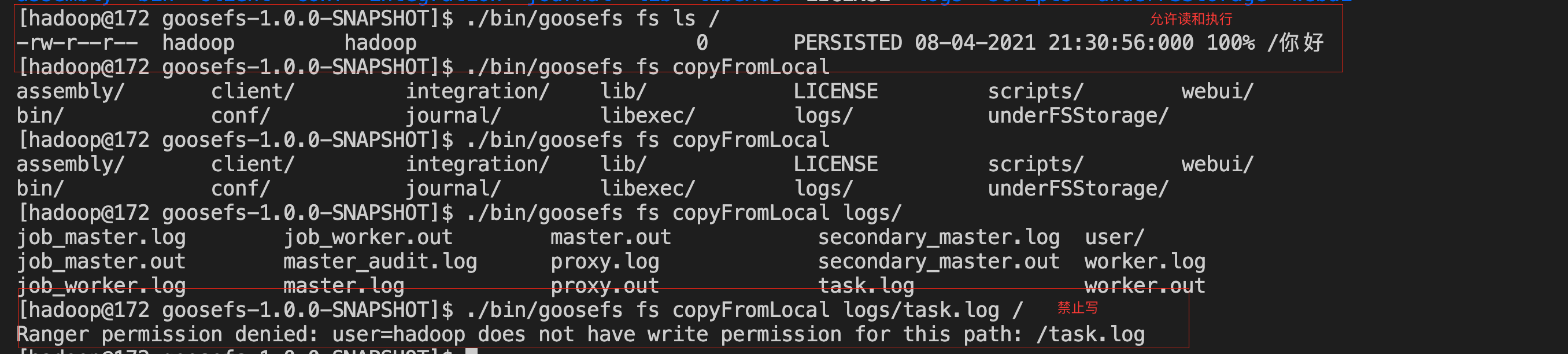

You can add a policy that allows Hadoop users to read and execute but not write to the GooseFS root directory as follows:

1. Add a policy.

2. Verify that the policy takes effect.
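To make the intent of such a policy concrete, here is a minimal Python sketch of what it could look like as a Ranger-style policy document, plus a toy check of which operations it grants. The field names follow Ranger's general policy JSON layout (`service`, `resources`, `policyItems`, `accesses`). The resource key `path`, the access type names `read`, `write`, and `execute`, the user name `hadoop`, and the policy name are all assumptions for illustration and may differ in your GooseFS service definition. The `is_allowed` helper is a simplified model, not Ranger's policy engine, and nothing is sent to a Ranger server.

```python
import json


def build_policy(service, name, path, user, allowed):
    """Build a Ranger-style policy body granting `allowed` access types."""
    return {
        "service": service,
        "name": name,
        "isEnabled": True,
        "resources": {
            # "path" as the resource key is an assumption about the
            # GooseFS service definition.
            "path": {"values": [path], "isRecursive": True, "isExcludes": False}
        },
        "policyItems": [
            {
                "users": [user],
                "accesses": [{"type": t, "isAllowed": True} for t in allowed],
            }
        ],
    }


def is_allowed(policy, user, access):
    """Toy check: does any policy item grant `access` to `user`?"""
    for item in policy["policyItems"]:
        if user not in item["users"]:
            continue
        for entry in item["accesses"]:
            if entry["type"] == access and entry["isAllowed"]:
                return True
    return False


if __name__ == "__main__":
    # Service name "goosefs" matches ranger.plugin.goosefs.service.name;
    # the policy name and user are hypothetical.
    policy = build_policy("goosefs", "root-read-execute", "/", "hadoop",
                          ["read", "execute"])
    print(json.dumps(policy, indent=2))
    for access in ("read", "execute", "write"):
        print(access, is_allowed(policy, "hadoop", access))
```

With a policy of this shape in place, a read or list operation on the root directory as that user should succeed, while an operation that writes to the root directory should be rejected.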
