Logstash is an open-source log processing tool that can collect data from multiple sources, filter it, and then store it for other uses.

Logstash is highly flexible and has powerful parsing capabilities. Its wide range of plugins supports many types of inputs and outputs. As a horizontally scalable data pipeline, it also provides strong log collection and retrieval when used together with Elasticsearch and Kibana.

How Logstash Works

The Logstash data processing pipeline can be divided into three stages: inputs → filters → outputs.

- Inputs: Collect data from multiple sources, such as `file`, `syslog`, `redis`, and `beats`.

- Filters: Modify and filter the collected data. Filters are intermediate processing components in the Logstash data pipeline. They can modify events based on specific conditions. Some commonly used filters are grok, mutate, drop, and clone.

- Outputs: Send the processed data to other destinations. An event can be sent to multiple outputs, and its processing ends once all outputs have handled it. Elasticsearch is the most commonly used output.

Logstash also supports encoding and decoding data through codecs, so you can specify data formats at both the input and output ends.

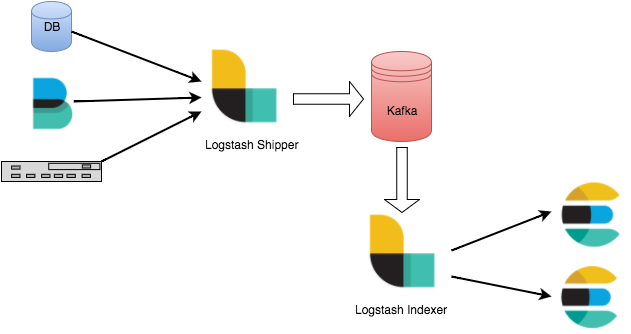
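As a minimal sketch of the three stages and of codecs, the pipeline below reads lines from standard input, adds a field in the filter stage, and prints each event to standard output. It uses only the standard `stdin`, `mutate`, and `stdout` plugins; the `source` field and its value are arbitrary names chosen for illustration.

```conf
input {
  # Input stage: read from standard input; the line codec
  # turns each line of text into one event
  stdin { codec => line }
}

filter {
  # Filter stage: modify each event, here by adding a static field
  # (the field name "source" is only an example)
  mutate { add_field => { "source" => "stdin" } }
}

output {
  # Output stage: print each event; the rubydebug codec
  # renders the event as a readable, structured dump
  stdout { codec => rubydebug }
}
```

Saved under a hypothetical name such as `pipeline.conf`, it can be started with `bin/logstash -f pipeline.conf`; each line you type should then be printed back as a structured event that includes the extra field.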

Strengths of Connecting Logstash to Kafka

- Data is processed asynchronously, so Kafka can buffer traffic spikes instead of passing them directly to downstream systems.

- Components are decoupled, so an exception in Elasticsearch does not affect upstream work.
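In this decoupled layout, a Logstash pipeline consumes from CKafka and indexes into Elasticsearch. The sketch below is one possible configuration, not a prescribed setup: the access address and group ID are placeholders, `http://localhost:9200` is an assumed Elasticsearch endpoint, `logstash-test` is a hypothetical index name, and the `json` codec assumes the messages are JSON documents. If Elasticsearch is temporarily unavailable, messages that Logstash has not yet fetched remain in the CKafka topic and can be consumed once it recovers.

```conf
input {
  kafka {
    bootstrap_servers => "xx.xx.xx.xx:xxxx"   # CKafka instance access address (placeholder)
    group_id => "logstash_group"              # Consumer group ID (placeholder)
    topics => ["logstash_test"]               # Topic to consume
    codec => json                             # Assumes JSON messages; remove for plain text
  }
}

output {
  elasticsearch {
    hosts => ["http://localhost:9200"]        # Assumed Elasticsearch endpoint
    index => "logstash-test"                  # Hypothetical index name (must be lowercase)
  }
}
```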

Note: Logstash consumes resources when processing data. Deploying it on a production server may affect that server's performance.

Directions

Preparations

- Download and install Logstash as instructed in Installing Logstash.

- Download and install JDK 8 as instructed in Java SE Development Kit 8u341.

- Create a CKafka instance as instructed in Creating Instance.

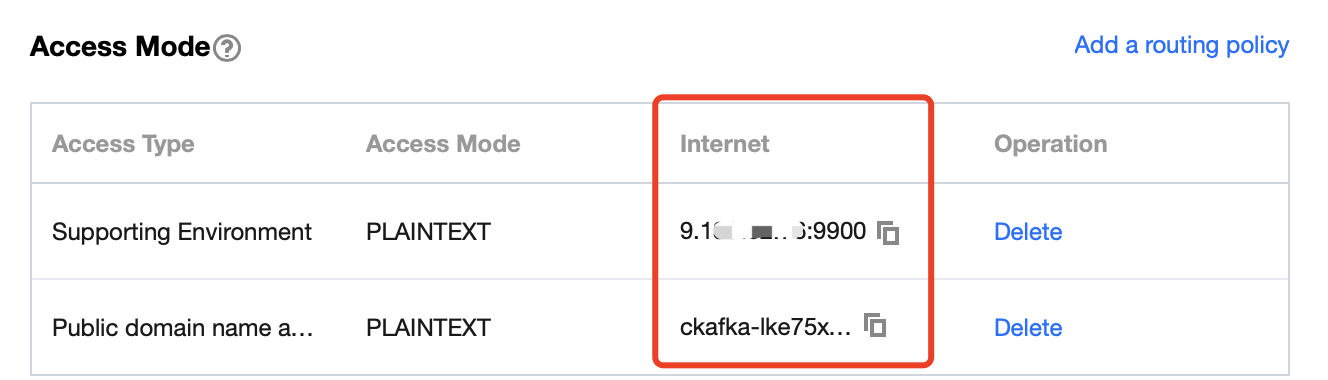

Step 1. Get the CKafka instance access address

- Log in to the CKafka console.

- Select Instance List on the left sidebar and click the ID of the target instance to enter its basic information page.

- On the instance's basic information page, get the instance access address in the Access Mode module.

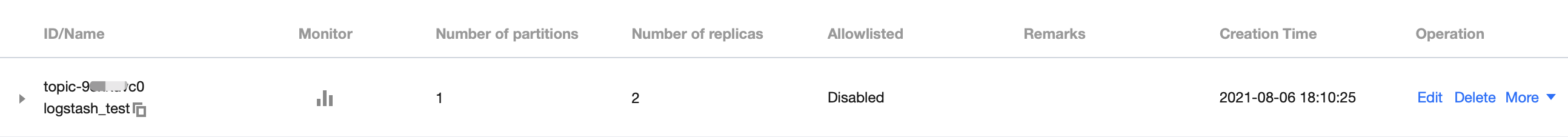

Step 2. Create a topic

- On the instance's basic information page, select the Topic Management tab at the top.

- On the topic management page, click Create to create a topic named `logstash_test`.

Step 3. Connect to CKafka

Note: CKafka can serve as either a Logstash input or a Logstash output. The following directions use CKafka as an input.

Run

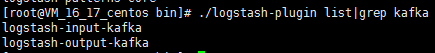

bin/logstash-plugin listto check whetherlogstash-input-kafkais included in the supported plugins.

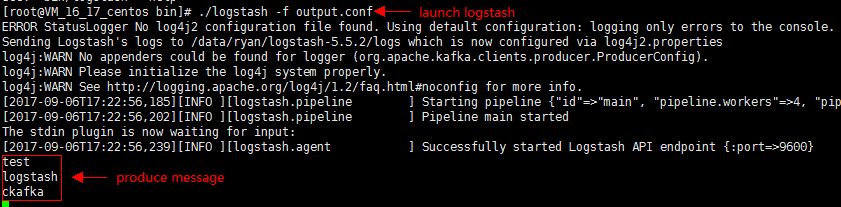

Write the configuration file `input.conf` in the `bin/` directory.

In the following example, Kafka is used as the data source and standard output as the data destination:

```conf
input {
  kafka {
    bootstrap_servers => "xx.xx.xx.xx:xxxx"   # CKafka instance access address
    group_id => "logstash_group"              # CKafka consumer group ID
    topics => ["logstash_test"]               # CKafka topic name
    consumer_threads => 3                     # Number of consumer threads; generally the same as the number of CKafka partitions
    auto_offset_reset => "earliest"           # Start from the earliest offset when the group has no committed offset
  }
}

output {
  stdout { codec => rubydebug }
}
```

Run the following command to start Logstash and consume messages:

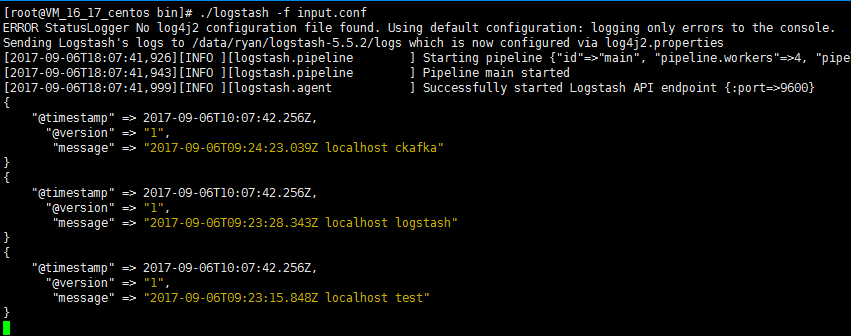

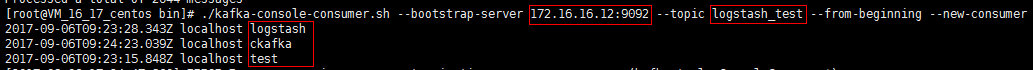

```shell
./logstash -f input.conf
```

The returned result is as follows:

The output shows that the messages in the `logstash_test` topic have been consumed.
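To generate test data for this consumer, a second pipeline can use CKafka as an output. The sketch below, assuming the same placeholder access address and a hypothetical file name `output.conf`, reads lines from standard input and writes each one to the `logstash_test` topic through the `kafka` output plugin. The `plain` codec with `format => "%{message}"` sends only the raw line rather than the codec's default text rendering of the event.

```conf
input {
  # Each line typed on the console becomes one event
  stdin {}
}

output {
  kafka {
    bootstrap_servers => "xx.xx.xx.xx:xxxx"      # CKafka instance access address (placeholder)
    topic_id => "logstash_test"                  # Topic to write to
    codec => plain { format => "%{message}" }    # Send only the raw message text
  }
}
```

Two Logstash instances cannot share a data directory, so if the consumer from `input.conf` is still running, start the producer with a separate, writable data path, for example `./logstash -f output.conf --path.data /tmp/logstash-producer` (the path is arbitrary). Lines typed into the producer should then appear in the consumer's `rubydebug` output.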
