Overview

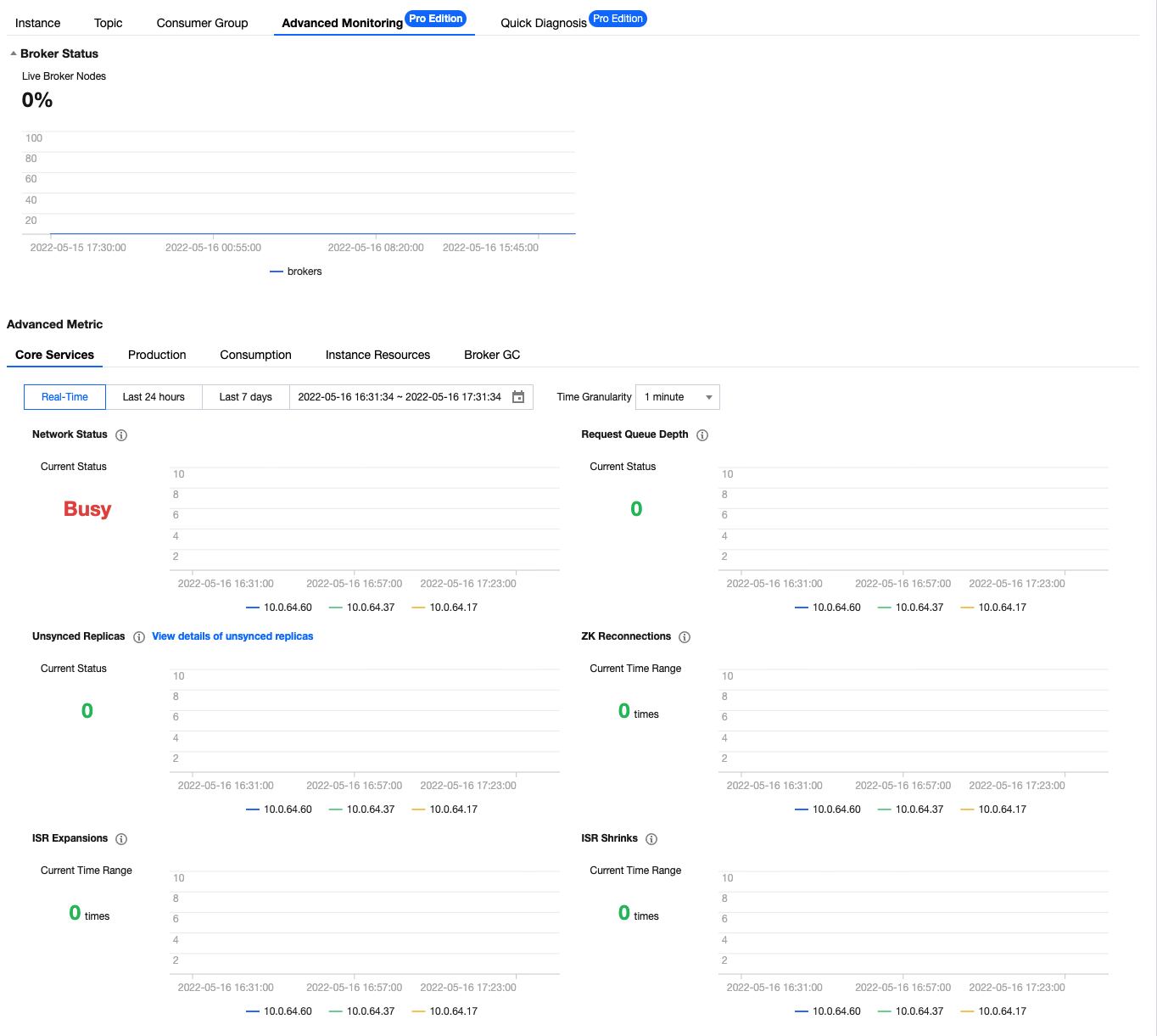

CKafka Pro Edition supports advanced monitoring. In the console, you can view metrics for the core service, production, consumption, instance resources, and broker GC, which makes it easier to troubleshoot CKafka issues.

This document describes how to view advanced monitoring metrics in the console and explains their meanings.

Directions

1. Log in to the CKafka console.

2. In the instance list, click the ID/Name of the target instance to enter the instance details page.

3. At the top of the instance details page, click Monitoring > Advanced Monitoring, select the metric to view, and set the time range for the monitoring data.

Monitoring information display

Note:

Monitoring information is available for the core service, production, consumption, instance resources, and broker GC.

Monitoring metric description

Note:

The following tables describe the monitoring metrics for the core service, production, consumption, instance resources, and broker GC, in that order.

Core service

| Monitoring Metric | Description | Normal Range |
| --- | --- | --- |
| Network Status | Measures the I/O resources currently available for processing concurrent requests. The closer the value is to 1, the more idle the instance is. | This value generally fluctuates between 0.5 and 1. If it falls below 0.3, the load is considered high. |
| Request Queue Depth | The number of production requests that have not yet been processed. A large value may be caused by a high number of concurrent requests, high CPU load, or a disk I/O bottleneck. | If this value stays at 2000, the cluster load is considered high. Values below 2000 can be ignored. |
| Unsynced Replicas | The number of unsynced replicas in the cluster. Unsynced replicas may indicate a health problem with the cluster. | If this value stays above 5, the cluster needs to be repaired. The threshold is 5 rather than 0 because some built-in Tencent Cloud topic partitions may be offline, which is unrelated to your business. If a broker fluctuates occasionally, this value may spike and then stabilize, which is normal. |
| ZK Reconnections | The number of times the persistent connection between the broker and ZooKeeper has been re-established. Network fluctuations and high cluster load may cause disconnections and reconnections, which can lead to a leader switch. For more information, see FAQs > Client > What is leader switch? | There is no normal range for this metric. The count is cumulative, so a large number does not necessarily indicate a problem with the cluster. This metric is for reference only. |
| ISR Expansions | The number of Kafka ISR expansions. It increases by 1 each time an unsynced replica catches up with the leader and rejoins the ISR. | There is no normal range for this metric. Expansions occur when the cluster fluctuates. No action is required unless this value stays above 0. |
| ISR Shrinks | The number of Kafka ISR shrinks. Shrinks are counted when a broker goes down and when the ZooKeeper connection is re-established. | There is no normal range for this metric. Shrinks occur when the cluster fluctuates. No action is required unless this value stays above 0. |
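If you process these metrics in your own scripts, the core service thresholds above can be encoded as simple checks. The following Python sketch is illustrative only: the class, function, and field names are hypothetical, and it assumes you already have a window of samples (oldest first). "Stays at" or "stays above" is interpreted as "true for every sample in the window". Because ZK Reconnections is cumulative, the sketch also converts the counter into per-period increments, assuming that a drop in the counter means it was reset.

```python
from dataclasses import dataclass

# Thresholds from the core service table.
NETWORK_STATUS_HIGH_LOAD = 0.3   # falling below this means high load
REQUEST_QUEUE_DEPTH_HIGH = 2000  # staying at this value means high load
UNSYNCED_REPLICAS_LIMIT = 5      # staying above this means the cluster needs repair


@dataclass
class CoreServiceSample:
    """One hypothetical sample of core service metrics."""
    network_status: float     # 0 to 1; closer to 1 means more idle
    request_queue_depth: int
    unsynced_replicas: int


def core_service_warnings(window: list[CoreServiceSample]) -> list[str]:
    """Check a window of samples (oldest first) against the documented thresholds."""
    if not window:
        return []
    warnings = []
    # Network status: the latest sample falling below 0.3 indicates high load.
    if window[-1].network_status < NETWORK_STATUS_HIGH_LOAD:
        warnings.append("Network Status below 0.3: load is high")
    # "Stays at/above" is read as: true for every sample in the window.
    if all(s.request_queue_depth >= REQUEST_QUEUE_DEPTH_HIGH for s in window):
        warnings.append("Request Queue Depth staying at 2000: load is high")
    if all(s.unsynced_replicas > UNSYNCED_REPLICAS_LIMIT for s in window):
        warnings.append("Unsynced Replicas staying above 5: cluster needs repair")
    return warnings


def per_period_increments(cumulative: list[int]) -> list[int]:
    """Turn a cumulative counter such as ZK Reconnections into per-period increments."""
    increments = []
    for prev, cur in zip(cumulative, cumulative[1:]):
        # Assumption: a decrease means the counter was reset (e.g. broker restart),
        # so the new value itself is the increment for that period.
        increments.append(cur - prev if cur >= prev else cur)
    return increments
```

Looking at increments rather than the raw cumulative value makes a burst of reconnections in one period stand out, which the raw total cannot show.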

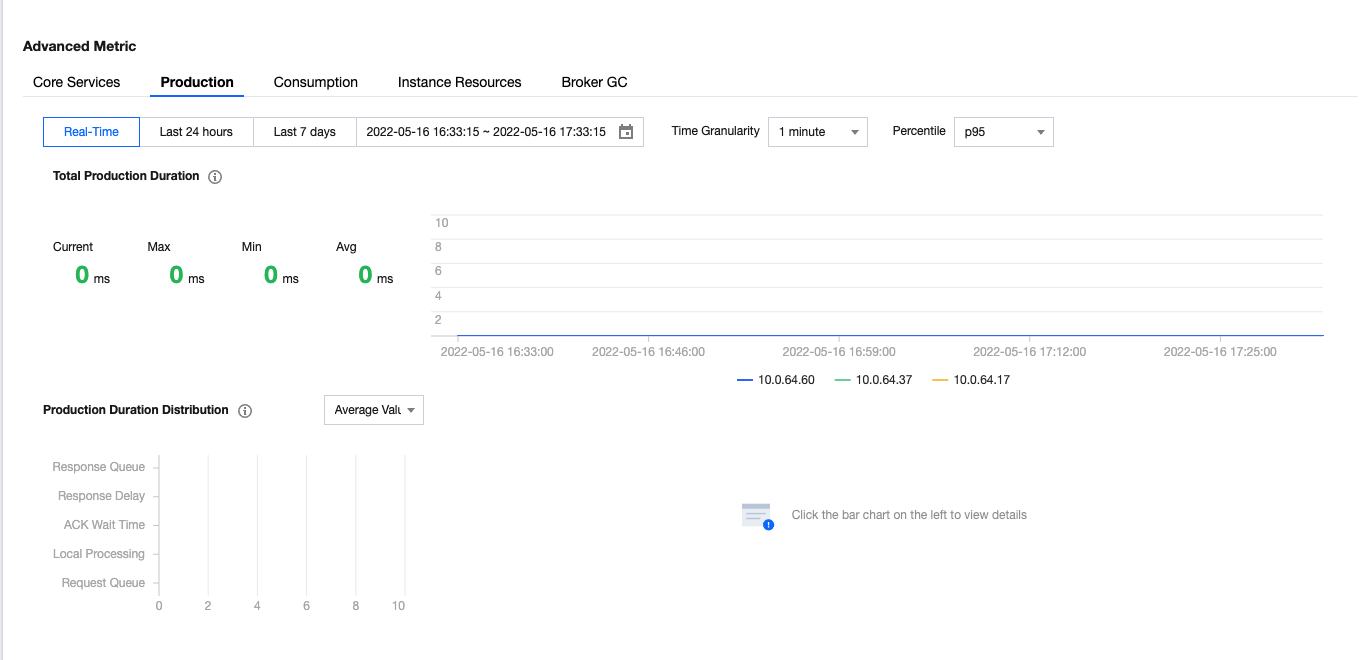

Production

| Monitoring Metric | Description | Normal Range |
| --- | --- | --- |
| Total Production Duration | The total duration of a production request, derived from metrics such as request queue duration, local processing duration, and response delay. At any given point in time, the total does not equal the sum of the five metrics below, because each metric is averaged separately. | This value generally ranges from 0 to 100 ms. Values up to 1000 ms are normal when the data volume is high. No action is required unless this value stays above 1000 ms. |
| Request Queue Duration | The time a production request waits in the request queue before it is processed. | This value generally ranges from 0 to 50 ms. Values up to 200 ms are normal when the data volume is high. No action is required unless this value stays above 200 ms. |
| Local Processing Duration | The time the leader broker takes to process a production request, that is, from when the request packet is taken from the request queue until the data is written to the local page cache. | This value generally ranges from 0 to 50 ms. Values up to 200 ms are normal when the data volume is high. No action is required unless this value stays above 200 ms. |
| ACK Wait Time | The time a production request waits for data to be synced to the replicas. It is greater than 0 only when the client's acks setting is -1; it is 0 whenever acks is 1 or 0. | This value generally ranges from 0 to 200 ms. Values up to 500 ms are normal when the data volume is high. No action is required unless this value stays above 500 ms. When acks is -1, this value is higher for a multi-AZ instance than for a single-AZ instance. For more information, see Multi-AZ Deployment. |
| Response Delay | The time by which the system delays its response to a production request. It is always 0 as long as the instance traffic does not exceed the purchased traffic, and greater than 0 when the traffic is throttled. | This value is 0 as long as the instance does not exceed its limit. If the limit is exceeded, a delay of 0–5 minutes is applied in proportion to the excess, so the maximum value is 5 minutes. |
| Response Queue Duration | The time a production request waits in the response queue before the response packet is sent to the client. | This value generally ranges from 0 to 50 ms. Values up to 200 ms are normal when the data volume is high. No action is required unless this value stays above 200 ms. |
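The ACK Wait Time row depends on the producer's `acks` setting. The following Python sketch is a deliberately simplified model, not CKafka's implementation: with `acks` set to 0 or 1 the leader responds without waiting for followers, so the wait is 0; with `acks` set to -1 (all) the leader waits until every in-sync follower has replicated the data, so the slowest follower sets the wait. The function name and the latency figures are hypothetical.

```python
def ack_wait_ms(acks: int, follower_replication_ms: list[float]) -> float:
    """Simplified model of ACK Wait Time for one production request.

    acks follows the Kafka producer setting: 0, 1, or -1 (equivalent to "all").
    follower_replication_ms holds hypothetical replication latencies for the
    in-sync followers (ISR members other than the leader).
    """
    if acks in (0, 1):
        # The leader does not wait for followers before responding.
        return 0.0
    if acks == -1:
        # The leader waits until every in-sync follower has the data,
        # so the slowest follower determines the wait.
        return max(follower_replication_ms, default=0.0)
    raise ValueError(f"unsupported acks value: {acks}")
```

For example, with two followers at 2 ms and 3 ms the modeled wait is 3 ms; if one follower sits in a more distant AZ and replicates in 12 ms, the wait rises to 12 ms. This is why multi-AZ instances report higher ACK Wait Time when acks is -1.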

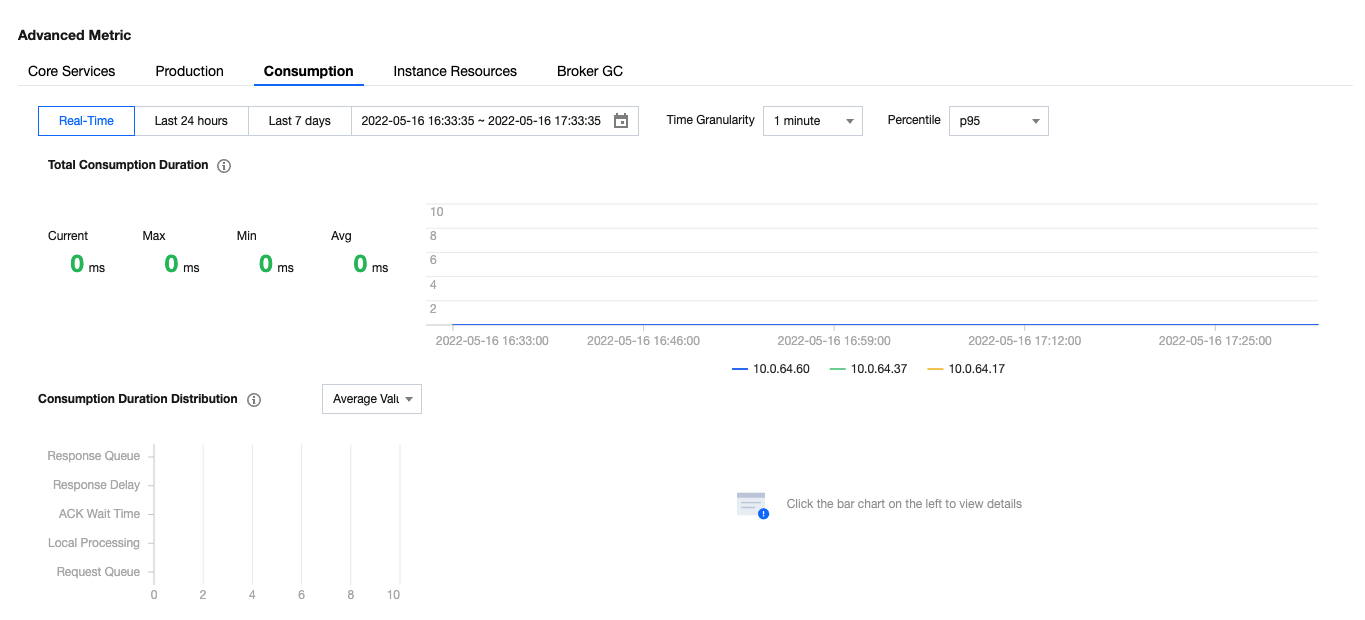

Consumption

| Monitoring Metric | Description | Normal Range |
| --- | --- | --- |
| Total Consumption Duration | The total duration of a consumption request, derived from metrics such as request queue duration and local processing duration. At any given point in time, the total does not equal the sum of the five metrics below, because each metric is averaged separately. | This value generally ranges from 500 to 1000 ms, because the client's default fetch.max.wait.ms is 500 ms. Values up to 5000 ms are normal when the data volume is high. |
| Request Queue Duration | The time a consumption request waits in the request queue before it is processed. | This value generally ranges from 0 to 50 ms. Values up to 200 ms are normal when the data volume is high. No action is required unless this value stays above 200 ms. |
| Local Processing Duration | The time it takes to pull data for a consumption request from the leader broker, that is, to read the data from the local disk. | This value generally ranges from 0 to 500 ms. Values up to 1000 ms are normal when the data volume is high, because the consumer may sometimes read cold data, which takes a long time. No action is required unless the value stays above 1000 ms. |
| Consumption Wait Duration | The maximum time the client allows the server to wait before responding when there is no data to read. It is set by the client's fetch.max.wait.ms, which defaults to 500 ms. | This value is generally around 500 ms (the client's default fetch.max.wait.ms), depending on the client's parameter settings. |
| Response Delay | The time by which the system delays its response to a consumption request. It is always 0 as long as the instance traffic does not exceed the purchased traffic, and greater than 0 when the traffic is throttled. | This value is 0 as long as the instance does not exceed its limit. If the limit is exceeded, a delay of 0–5 minutes is applied in proportion to the excess, so the maximum value is 5 minutes. |
| Response Queue Duration | The time a consumption request waits in the response queue before the response packet is sent to the client. | This value generally ranges from 0 to 50 ms. Values up to 200 ms are normal when the data volume is high. No action is required unless this value stays above 200 ms. |
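The consumption metrics cluster around 500 ms because Kafka fetch requests long-poll: the broker holds a fetch request until at least `fetch.min.bytes` of data is available or `fetch.max.wait.ms` expires, whichever happens first. `fetch.min.bytes` (default 1 byte in the Java consumer) is a standard Kafka consumer setting that this page does not mention. The following Python sketch models only this rule; the function name and arrival data are hypothetical, and a real broker is also affected by factors such as throttling and disk reads.

```python
def fetch_wait_ms(arrivals: list[tuple[float, int]],
                  fetch_min_bytes: int = 1,
                  fetch_max_wait_ms: float = 500.0) -> float:
    """Simplified model of how long the broker holds a consumer fetch request.

    arrivals: hypothetical (time_ms, size_bytes) pairs for data that becomes
    available after the fetch request arrives at time 0.
    The broker answers as soon as fetch_min_bytes have accumulated, or when
    fetch_max_wait_ms expires, whichever comes first.
    """
    accumulated = 0
    for time_ms, size in sorted(arrivals):
        if time_ms >= fetch_max_wait_ms:
            break  # the wait timer expires before this data arrives
        accumulated += size
        if accumulated >= fetch_min_bytes:
            return time_ms
    return fetch_max_wait_ms
```

On an idle topic, `fetch_wait_ms([])` returns 500.0, which matches the typical Consumption Wait Duration; data arriving at 120 ms ends the wait at 120 ms.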

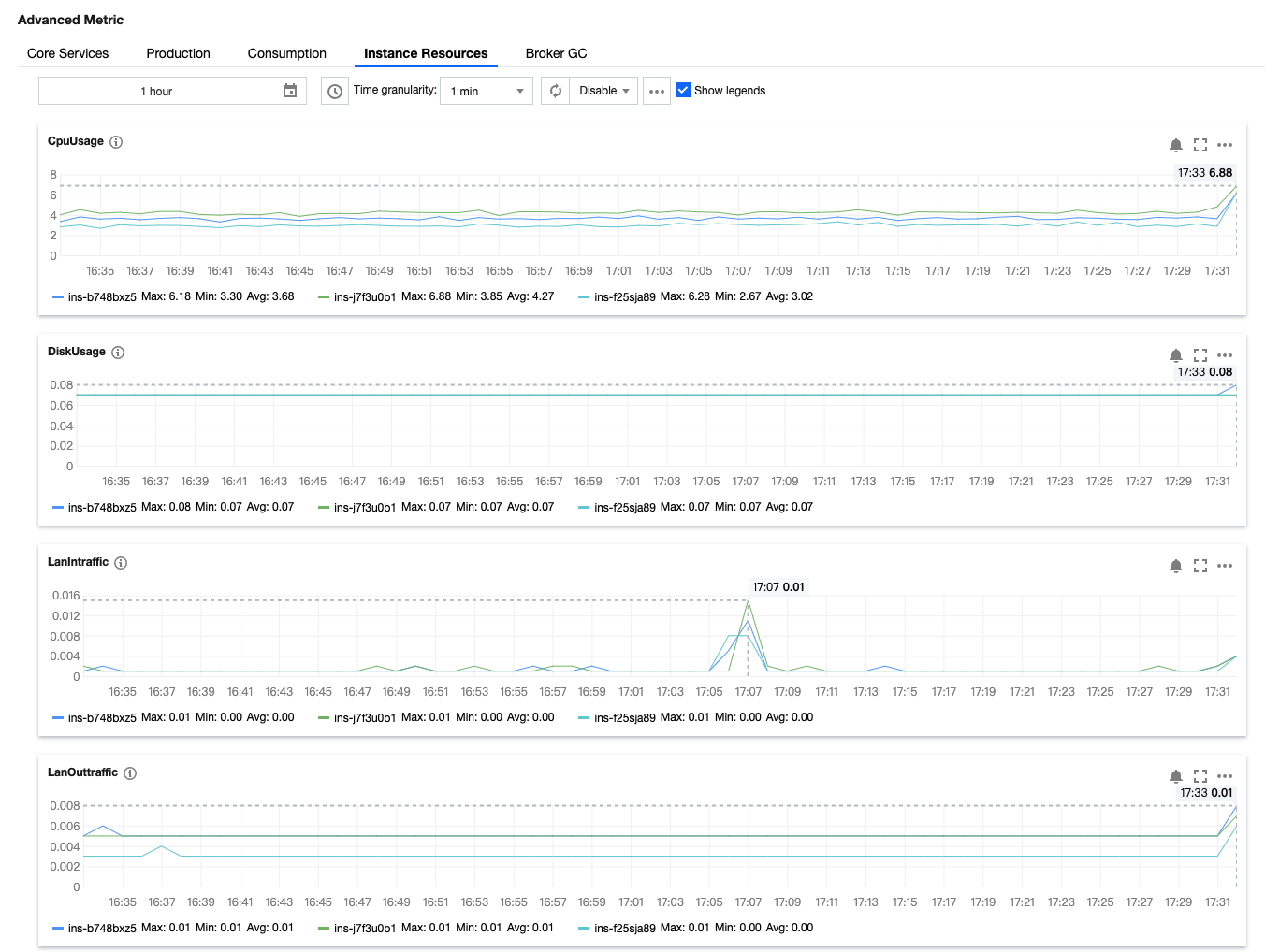

Instance resources

| Monitoring Metric | Description | Normal Range |
| --- | --- | --- |
| CPU Utilization (%) | The percentage of total CPU time used by processes over a period of time. | This value generally falls between 1 and 100. If it is above 90 for more than 5 consecutive statistical periods, the system load is very high and must be addressed. |
| Disk Utilization (%) | The usage percentage of the disks mounted to the CVM instance. | This value generally falls between 0 and 100. If it exceeds 80, capacity expansion is required. |
| Private Network Inbound Bandwidth (MB) | The bandwidth a CVM instance uses for inbound communication within the cluster. The maximum private network bandwidth and packet receiving capability vary by specification. | This value is generally greater than 0, because CVM monitoring within the cluster generates traffic. If there is no inbound bandwidth, the CVM service is abnormal or the network is unreachable. |
| Private Network Outbound Bandwidth (MB) | The bandwidth a CVM instance uses for outbound communication within the cluster. The maximum private network bandwidth and packet sending capability vary by specification. | This value is generally greater than 0, because CVM monitoring within the cluster generates traffic. If there is no outbound bandwidth, the CVM service is abnormal or the network is unreachable. |
| Memory Utilization (%) | The percentage of total memory that is in use. | This value generally falls between 1 and 100. If it is above 90, processes are using too much memory and some of them need to be handled. |
| Public Network Inbound Bandwidth (MB) | The bandwidth a CVM instance uses for inbound public network communication. The maximum public network bandwidth and packet receiving capability vary by specification. | This value is greater than 0 if there is public network inbound traffic; otherwise, it is 0. |
| Public Network Outbound Bandwidth (MB) | The bandwidth a CVM instance uses for outbound public network communication. The maximum public network bandwidth and packet sending capability vary by specification. | This value is greater than 0 if there is public network outbound traffic; otherwise, it is 0. |
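The instance resource thresholds can also be checked in your own scripts. The following Python sketch is illustrative only: the function names are hypothetical, the CPU list holds one value per statistical period (oldest first), and "more than 5 consecutive statistical periods" is read literally as 6 or more consecutive values above 90.

```python
def exceeds_for_consecutive_periods(values: list[float], threshold: float,
                                    periods: int) -> bool:
    """Return True if values stay above threshold for more than `periods` samples in a row."""
    run = 0
    for v in values:
        # Extend the current run, or reset it when a value is at or below the threshold.
        run = run + 1 if v > threshold else 0
        if run > periods:
            return True
    return False


def instance_resource_alerts(cpu: list[float], disk_percent: float,
                             memory_percent: float) -> list[str]:
    """Compare instance resource metrics against the documented thresholds."""
    alerts = []
    # CPU: above 90% in more than 5 consecutive statistical periods.
    if exceeds_for_consecutive_periods(cpu, 90.0, 5):
        alerts.append("cpu")
    # Disk: above 80% means capacity expansion is required.
    if disk_percent > 80.0:
        alerts.append("disk")
    # Memory: above 90% means processes are using too much memory.
    if memory_percent > 90.0:
        alerts.append("memory")
    return alerts
```

Requiring a consecutive run, rather than counting any six high samples, avoids alerting on short CPU spikes separated by normal periods.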

Broker GC

| Monitoring Metric | Description | Normal Range |
| --- | --- | --- |
| Young Generation Collections | The number of young GCs on the broker. | This value generally ranges from 0 to 300. If it stays above 300, the GC parameters need to be adjusted. |
| Old Generation Collections | The number of full GCs on the broker. | This value is generally 0. Action is required if it is greater than 0. |

Causes of monitoring metric exceptions

The following table describes possible causes of exceptions in certain monitoring metrics.

| Metric | Exception Cause |
| --- | --- |
| CPU Utilization (%) | If the value is above 90% for more than 5 consecutive statistical periods, first check whether message compression or message format conversion is taking place. If the client machines have sufficient CPU resources, we recommend enabling Snappy compression on the client. Also check the request queue depth: a large value may indicate that the request volume is too high, which can also cause high CPU load. |
| Unsynced Replicas (Count) | A value above 0 means there are unsynced replicas in the cluster. This is usually caused by broker node exceptions or network issues. Check the broker logs to troubleshoot. |
| Full GC Count (Count) | An occasional full GC may be caused by disk I/O or CVM issues. Check whether brokers with the same IP address have the same issue. If so, submit a ticket for assistance. |
| Request Queue Depth (Count) | If client production and consumption time out while the CVM load remains normal, the request queue of the CVM instance has reached its upper limit, which is 500 by default. You can submit a ticket to have it adjusted based on your purchased resource configuration. |
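The cause table above can also serve as a simple triage checklist. The following Python sketch maps observed symptoms to the checks suggested in the table; the function and parameter names are hypothetical, and it only restates the table rather than diagnosing anything automatically.

```python
def suggested_checks(cpu_high_sustained: bool,
                     request_queue_depth_high: bool,
                     unsynced_replicas: int,
                     full_gc_count: int,
                     client_timeouts: bool,
                     cvm_load_normal: bool) -> list[str]:
    """Return the troubleshooting steps from the cause table that match the symptoms."""
    checks: list[str] = []
    if cpu_high_sustained:
        # CPU above 90% for more than 5 consecutive statistical periods.
        checks.append("Check for message compression and message format conversion; "
                      "consider Snappy compression if client machines have spare CPU.")
        if request_queue_depth_high:
            checks.append("High request volume may also be driving the CPU load.")
    if unsynced_replicas > 0:
        checks.append("Check broker logs for broker node exceptions or network issues.")
    if full_gc_count > 0:
        checks.append("Check whether brokers with the same IP show the same issue; "
                      "if so, submit a ticket.")
    if client_timeouts and cvm_load_normal:
        checks.append("The request queue has likely reached its limit (500 by default); "
                      "submit a ticket to adjust it.")
    return checks
```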
