This document describes how to use Hue.

Hive SQL Query

Hue's Beeswax app provides user-friendly and convenient Hive query capabilities, enabling you to select different Hive databases, write HQL statements, submit query tasks, and view results with ease.

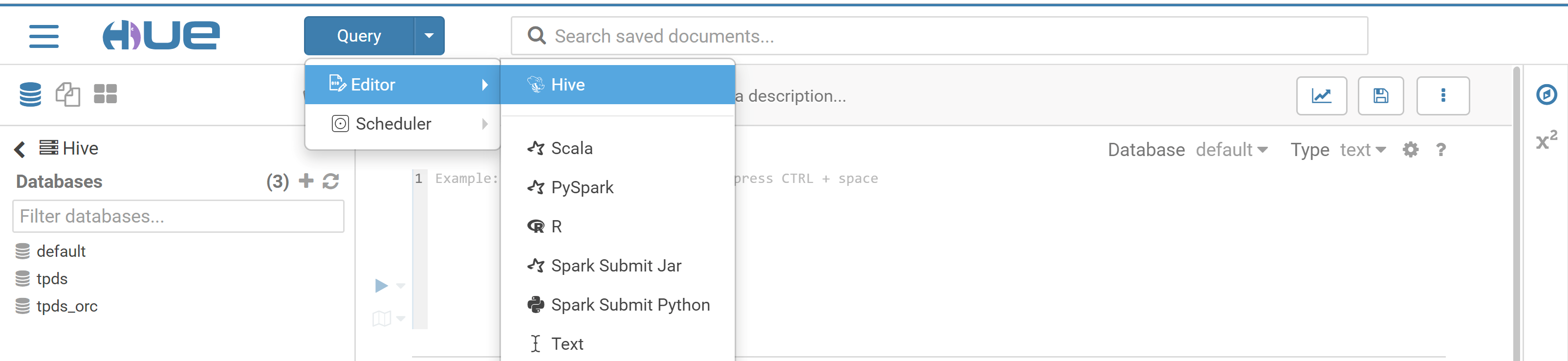

- At the top of the Hue console, select Query > Editor > Hive.

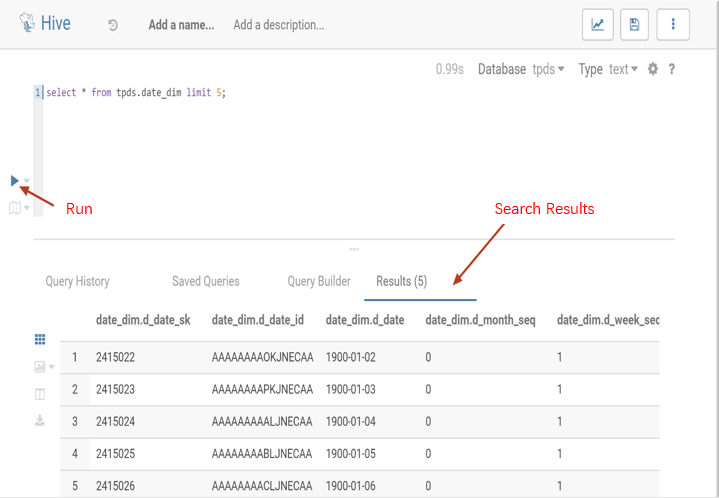

- Enter the statement to be executed in the statement input box and click Run to run it.

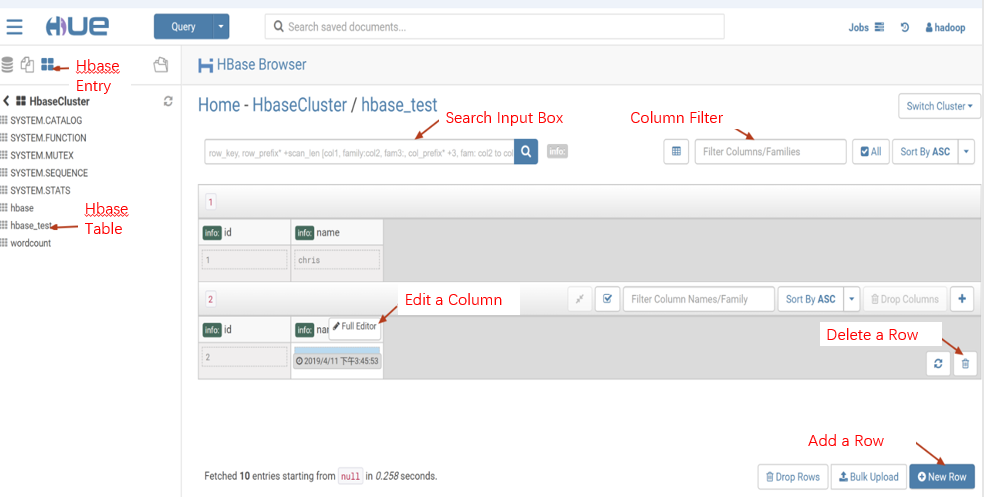

HBase Data Query, Modification, and Display

You can use HBase Browser to query, modify, and display data from tables in an HBase cluster.

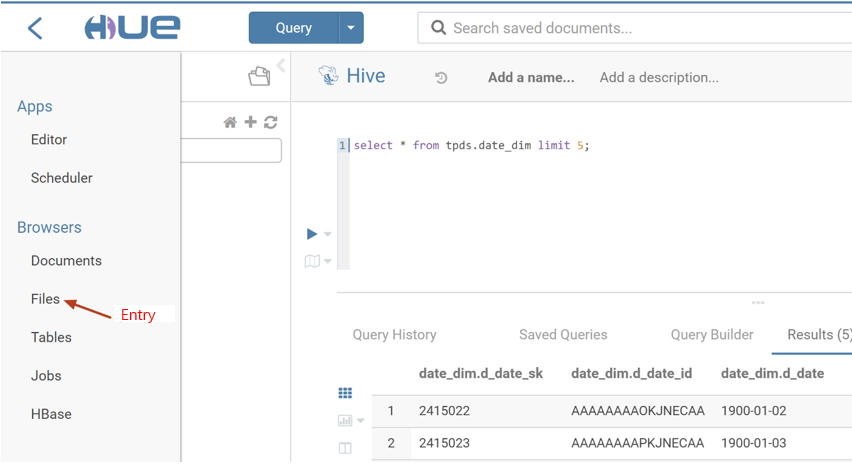

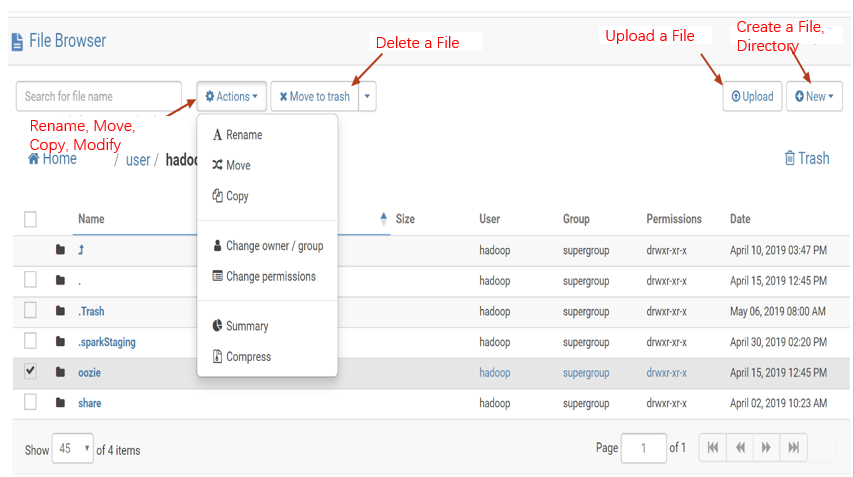

HDFS Access and File Browsing

Hue's web UI makes it easy to view files and folders in HDFS and perform operations such as creation, download, upload, copy, modification, and deletion.

- On the left sidebar in the Hue console, select Browsers > Files to browse HDFS files.

- Perform various operations.
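For readers who prefer a terminal on a cluster node, the file operations above roughly correspond to standard `hdfs dfs` subcommands. The following is a minimal sketch, not part of Hue itself: the operation names and the `hdfs_command` helper are hypothetical and only build the argument list, assuming the `hdfs` client is on the PATH of the node where you would run it.

```python
from typing import Dict, List

# Hypothetical operation names mapped to standard `hdfs dfs` subcommands.
HDFS_SUBCOMMANDS: Dict[str, List[str]] = {
    "list": ["-ls"],
    "create_dir": ["-mkdir", "-p"],
    "upload": ["-put"],
    "download": ["-get"],
    "copy": ["-cp"],
    "delete": ["-rm"],
}


def hdfs_command(operation: str, *paths: str) -> List[str]:
    """Build (but do not run) the `hdfs dfs` argument list for an operation."""
    if operation not in HDFS_SUBCOMMANDS:
        raise ValueError(f"unsupported operation: {operation}")
    return ["hdfs", "dfs", *HDFS_SUBCOMMANDS[operation], *paths]
```

For example, `hdfs_command("list", "/user/hadoop")` returns the list `["hdfs", "dfs", "-ls", "/user/hadoop"]`, which could then be passed to `subprocess.run` on a node with the HDFS client installed.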

Oozie Job Development

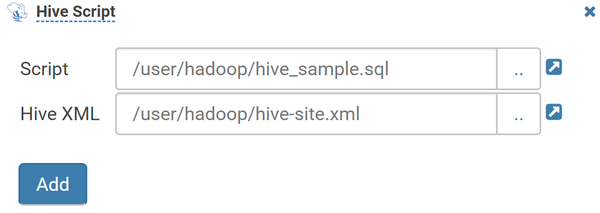

- Prepare workflow data: Hue's job scheduling is based on workflows. First, create a workflow containing a Hive script with the following content:

    create database if not exists hive_sample;
    show databases;
    use hive_sample;
    show tables;
    create table if not exists hive_sample (a int, b string);
    show tables;
    insert into hive_sample select 1, "a";
    select * from hive_sample;

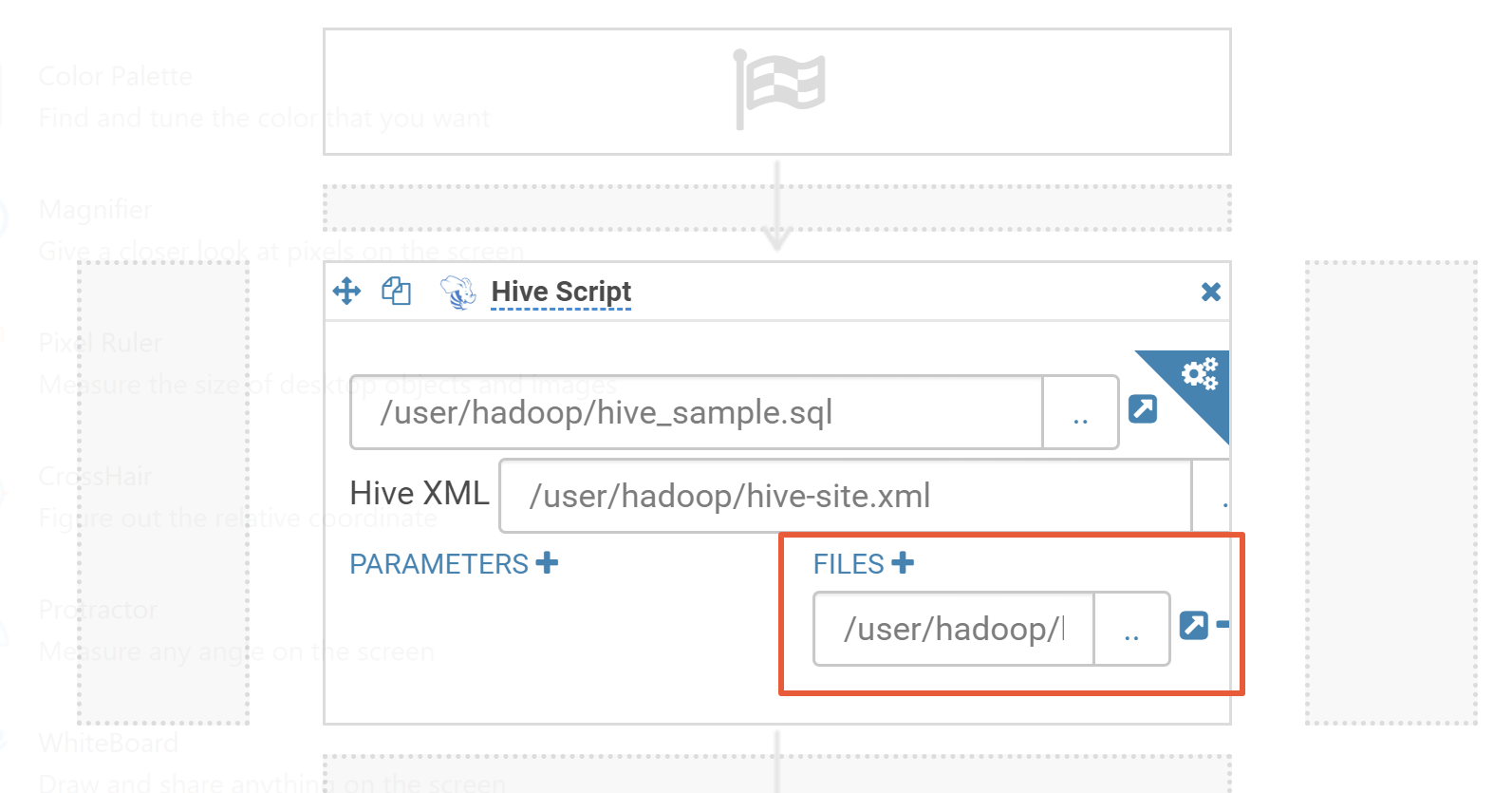

Save the above content as a file named hive_sample.sql. The Hive workflow also requires a hive-site.xml configuration file, which can be found on the cluster node where the Hive component is installed. The specific path is /usr/local/service/hive/conf/hive-site.xml. Copy the hive-site.xml file and then upload the Hive script file and hive-site.xml to a directory in HDFS, such as /user/hadoop.

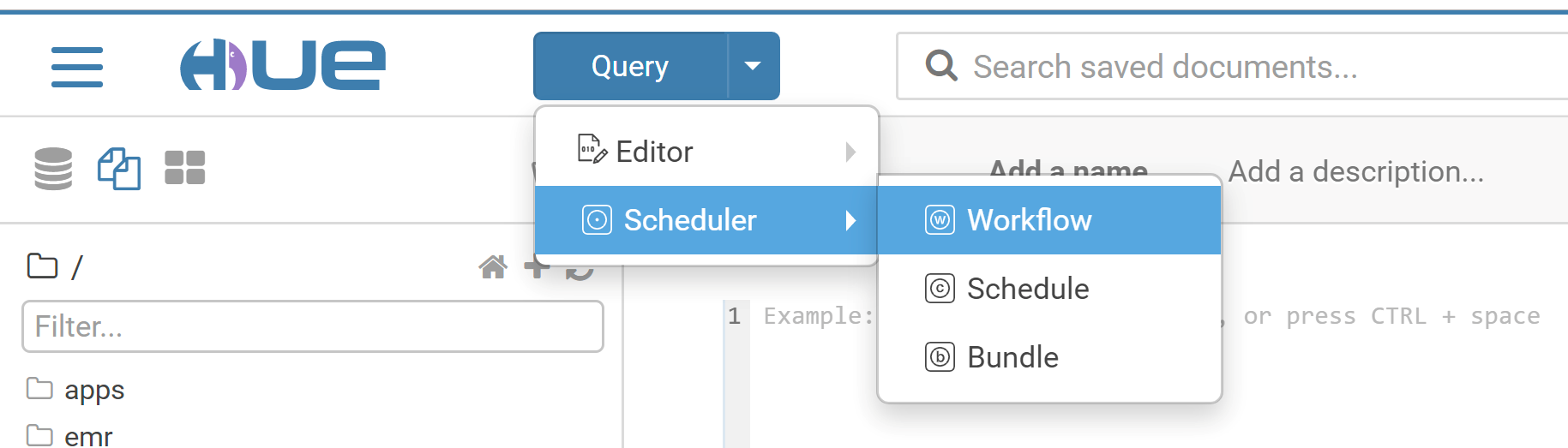
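The preparation step above can also be done from a shell on the Hive node instead of through the Hue file browser. The sketch below is one possible approach under stated assumptions: it writes the script to `hive_sample.sql` and builds standard `hdfs dfs -mkdir -p` and `hdfs dfs -put -f` commands. The helper names (`write_script`, `upload_commands`, `run_upload`) are hypothetical; the paths are the ones given in this document. By default it only prints the commands, because running them requires an HDFS client on the node.

```python
import subprocess
from pathlib import Path
from typing import List

# The Hive script from this document, one statement per line.
HIVE_SCRIPT = """\
create database if not exists hive_sample;
show databases;
use hive_sample;
show tables;
create table if not exists hive_sample (a int, b string);
show tables;
insert into hive_sample select 1, "a";
select * from hive_sample;
"""

HIVE_SITE = "/usr/local/service/hive/conf/hive-site.xml"  # path given in this document
HDFS_DIR = "/user/hadoop"  # example HDFS directory from this document


def write_script(directory: str = ".") -> Path:
    """Save the Hive script as hive_sample.sql and return its path."""
    path = Path(directory) / "hive_sample.sql"
    path.write_text(HIVE_SCRIPT)
    return path


def upload_commands(script: Path, hdfs_dir: str = HDFS_DIR) -> List[List[str]]:
    """Build the commands that create the directory and upload both files."""
    return [
        ["hdfs", "dfs", "-mkdir", "-p", hdfs_dir],
        # -f overwrites files that already exist in the target directory.
        ["hdfs", "dfs", "-put", "-f", str(script), HIVE_SITE, hdfs_dir],
    ]


def run_upload(script: Path, dry_run: bool = True) -> None:
    """Print the commands, or execute them when dry_run is False."""
    for cmd in upload_commands(script):
        if dry_run:
            print(" ".join(cmd))
        else:
            subprocess.run(cmd, check=True)
```

Calling `run_upload(write_script())` prints the two commands for review; passing `dry_run=False` on a node with the HDFS client would execute them.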

- Create a workflow.

- Switch to the

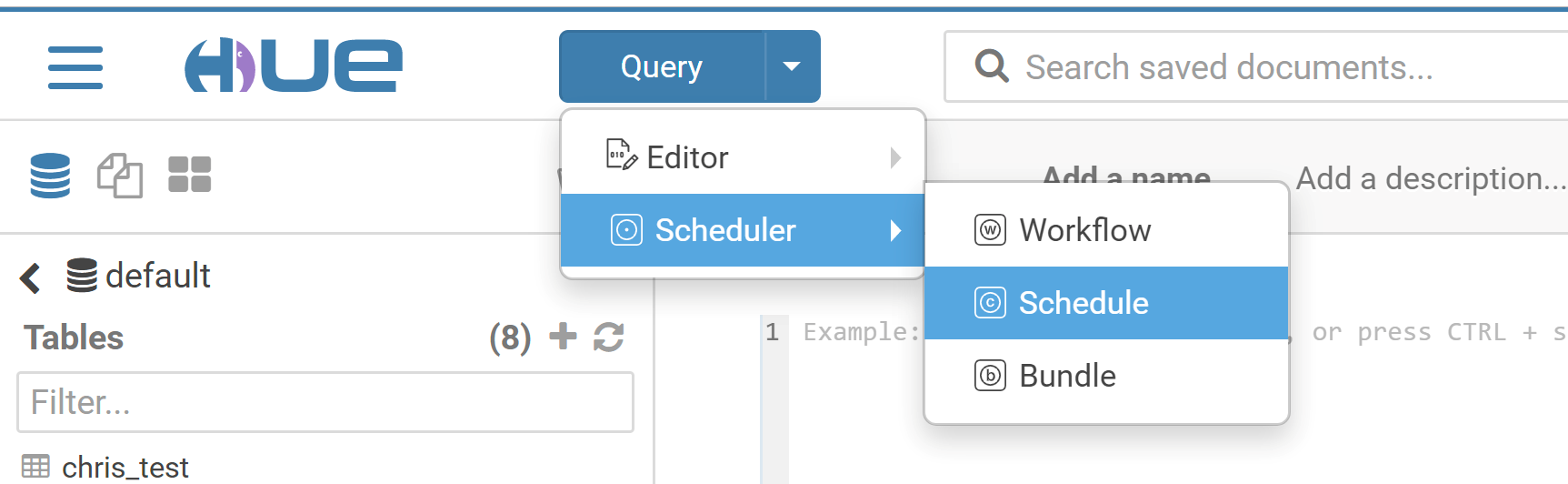

hadoopuser. At the top of the Hue console, select Query > Scheduler > Workflow.

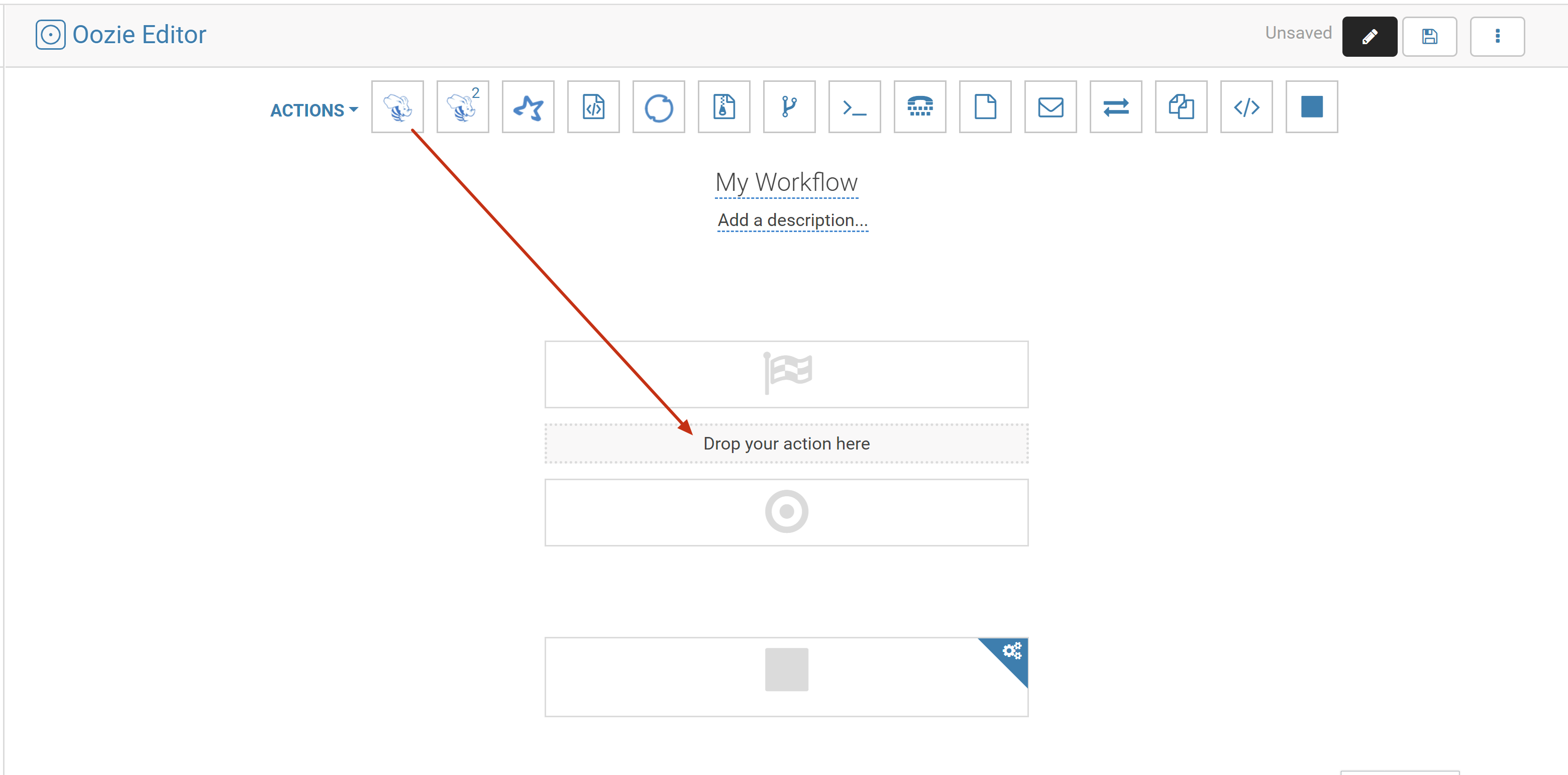

- Drag a Hive script into the workflow editing page.

Note:

This document uses the installation of Hive v1 as an example, and the configuration parameter is

HiveServer1. If it is deployed with other Hive versions (i.e., configuring configuration parameters of other versions), an error will be reported.

- Switch to the

3. Select the Hive script and hive-site.xml files you just uploaded.

4. Click Add and specify the Hive script file in FILES.

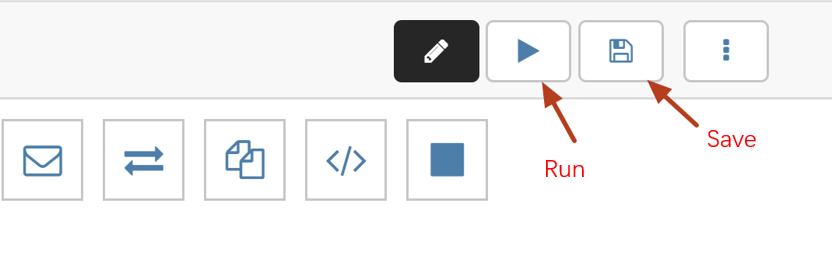

5. Click Save in the top-right corner and then click Run to run the workflow.

Create a scheduled job.

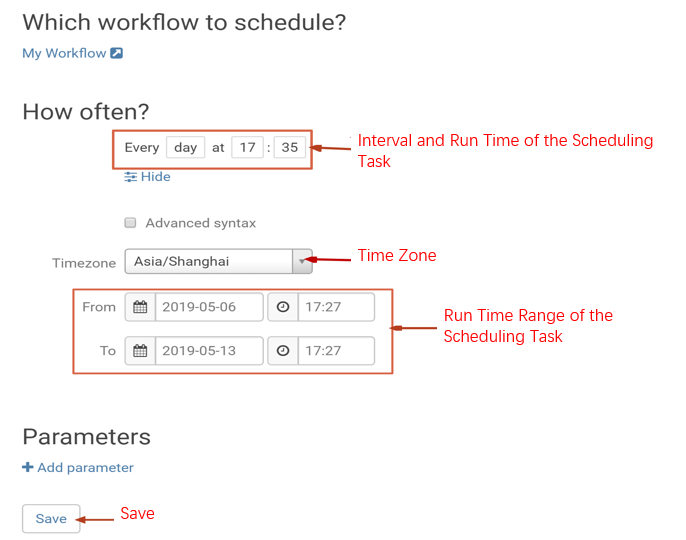

The scheduled job in Hive is "schedule", which is similar to the crontab in Linux. The supported scheduling granularity can be down to the minute level.- Select Query > Scheduler > Schedule to create a schedule.

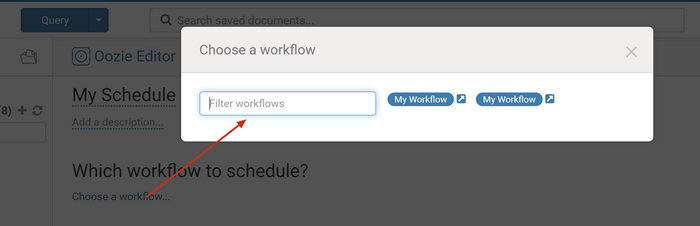

- Click Choose a workflow to select a created workflow.

- Select the execution time, frequency, time zone, start time, and end time of the schedule and click Save.

- Select Query > Scheduler > Schedule to create a schedule.

Create a scheduled job.

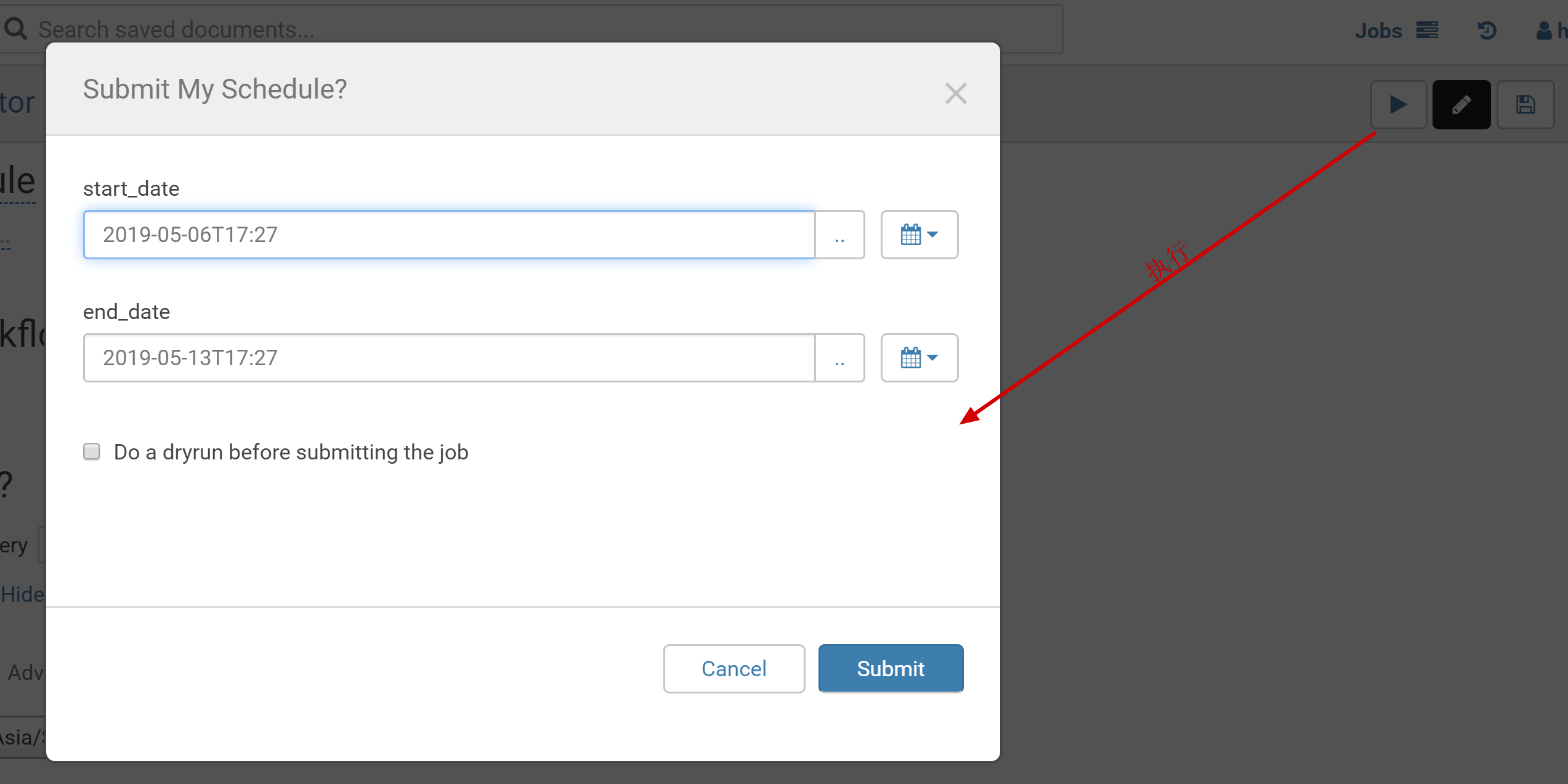

- Click Submit in the top-right corner to submit the schedule.

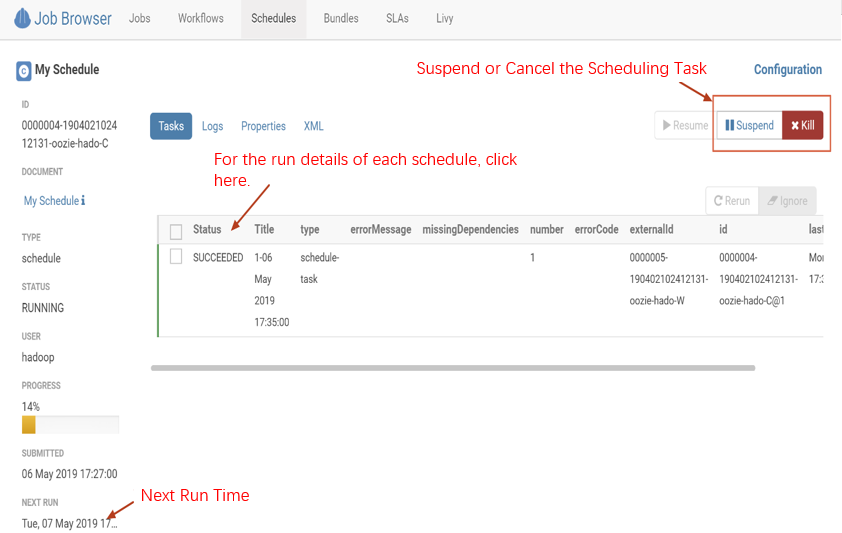

- You can view the scheduling status on the monitoring page of the schedulers.

- Click Submit in the top-right corner to submit the schedule.

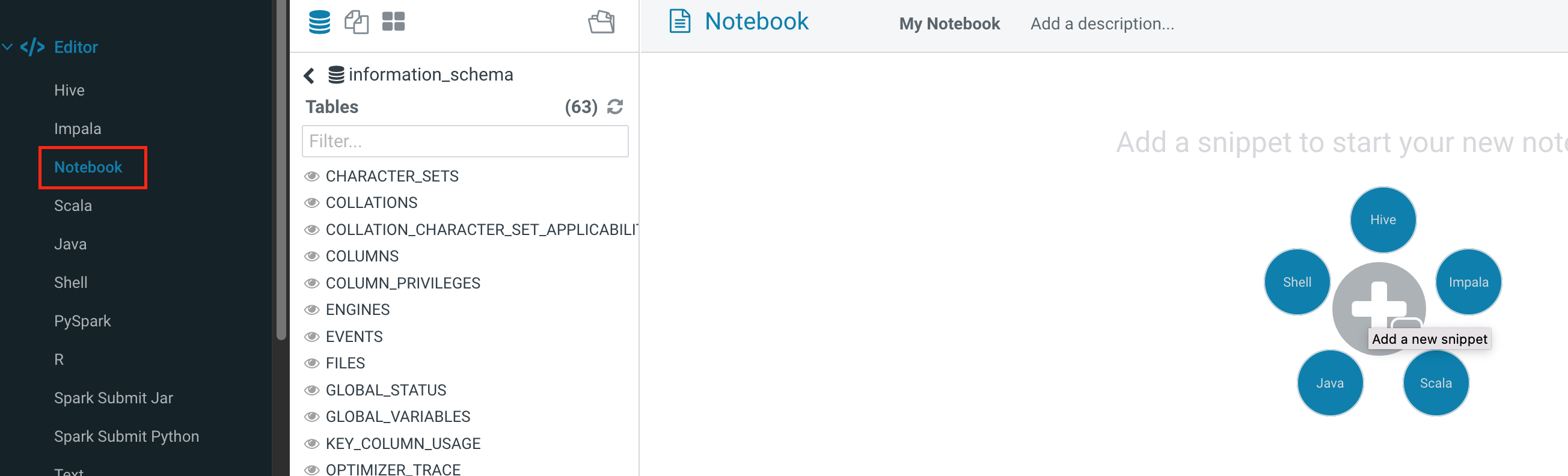

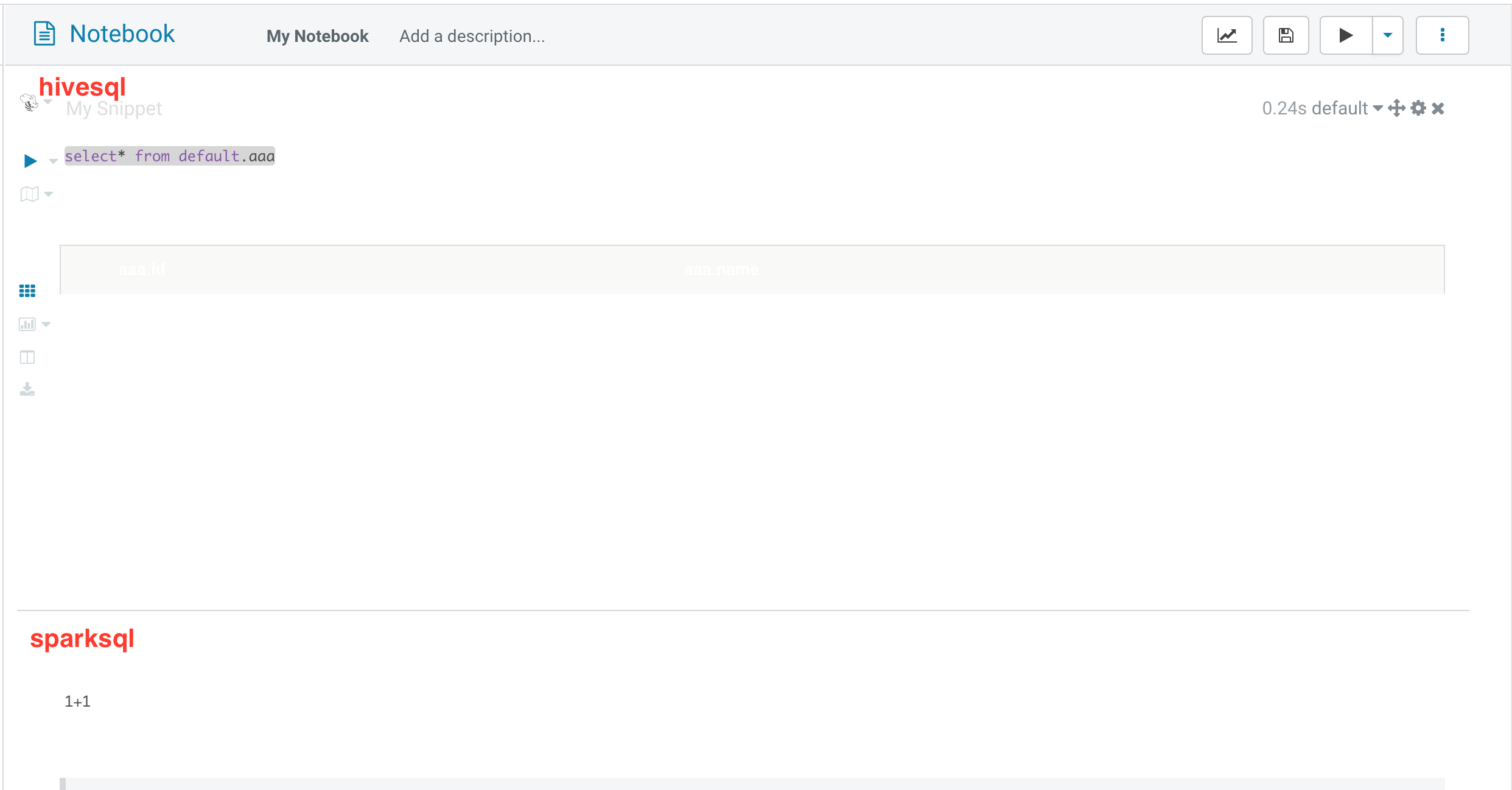
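To make the crontab comparison concrete, the sketch below evaluates a Linux-style five-field expression (minute, hour, day of month, month, day of week) at minute granularity. It illustrates the analogy only and is not Hue's scheduler: the function names are hypothetical, the exact frequency syntax Hue accepts is not specified in this document, and the matcher is simplified (it supports `*`, `*/n`, single numbers, and comma lists, treats 0 as Sunday, and requires all five fields to match).

```python
from datetime import datetime, timedelta
from typing import List


def field_matches(spec: str, value: int) -> bool:
    """Match one field: '*', '*/n', a number, or a comma list of those."""
    for part in spec.split(","):
        if part == "*":
            return True
        if part.startswith("*/"):
            if value % int(part[2:]) == 0:
                return True
        elif int(part) == value:
            return True
    return False


def cron_matches(expr: str, when: datetime) -> bool:
    """Return True if `when` satisfies all five fields of `expr`."""
    minute, hour, dom, month, dow = expr.split()
    return (
        field_matches(minute, when.minute)
        and field_matches(hour, when.hour)
        and field_matches(dom, when.day)
        and field_matches(month, when.month)
        and field_matches(dow, when.isoweekday() % 7)  # 0 = Sunday
    )


def next_runs(expr: str, start: datetime, count: int,
              search_minutes: int = 366 * 24 * 60) -> List[datetime]:
    """Scan minute by minute from `start` and collect matching times."""
    runs: List[datetime] = []
    t = start.replace(second=0, microsecond=0)
    for _ in range(search_minutes):  # bounded so a bad expression cannot loop forever
        if len(runs) >= count:
            break
        if cron_matches(expr, t):
            runs.append(t)
        t += timedelta(minutes=1)
    return runs
```

For instance, `next_runs("*/15 * * * *", datetime(2024, 1, 1, 10, 7), 3)` yields 10:15, 10:30, and 10:45 on that day, which shows the minute-level granularity the text refers to.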

Notebook Query and Comparative Analysis

Notebooks let you quickly build access requests and queries and put the query results together for comparative analysis. They support five types: Hive, Impala, Spark, Java, and Shell.

- Click Editor, Notebook, and + to add the required query.

- Click Save to save the added notebook and click Run to run the entire notebook.
