Android

Last updated: 2022-04-26 15:01:48

Application Scenarios

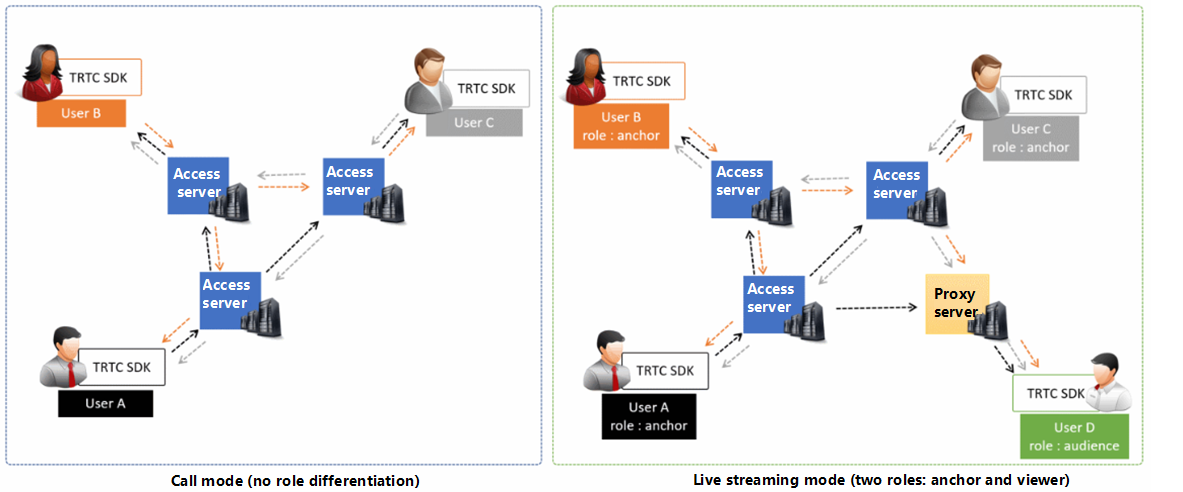

TRTC supports four room entry modes. Video call (VideoCall) and audio call (AudioCall) are the call modes, and interactive video streaming (Live) and interactive audio streaming (VoiceChatRoom) are the live streaming modes.

The call modes allow a maximum of 300 users in each TRTC room, and up to 50 of them can speak at the same time. The call modes are suitable for scenarios such as one-to-one video calls, video conferencing with up to 300 participants, online medical consultation, remote interviews, video customer service, and online Werewolf games.
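To make the split between modes and the call-mode limits concrete, the enum below is a hypothetical, SDK-independent sketch. `RoomEntryMode`, `canJoin`, and `canStartSending` are illustrative names, not TRTC APIs, and the limits are copied from the figures above. The TRTC backend remains the authority on whether a user can actually enter a room or send data; a client-side pre-check like this only lets an app fail early with a clearer message.

```java
// Hypothetical model of the four room entry modes and the call-mode limits
// stated above (300 users per room, 50 concurrent senders). Not a TRTC API.
enum RoomEntryMode {
    VIDEO_CALL, AUDIO_CALL, LIVE, VOICE_CHAT_ROOM;

    static final int CALL_MODE_MAX_USERS = 300;
    static final int CALL_MODE_MAX_SENDERS = 50;

    // VideoCall and AudioCall are the call modes; the other two are live streaming modes.
    boolean isCallMode() {
        return this == VIDEO_CALL || this == AUDIO_CALL;
    }

    // Pre-check before one more user joins a room that already has `currentUsers`.
    // Live streaming modes are outside this sketch and always pass.
    boolean canJoin(int currentUsers) {
        return !isCallMode() || currentUsers < CALL_MODE_MAX_USERS;
    }

    // Pre-check before one more user starts sending while `currentSenders` already are.
    boolean canStartSending(int currentSenders) {
        return !isCallMode() || currentSenders < CALL_MODE_MAX_SENDERS;
    }
}
```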

How It Works

TRTC services use two types of server nodes: access servers and proxy servers.

- Access server

These nodes use high-quality lines and high-performance servers. They are better suited to low-latency end-to-end calls, but their unit cost is relatively high.

- Proxy server

These nodes use lower-quality lines and average-performance servers. They are better suited to high-concurrency stream pulling and playback, and their unit cost is relatively low.

In the call modes, all users in a TRTC room are assigned to access servers and are in the role of “anchor”. This means the users can speak to each other at any point during the call (up to 50 users can send data at the same time). This makes the call modes suitable for use cases such as online conferencing, but the number of users in each room is capped at 300.

Sample Code

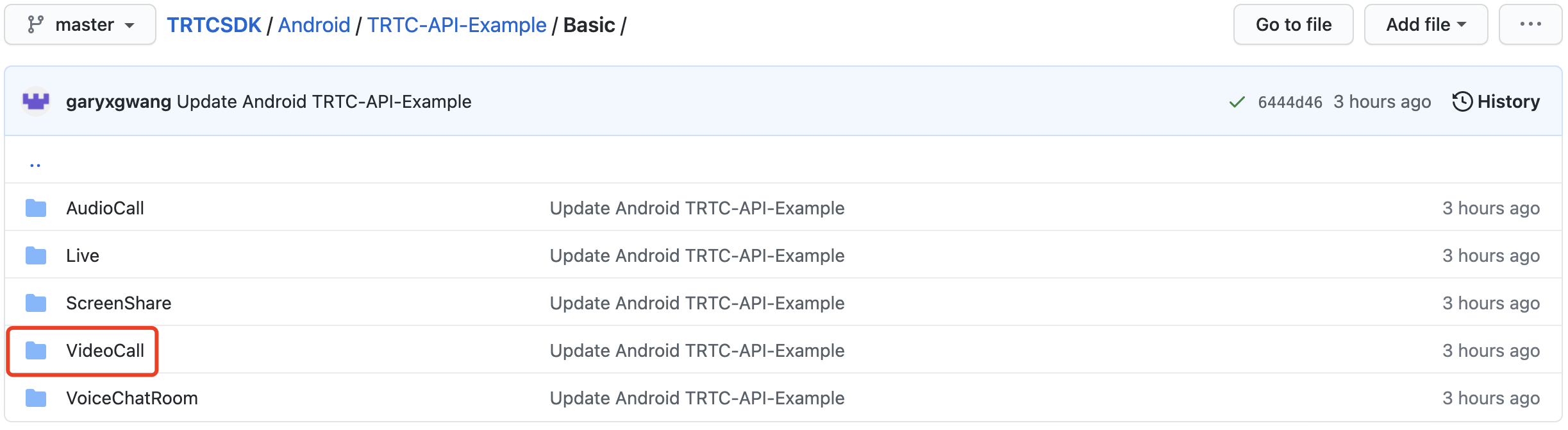

You can visit GitHub to obtain the sample code used in this document.

Note: If your access to GitHub is slow, download the ZIP file here.

Directions

Step 1. Integrate the SDK

You can integrate the TRTC SDK into your project in the following ways:

Method 1: automatic loading (AAR)

The TRTC SDK has been released to the mavenCentral repository, and you can configure Gradle to download updates automatically.

For reference, TRTC-API-Example provides sample code that integrates the TRTC SDK. Use Android Studio to open your project and follow the steps below to modify the app/build.gradle file.

Add the TRTC SDK dependency to `dependencies`:

```groovy
dependencies {
    // `implementation` replaces the `compile` configuration, which was removed in Gradle 7
    implementation 'com.tencent.liteav:LiteAVSDK_TRTC:latest.release'
}
```

In `defaultConfig`, specify the CPU architectures to be used by your application.

Note: Currently, the TRTC SDK supports armeabi-v7a and arm64-v8a.

```groovy
// Inside the android { } block
defaultConfig {
    ndk {
        abiFilters "armeabi-v7a", "arm64-v8a"
    }
}
```

Click Sync Now to sync the SDK.

If you have no problem connecting to mavenCentral, the SDK will be downloaded and integrated into your project automatically.

Method 2: manual integration

You can directly download the ZIP package and integrate the SDK into your project as instructed in Quick Integration (Android).

Step 2. Configure app permissions

Add camera, microphone, and network permission requests to AndroidManifest.xml.

```xml
<uses-permission android:name="android.permission.ACCESS_NETWORK_STATE" />
<uses-permission android:name="android.permission.INTERNET" />
<uses-permission android:name="android.permission.ACCESS_WIFI_STATE" />
<uses-permission android:name="android.permission.WRITE_EXTERNAL_STORAGE" />
<uses-permission android:name="android.permission.READ_EXTERNAL_STORAGE" />
<uses-permission android:name="android.permission.RECORD_AUDIO" />
<uses-permission android:name="android.permission.CAMERA" />
<uses-permission android:name="android.permission.READ_PHONE_STATE" />
<uses-permission android:name="android.permission.MODIFY_AUDIO_SETTINGS" />
<uses-permission android:name="android.permission.BLUETOOTH" />
<uses-feature android:name="android.hardware.camera" />
<uses-feature android:name="android.hardware.camera.autofocus" />
```

Step 3. Initialize an SDK instance and configure event callbacks

Call the `sharedInstance()` API to create a `TRTCCloud` instance:

```java
import com.tencent.trtc.TRTCCloud;
import com.tencent.trtc.TRTCCloudListener;

// Create a `TRTCCloud` instance
mTRTCCloud = TRTCCloud.sharedInstance(getApplicationContext());
mTRTCCloud.setListener(new TRTCCloudListener() {
    // Processing callbacks ...
});
```

Call `setListener` to subscribe to event callbacks and listen for event and error notifications:

```java
import android.os.Bundle;
import android.util.Log;
import android.widget.Toast;
import com.tencent.liteav.TXLiteAVCode;

// Inside the TRTCCloudListener passed to setListener().
// Error notifications indicate that the SDK has stopped working and therefore must be listened for.
@Override
public void onError(int errCode, String errMsg, Bundle extraInfo) {
    Log.d(TAG, "sdk callback onError");
    // `activity`: the hosting activity referenced by the listener in the sample app
    if (activity != null) {
        Toast.makeText(activity, "onError: " + errMsg + "[" + errCode + "]", Toast.LENGTH_SHORT).show();
        if (errCode == TXLiteAVCode.ERR_ROOM_ENTER_FAIL) {
            activity.exitRoom();
        }
    }
}
```

Step 4. Assemble the room entry parameter TRTCParams

When calling the enterRoom() API, you need to pass in a key parameter TRTCParams, which includes the following required fields:

| Parameter | Type | Description | Example |
|---|---|---|---|
| sdkAppId | Number | Application ID, which you can view in the TRTC console. | 1400000123 |
| userId | String | Can contain only letters (a-z and A-Z), digits (0-9), underscores, and hyphens. We recommend setting it based on your business account system. | test_user_001 |
| userSig | String | Calculated based on userId. For the calculation method, see UserSig. | eJyrVareCeYrSy1SslI... |
| roomId | Number | Numeric room ID. For a string-type room ID, use strRoomId in TRTCParams. | 29834 |

Note: In TRTC, users with the same `userId` cannot be in the same room at the same time, as this causes a conflict.
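An invalid `userId` is easy to catch before calling `enterRoom()`, so an app may want to validate it locally. The class below is a hypothetical sketch (`TrtcParamChecks` is not part of the SDK) that encodes only the character rule from the table above. It does not check any length limit, and it cannot detect the same-`userId` conflict described in the note, which only shows up at room level.

```java
import java.util.regex.Pattern;

// Hypothetical client-side check mirroring the userId rule in the table above:
// only letters (a-z, A-Z), digits (0-9), underscores, and hyphens.
final class TrtcParamChecks {
    // The trailing '-' inside the character class is a literal hyphen.
    private static final Pattern USER_ID = Pattern.compile("[A-Za-z0-9_-]+");

    private TrtcParamChecks() {}

    // Returns true only for a non-empty string made entirely of allowed characters.
    static boolean isValidUserId(String userId) {
        return userId != null && USER_ID.matcher(userId).matches();
    }
}
```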

Step 5. Create and enter a room

- Call `enterRoom()` to enter the audio/video room specified by `roomId` in the `TRTCParams` parameter. If the room does not exist, the SDK automatically creates it, using the `roomId` value as the room number.
- Set the `appScene` parameter according to your actual application scenario. An inappropriate `appScene` value may increase lag or reduce clarity.
  - For video calls, set `TRTC_APP_SCENE_VIDEOCALL`.
  - For audio calls, set `TRTC_APP_SCENE_AUDIOCALL`.
- You will receive the `onEnterRoom(result)` callback. If `result` is greater than 0, room entry succeeded, and the value of `result` is the time (in ms) room entry took. If `result` is less than 0, room entry failed, and the value is the error code for the failure.

```java
import com.tencent.trtc.TRTCCloudDef;

public void enterRoom() {
    // sdkappid, userid, and usersig are the values prepared in Step 4
    TRTCCloudDef.TRTCParams trtcParams = new TRTCCloudDef.TRTCParams();
    trtcParams.sdkAppId = sdkappid;
    trtcParams.userId = userid;
    trtcParams.roomId = 908;
    trtcParams.userSig = usersig;
    mTRTCCloud.enterRoom(trtcParams, TRTCCloudDef.TRTC_APP_SCENE_VIDEOCALL);
}

@Override
public void onEnterRoom(long result) {
    // toastTip(): a toast helper defined in the sample app
    if (result > 0) {
        toastTip("Entered room successfully; the total time used is [" + result + "] ms");
    } else {
        toastTip("Failed to enter the room; the error code is [" + result + "]");
    }
}
```

Note:

- If room entry fails, the SDK also calls back the `onError` event and returns the parameters `errCode` (error code), `errMsg` (error message), and `extraInfo` (reserved parameter).
- If you are already in a room, you must call `exitRoom` to exit it before entering another room.
- The value of `appScene` must be the same on each client. An inconsistent `appScene` may cause unexpected problems.
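The rule that an app must leave one room before entering another, combined with the fact (see Step 8) that room exit completes only on `onExitRoom()`, can be tracked with a small state machine. `RoomSession` below is a hypothetical helper, not a TRTC class: the app would call `tryBeginEnter()` right before `enterRoom()`, `tryBeginExit()` right before `exitRoom()`, and forward `onExitRoom()` from its `TRTCCloudListener`.

```java
// Hypothetical helper, not part of the TRTC SDK: tracks whether the app may
// call enterRoom() under the rules above.
final class RoomSession {
    enum State { IDLE, IN_ROOM, EXITING }

    private State state = State.IDLE;

    State state() { return state; }

    // Call right before TRTCCloud.enterRoom(). A room counts as joined from the
    // moment entry is requested, so a failed entry is still cleaned up with
    // exitRoom(), as the Step 3 error handler does. Returns false while a room
    // is joined or still being exited.
    boolean tryBeginEnter() {
        if (state != State.IDLE) return false;
        state = State.IN_ROOM;
        return true;
    }

    // Call right before TRTCCloud.exitRoom(). Exit is not instant, so the
    // session stays EXITING until onExitRoom() is forwarded.
    boolean tryBeginExit() {
        if (state != State.IN_ROOM) return false;
        state = State.EXITING;
        return true;
    }

    // Forward TRTCCloudListener.onExitRoom(reason) here; whatever the previous
    // state, the session may enter a room again afterwards.
    void onExitRoom(int reason) {
        state = State.IDLE;
    }
}
```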

Step 6. Subscribe to remote audio/video streams

The SDK supports automatic subscription and manual subscription.

Automatic subscription (default)

In automatic subscription mode, after room entry, the SDK automatically receives audio streams from other users in the room to achieve the best "instant broadcasting" effect:

- When another user in the room is upstreaming audio data, you will receive the onUserAudioAvailable() event notification, and the SDK automatically plays back that user's audio.
- You can call `muteRemoteAudio(userId, true)` to mute a specified user (`userId`), or `muteAllRemoteAudio(true)` to mute all remote users. The SDK will then stop pulling the audio data of the user(s).
- When another user in the room is upstreaming video data, you will receive the onUserVideoAvailable() event notification. However, because the SDK has not yet been told how to display the video data, it does not process the video automatically. You need to associate the remote user's video data with a display `view` by calling the `startRemoteView(userId, view)` method.
- Call setRemoteViewFillMode() to specify the display mode of a remote video:
  - Fill: aspect fill. The image may be scaled up and cropped, but there are no black bars.
  - Fit: aspect fit. The image may be scaled down so that it is displayed in its entirety, and there may be black bars.
- Call `stopRemoteView(userId)` to block the video data of a specified user (`userId`), or `stopAllRemoteView()` to block the video data of all remote users. The SDK will then stop pulling the video data of the user(s).

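```java
import com.tencent.rtmp.ui.TXCloudVideoView;
import com.tencent.trtc.TRTCCloudDef;

@Override
public void onUserVideoAvailable(String userId, boolean available) {
    // remoteViewDic: a Map<String, TXCloudVideoView> the app keeps from userId to its display view
    TXCloudVideoView remoteView = remoteViewDic.get(userId);
    if (available) {
        mTRTCCloud.startRemoteView(userId, remoteView);
        mTRTCCloud.setRemoteViewFillMode(userId, TRTCCloudDef.TRTC_VIDEO_RENDER_MODE_FIT);
    } else {
        mTRTCCloud.stopRemoteView(userId);
    }
}
```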

Note: If you do not call `startRemoteView()` to subscribe to the video stream immediately after receiving the `onUserVideoAvailable()` event callback, the SDK will stop pulling the remote video within 5 seconds.
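The difference between the Fill and Fit display modes comes down to which scale factor is applied. The SDK performs this scaling itself when you pass a render mode, so the function below is only an illustrative sketch of the geometry (`RenderModeMath` is a hypothetical name, not a TRTC API): Fit takes the smaller of the horizontal and vertical scale factors so the whole frame stays visible, while Fill takes the larger one so the view is fully covered and the overflow is cropped.

```java
// Hypothetical helper illustrating the Fill/Fit geometry; not a TRTC API.
// Returns the {width, height} a video frame is scaled to inside a view.
final class RenderModeMath {
    private RenderModeMath() {}

    static int[] scaledSize(int videoW, int videoH, int viewW, int viewH, boolean fill) {
        double scaleX = (double) viewW / videoW;
        double scaleY = (double) viewH / videoH;
        // Fill: scale until the view is covered (overflow is cropped, no black bars).
        // Fit: scale until the whole frame fits (leftover area shows black bars).
        double scale = fill ? Math.max(scaleX, scaleY) : Math.min(scaleX, scaleY);
        return new int[] {
            (int) Math.round(videoW * scale),
            (int) Math.round(videoH * scale)
        };
    }
}
```

For example, a 1280x720 frame in a 720x1280 portrait view is scaled to 720x405 under Fit (black bars above and below), and to about 2276x1280 under Fill (the sides are cropped).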

Manual subscription

You can call setDefaultStreamRecvMode() to switch the SDK to the manual subscription mode. In this mode, the SDK will not pull the data of other users in the room automatically. You have to start the process manually via APIs.

- Before room entry, call the setDefaultStreamRecvMode(false, false) API to set the SDK to manual subscription mode.

- If other users in the room are sending audio data, you will receive the onUserAudioAvailable() notification, and you need to call muteRemoteAudio(userId, false) to manually subscribe to the users’ audio. The SDK will decode and play the audio data received.

- If a remote user in the room is sending video data, you will receive the onUserVideoAvailable() notification, and you need to call startRemoteView(userId, remoteView) to manually subscribe to the user's video data. The SDK will decode and play the video data received.
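In manual subscription mode, the app's work reduces to reacting to the two availability callbacks. In the sketch below, `RemoteStreamApi` is a hypothetical interface that only mirrors the names of the three `TRTCCloud` methods used in this step, so the logic can run without the SDK. In an app, a thin adapter would forward these calls to `mTRTCCloud`, and the `view` argument would be a `TXCloudVideoView`.

```java
// Hypothetical abstraction over the TRTCCloud calls used in manual
// subscription mode; not a TRTC interface.
interface RemoteStreamApi {
    void muteRemoteAudio(String userId, boolean mute);
    void startRemoteView(String userId, Object view); // a TXCloudVideoView in practice
    void stopRemoteView(String userId);
}

// Handles availability callbacks after setDefaultStreamRecvMode(false, false).
final class ManualSubscriber {
    private final RemoteStreamApi api;

    ManualSubscriber(RemoteStreamApi api) { this.api = api; }

    // Forward TRTCCloudListener.onUserAudioAvailable(userId, available) here.
    void onUserAudioAvailable(String userId, boolean available) {
        // Unmuting is what subscribes to the audio in manual mode;
        // nothing is needed when the user stops sending.
        if (available) api.muteRemoteAudio(userId, false);
    }

    // Forward TRTCCloudListener.onUserVideoAvailable(userId, available) here,
    // together with the view chosen for this user.
    void onUserVideoAvailable(String userId, boolean available, Object view) {
        if (available) {
            api.startRemoteView(userId, view);
        } else {
            api.stopRemoteView(userId);
        }
    }
}
```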

Step 7. Publish the local stream

- Call startLocalAudio() to enable local mic capturing and to encode and send the captured audio.
- Call startLocalPreview() to enable local camera capturing and to encode and send the captured video.
- Call setLocalViewFillMode() to set the display mode of the local video:
  - Fill: aspect fill. The image may be scaled up and cropped, but there are no black bars.
  - Fit: aspect fit. The image may be scaled down so that it is displayed in its entirety, and there may be black bars.
- Call setVideoEncoderParam() to set the encoding parameters of the local video, which determine the image quality of the video watched by other users in the room.

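```java
// Sample code: publish the local audio/video stream
// mIsFrontCamera: true to use the front camera; mLocalView: the TXCloudVideoView for local preview
mTRTCCloud.setLocalViewFillMode(TRTCCloudDef.TRTC_VIDEO_RENDER_MODE_FIT);
mTRTCCloud.startLocalPreview(mIsFrontCamera, mLocalView);
mTRTCCloud.startLocalAudio();
```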

Step 8. Exit the room

Call exitRoom() to exit the room. Because the SDK disables and releases devices such as cameras and mics during room exit, room exit is not instant. It completes only after the onExitRoom() callback is received.

```java
// Please wait for the `onExitRoom` callback after calling the room exit API.
mTRTCCloud.exitRoom();

@Override
public void onExitRoom(int reason) {
    Log.i(TAG, "onExitRoom: reason = " + reason);
}
```

Note: If your application integrates multiple audio/video SDKs, wait until you receive the `onExitRoom` callback before starting other SDKs; otherwise, a device-busy error may occur.
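Blocking the thread that delivers SDK callbacks while waiting for `onExitRoom()` could deadlock, so one option is to queue the follow-up work (such as starting another audio/video SDK) and run it when the callback arrives. `ExitRoomGate`, `markExiting()`, and `runAfterExit()` are hypothetical names for this sketch, not TRTC APIs.

```java
import java.util.ArrayDeque;
import java.util.Queue;

// Hypothetical helper: defers tasks until onExitRoom() has been received,
// without blocking any thread. Not part of the TRTC SDK.
final class ExitRoomGate {
    private final Queue<Runnable> pending = new ArrayDeque<>();
    private boolean exited = true; // no room exit in progress yet

    // Call right before mTRTCCloud.exitRoom().
    synchronized void markExiting() {
        exited = false;
    }

    // Runs the task now if no exit is in progress, otherwise after onExitRoom().
    void runAfterExit(Runnable task) {
        synchronized (this) {
            if (!exited) {
                pending.add(task);
                return;
            }
        }
        task.run();
    }

    // Forward TRTCCloudListener.onExitRoom(reason) here. Queued tasks run
    // outside the lock, in the order they were added.
    void onExitRoom(int reason) {
        Queue<Runnable> ready;
        synchronized (this) {
            exited = true;
            ready = new ArrayDeque<>(pending);
            pending.clear();
        }
        for (Runnable task : ready) {
            task.run();
        }
    }
}
```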
