This document describes how to quickly deploy GooseFS on a local device, perform debugging, and use COS as remote storage.

Prerequisites

Before using GooseFS, you need to:

1. Create a bucket in COS as remote storage. For detailed directions, please see Getting Started With the Console.

2. Install Java 8 or a later version.

3. Install SSH, and make sure that you can connect to localhost over SSH and log in remotely.

4. Purchase a CVM instance as instructed in Getting Started and make sure that the disk has been mounted to the instance.
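The Java and SSH prerequisites can be checked from a shell before installing anything. The following is a minimal sketch rather than an official tool: the function names are hypothetical, and it assumes that `java -version` prints a quoted version string such as "1.8.0_292" or "11.0.2".

```shell
#!/usr/bin/env bash
# Preflight helpers for the prerequisites above (function names are illustrative).

# Print the major Java version for a version string such as "1.8.0_292" or "11.0.2".
java_major_version() {
  local version="$1"
  case "$version" in
    1.*) version="${version#1.}" ;;   # legacy scheme: 1.8.x means Java 8
  esac
  echo "${version%%[._-]*}"
}

# Return 0 if the given version string denotes Java 8 or later.
java_is_supported() {
  local major
  major="$(java_major_version "$1")"
  [ "$major" -ge 8 ] 2>/dev/null
}

# Print the version of the java binary on PATH (empty if java is missing).
installed_java_version() {
  java -version 2>&1 | sed -n 's/.*version "\([^"]*\)".*/\1/p' | head -n 1
}

# Return 0 if a non-interactive SSH login to localhost succeeds.
can_ssh_localhost() {
  ssh -o BatchMode=yes -o ConnectTimeout=5 localhost true 2>/dev/null
}
```

A typical call would be `java_is_supported "$(installed_java_version)" && can_ssh_localhost && echo "ready"`. The SSH check uses `BatchMode=yes` so that it fails instead of prompting for a password.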

Downloading and Configuring GooseFS

1. Create and enter a local directory (you can also choose another directory as needed), and then download goosefs-1.4.2-bin.tar.gz.

    $ cd /usr/local
    $ mkdir service
    $ cd service
    $ wget https://downloads.tencentgoosefs.cn/goosefs/1.4.2/release/goosefs-1.4.2-bin.tar.gz

2. Run the following command to decompress the installation package and enter the extracted directory:

    $ tar -zxvf goosefs-1.4.2-bin.tar.gz
    $ cd goosefs-1.4.2

After decompression, the GooseFS home directory goosefs-1.4.2 is generated. This document uses ${GOOSEFS_HOME} to denote the absolute path of this home directory.

3. Create the goosefs-site.properties configuration file in ${GOOSEFS_HOME}/conf. GooseFS provides configuration templates for AI and big data scenarios; choose the one that fits your needs, and then open the file for editing:

(1) Use the AI template. For more information, see GooseFS Configuration Practice for a Production Environment in the AI Scenario.

    $ cp conf/goosefs-site.properties.ai_template conf/goosefs-site.properties
    $ vim conf/goosefs-site.properties

(2) Use the big data template. For more information, see GooseFS Configuration Practice for a Production Environment in the Big Data Scenario.

    $ cp conf/goosefs-site.properties.bigdata_template conf/goosefs-site.properties
    $ vim conf/goosefs-site.properties

4. Modify the following configuration items in the configuration file conf/goosefs-site.properties:

    # Common properties
    # Modify the master node's host information
    goosefs.master.hostname=localhost
    goosefs.master.mount.table.root.ufs=${goosefs.work.dir}/underFSStorage

    # Security properties
    # Modify the permission configuration
    goosefs.security.authorization.permission.enabled=true
    goosefs.security.authentication.type=SIMPLE

    # Worker properties
    # Modify the worker node configuration to specify the local cache medium, cache path, and cache size
    goosefs.worker.ramdisk.size=1GB
    goosefs.worker.tieredstore.levels=1
    goosefs.worker.tieredstore.level0.alias=SSD
    goosefs.worker.tieredstore.level0.dirs.path=/data
    goosefs.worker.tieredstore.level0.dirs.quota=80G

    # User properties
    # Specify the cache policies for file reads and writes
    goosefs.user.file.readtype.default=CACHE
    goosefs.user.file.writetype.default=MUST_CACHE
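One way to keep the worker cache directory in step with the configuration is to read the path from the properties file and create it from a script. The sketch below assumes a single directory in `goosefs.worker.tieredstore.level0.dirs.path`; the helper names `get_property` and `ensure_cache_dir` are hypothetical and not part of GooseFS.

```shell
#!/usr/bin/env bash
# Helper names below are illustrative, not part of GooseFS.

# Print the value of a key from a Java-style .properties file.
# Comment lines (starting with #) are skipped; the last assignment wins.
get_property() {
  local file="$1" key="$2"
  awk -v k="$key" '
    /^[[:space:]]*#/ { next }
    {
      pos = index($0, "=")
      if (pos == 0) next
      name = substr($0, 1, pos - 1)
      gsub(/^[[:space:]]+|[[:space:]]+$/, "", name)
      if (name == k) {
        value = substr($0, pos + 1)
        gsub(/^[[:space:]]+|[[:space:]]+$/, "", value)
      }
    }
    END { print value }
  ' "$file"
}

# Create the directory named by goosefs.worker.tieredstore.level0.dirs.path.
ensure_cache_dir() {
  local conf="$1" dir
  dir="$(get_property "$conf" goosefs.worker.tieredstore.level0.dirs.path)"
  [ -n "$dir" ] || { echo "cache path is not set in $conf" >&2; return 1; }
  mkdir -p "$dir"
}
```

For the layout used in this document, the call would be `ensure_cache_dir "${GOOSEFS_HOME}/conf/goosefs-site.properties"`, run with enough privileges to create the directory.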

Note:

Before setting the path parameter goosefs.worker.tieredstore.level0.dirs.path, you need to create the path first.

Running GooseFS

1. Before starting GooseFS, you need to enter the GooseFS directory and run the startup command:

$ cd /usr/local/service/goosefs-1.4.2$ ./bin/goosefs-start.sh all

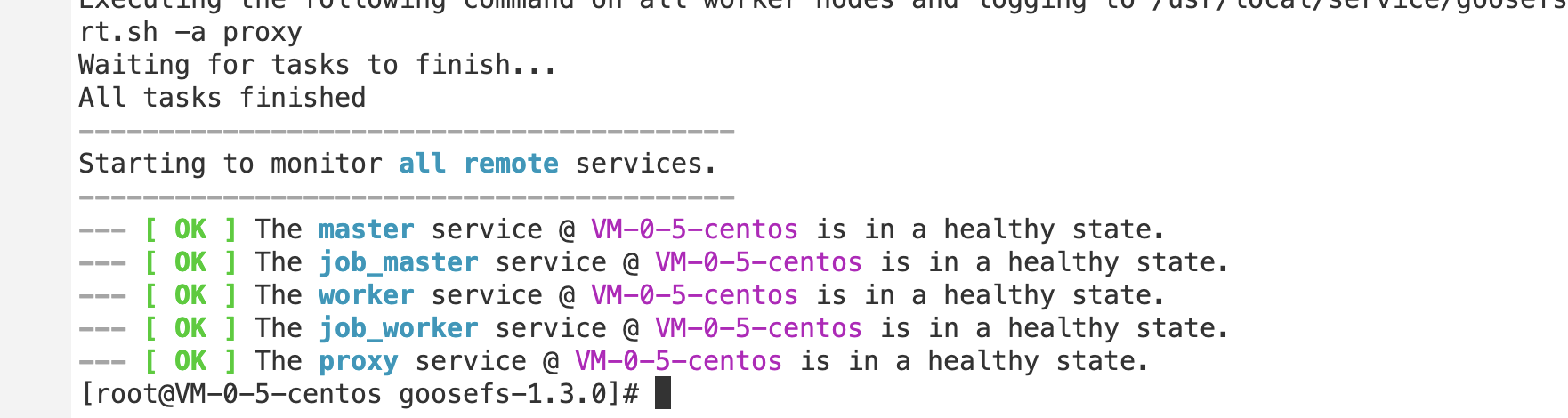

After running this command, you can see the following page:

After the command above is executed, you can access

http://localhost:9201 and http://localhost:9204 to view the running status of the master and the worker, respectively.Mounting COS or Tencent Cloud HDFS to GooseFS

To mount COS or Tencent Cloud HDFS to the root directory of GooseFS, configure the required parameters of COSN/CHDFS (including but not limited to fs.cosn.impl, fs.AbstractFileSystem.cosn.impl, fs.cosn.userinfo.secretId, and fs.cosn.userinfo.secretKey) in conf/core-site.xml, as shown below:

    <!-- COSN related configurations -->
    <property>
      <name>fs.cosn.impl</name>
      <value>org.apache.hadoop.fs.CosFileSystem</value>
    </property>
    <property>
      <name>fs.AbstractFileSystem.cosn.impl</name>
      <value>com.qcloud.cos.goosefs.CosN</value>
    </property>
    <property>
      <name>fs.cosn.userinfo.secretId</name>
      <value></value>
    </property>
    <property>
      <name>fs.cosn.userinfo.secretKey</name>
      <value></value>
    </property>
    <property>
      <name>fs.cosn.bucket.region</name>
      <value></value>
    </property>

    <!-- CHDFS related configurations -->
    <property>
      <name>fs.AbstractFileSystem.ofs.impl</name>
      <value>com.qcloud.chdfs.fs.CHDFSDelegateFSAdapter</value>
    </property>
    <property>
      <name>fs.ofs.impl</name>
      <value>com.qcloud.chdfs.fs.CHDFSHadoopFileSystemAdapter</value>
    </property>
    <property>
      <name>fs.ofs.tmp.cache.dir</name>
      <value>/data/chdfs_tmp_cache</value>
    </property>
    <!--appId-->
    <property>
      <name>fs.ofs.user.appid</name>
      <value>1250000000</value>
    </property>
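When the COSN values come from a deployment script instead of being typed by hand, the `<property>` entries can be generated with XML escaping applied. This is only a sketch: `xml_escape`, `xml_property`, and `cosn_credentials_xml` are hypothetical helper names, and the output still has to be placed inside the `<configuration>` element of conf/core-site.xml.

```shell
#!/usr/bin/env bash
# Helper names below are illustrative.

# Escape the characters that are special in XML text content.
xml_escape() {
  printf '%s' "$1" | sed -e 's/&/\&amp;/g' -e 's/</\&lt;/g' -e 's/>/\&gt;/g'
}

# Emit one Hadoop-style <property> element on a single line.
xml_property() {
  local name="$1" value
  value="$(xml_escape "$2")"
  printf '<property><name>%s</name><value>%s</value></property>\n' "$name" "$value"
}

# Emit the three account-specific COSN properties.
# Arguments: secret ID, secret key, bucket region.
cosn_credentials_xml() {
  xml_property fs.cosn.userinfo.secretId "$1"
  xml_property fs.cosn.userinfo.secretKey "$2"
  xml_property fs.cosn.bucket.region "$3"
}
```

Passing the credentials as arguments keeps them out of the script itself; in practice they would come from a secret store or environment variables rather than from literals.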

Note:

For the complete configuration of COSN, please see Hadoop.

For the complete configuration of CHDFS, see Mounting CHDFS Instance.

The following describes how to create a namespace to mount COS or CHDFS.

1. Create a namespace and mount COS:

    $ goosefs ns create myNamespace cosn://bucketName-1250000000/ \
        --secret fs.cosn.userinfo.secretId=AKXXXXXXXXXXX \
        --secret fs.cosn.userinfo.secretKey=XXXXXXXXXXXX \
        --attribute fs.cosn.bucket.region=ap-xxx

Note:

When creating the namespace that mounts COSN, you must use the --secret parameter to specify the key, and use --attribute to specify all required parameters of Hadoop-COS (COSN). For the required parameters, please see Hadoop.

When you create the namespace, if no read/write policy (rPolicy/wPolicy) is specified, the read/write type set in the configuration file or, failing that, the default value (CACHE/CACHE_THROUGH) is used.

Likewise, create a namespace to mount Tencent Cloud HDFS:

    $ goosefs ns create myNamespaceCHDFS ofs://xxxxx-xxxx.chdfs.ap-guangzhou.myqcloud.com/ \
        --attribute fs.ofs.user.appid=1250000000 \
        --attribute fs.ofs.tmp.cache.dir=/tmp/chdfs
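Because the COS create command takes several long options, it can help to assemble it in a script and review it before running it. The following sketch only prints the command and does not call GooseFS; the function name `build_cos_ns_create` and its argument order are assumptions made for illustration, while the `--secret` and `--attribute` options are the ones shown above.

```shell
#!/usr/bin/env bash
# Print (not run) a `goosefs ns create` command for a COS bucket.
# Function name and argument order are illustrative.
# Arguments: namespace, bucket (name-appid), secret ID, secret key, region.
build_cos_ns_create() {
  local namespace="$1" bucket="$2" secret_id="$3" secret_key="$4" region="$5"
  printf 'goosefs ns create %s cosn://%s/' "$namespace" "$bucket"
  printf ' --secret fs.cosn.userinfo.secretId=%s' "$secret_id"
  printf ' --secret fs.cosn.userinfo.secretKey=%s' "$secret_key"
  printf ' --attribute fs.cosn.bucket.region=%s\n' "$region"
}
```

For example, `build_cos_ns_create myNamespace bucketName-1250000000 "$ID" "$KEY" ap-xxx` prints a one-line command equivalent to the multi-line form above. The values are not shell-quoted, so the sketch assumes they contain no spaces or shell metacharacters.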

2. After the namespaces are created, run the ls command to list all namespaces created in the cluster:

$ goosefs ns ls
namespace mountPoint ufsPath creationTime wPolicy rPolicy TTL ttlAction
myNamespace /myNamespace cosn://bucketName-125xxxxxx/3TB 03-11-2021 11:43:06:239 CACHE_THROUGH CACHE -1 DELETE
myNamespaceCHDFS /myNamespaceCHDFS ofs://xxxxx-xxxx.chdfs.ap-guangzhou.myqcloud.com/3TB 03-11-2021 11:45:12:336 CACHE_THROUGH CACHE -1 DELETE

3. Run the following command to view detailed information about a namespace:

$ goosefs ns stat myNamespace
NamespaceStatus{name=myNamespace, path=/myNamespace, ttlTime=-1, ttlAction=DELETE, ufsPath=cosn://bucketName-125xxxxxx/3TB, creationTimeMs=1615434186076, lastModificationTimeMs=1615436308143, lastAccessTimeMs=1615436308143, persistenceState=PERSISTED, mountPoint=true, mountId=4948824396519771065, acl=user::rwx,group::rwx,other::rwx, defaultAcl=, owner=user1, group=user1, mode=511, writePolicy=CACHE_THROUGH, readPolicy=CACHE}

Information recorded in the metadata is as follows:

No. | Parameter | Description |

1 | name | Name of the namespace |

2 | path | Path of the namespace in GooseFS |

3 | ttlTime | TTL period of files and directories in the namespace |

4 | ttlAction | TTL action to handle files and directories in the namespace. Valid values: FREE (default), DELETE |

5 | ufsPath | Mount path of the namespace in the UFS |

6 | creationTimeMs | Time when the namespace is created, in milliseconds |

7 | lastModificationTimeMs | Time when files or directories in the namespace were last modified, in milliseconds |

8 | persistenceState | Persistence state of the namespace |

9 | mountPoint | Whether the namespace is a mount point. The value is fixed to true. |

10 | mountId | Mount point ID of the namespace |

11 | acl | ACL of the namespace |

12 | defaultAcl | Default ACL of the namespace |

13 | owner | Owner of the namespace |

14 | group | Group where the namespace owner belongs |

15 | mode | POSIX permission of the namespace |

16 | writePolicy | Write policy for the namespace |

17 | readPolicy | Read policy for the namespace |
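Two of the fields in the sample output are easier to read after a small conversion. The sketch below assumes that mode is printed in decimal (511 is 777 in octal, which matches the rwx ACL shown for user, group, and other) and that the *Ms fields are Unix timestamps in milliseconds. The helper names mode_octal and ms_to_seconds are hypothetical.

```shell
# Values copied from the NamespaceStatus sample output.
mode=511
creation_ms=1615434186076

# Convert the decimal mode to the familiar octal permission form.
mode_octal() { printf '%o\n' "$1"; }

# Drop the millisecond part to get a Unix timestamp in seconds.
ms_to_seconds() { echo $(( $1 / 1000 )); }

mode_octal "$mode"            # prints 777
ms_to_seconds "$creation_ms"  # prints 1615434186

# With GNU date, the seconds value can be rendered as a UTC timestamp:
# date -u -d "@$(ms_to_seconds "$creation_ms")" '+%Y-%m-%d %H:%M:%S'
```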

Loading Table Data to GooseFS

1. You can load Hive table data to GooseFS. Before the loading, attach the database to GooseFS using the following command:

$ goosefs table attachdb --db test_db hive thrift://172.16.16.22:7004 test_for_demo

Note:

Replace the thrift address in the command with the actual Hive Metastore address.

2. After the database is attached, run the ls command to view information about the attached database and table:

$ goosefs table ls test_db web_page
OWNER: hadoop
DBNAME.TABLENAME: testdb.web_page (
wp_web_page_sk bigint,
wp_web_page_id string,
wp_rec_start_date string,
wp_rec_end_date string,
wp_creation_date_sk bigint,
wp_access_date_sk bigint,
wp_autogen_flag string,
wp_customer_sk bigint,
wp_url string,
wp_type string,
wp_char_count int,
wp_link_count int,
wp_image_count int,
wp_max_ad_count int,
)
PARTITIONED BY (
)
LOCATION (
gfs://172.16.16.22:9200/myNamespace/3000/web_page
)
PARTITION LIST (
{
partitionName: web_page
location: gfs://172.16.16.22:9200/myNamespace/3000/web_page
}
)

3. Run the load command to load table data:

$ goosefs table load test_db web_page
Asynchronous job submitted successfully, jobId: 1615966078836
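Because the load command only returns a job ID, a script that needs the data loaded before continuing has to wait for the job itself. The following is a minimal sketch of one way to do that: it extracts the ID from the message shown above and polls goosefs job stat until the output contains COMPLETED. The exact output format of job stat is an assumption here, and GOOSEFS_CMD, max_tries, and delay are illustrative names rather than GooseFS settings.

```shell
# Pull the numeric job ID out of the message printed by `goosefs table load`.
extract_job_id() {
  sed -n 's/.*jobId: *\([0-9][0-9]*\).*/\1/p'
}

# Poll `goosefs job stat <id>` until its output contains COMPLETED.
# Assumption: the status word appears verbatim somewhere in the output.
wait_for_job() {
  job_id="$1"; max_tries="${2:-60}"; delay="${3:-5}"
  tries=0
  while [ "$tries" -lt "$max_tries" ]; do
    if "${GOOSEFS_CMD:-goosefs}" job stat "$job_id" | grep -q 'COMPLETED'; then
      return 0
    fi
    tries=$((tries + 1))
    sleep "$delay"
  done
  return 1   # gave up without seeing COMPLETED
}

# Hypothetical usage:
# job_id="$(goosefs table load test_db web_page | extract_job_id)"
# wait_for_job "$job_id" && echo "load finished"
```

The loop does not distinguish a failed job from one that is still running; it simply stops after max_tries attempts, so a real script would likely also check for failure states.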

The loading of table data is asynchronous, so a job ID is returned. You can run the goosefs job stat <Job Id> command to view the loading progress. When the status becomes "COMPLETED", the loading has succeeded.

Using GooseFS for Uploads/Downloads

1. GooseFS supports most file system-related commands. You can run the following command to view the supported commands:

$ goosefs fs

2. Run the ls command to list files in GooseFS. The following example lists all files in the root directory:

$ goosefs fs ls /

3. Run the copyFromLocal command to copy a local file to GooseFS:

$ goosefs fs copyFromLocal LICENSE /LICENSE
Copied LICENSE to /LICENSE
$ goosefs fs ls /LICENSE
-rw-r--r-- hadoop supergroup 20798 NOT_PERSISTED 03-26-2021 16:49:37:215 0% /LICENSE

4. Run the cat command to view the file content:

$ goosefs fs cat /LICENSE
Apache License
Version 2.0, January 2004
http://www.apache.org/licenses/
TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION
...

5. By default, GooseFS uses the local disk as the underlying file system. The default file system path is ./underFSStorage. You can run the persist command to persist a file to the local file system as follows:

$ goosefs fs persist /LICENSE
persisted file /LICENSE with size 26847
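The ls output in step 3 carries the persistence state in its fifth column, which makes it possible to check the state from a script before deciding whether to run persist. The following is a minimal sketch: the helper name persistence_state is hypothetical, and it assumes the column layout shown in the sample line, with no spaces in the owner or group names.

```shell
# Print the persistence state (fifth column) from one line of `goosefs fs ls` output.
persistence_state() { awk '{ print $5 }'; }

# Sample line copied from the copyFromLocal step.
line='-rw-r--r-- hadoop supergroup 20798 NOT_PERSISTED 03-26-2021 16:49:37:215 0% /LICENSE'
printf '%s\n' "$line" | persistence_state   # prints NOT_PERSISTED

# Hypothetical usage against a running cluster:
# if [ "$(goosefs fs ls /LICENSE | persistence_state)" = "NOT_PERSISTED" ]; then
#   goosefs fs persist /LICENSE
# fi
```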

Using GooseFS to Accelerate Uploads/Downloads

1. Check the file status to determine whether a file is cached. A status of PERSISTED indicates that the file is in memory, and NOT_PERSISTED indicates that it is not.

$ goosefs fs ls /data/cos/sample_tweets_150m.csv
-r-x------ staff staff 157046046 NOT_PERSISTED 01-09-2018 16:35:01:002 0% /data/cos/sample_tweets_150m.csv

2. Count how many times “tencent” appears in the file and measure the time consumed:

$ time goosefs fs cat /data/cos/sample_tweets_150m.csv | grep -c tencent
889
real 0m22.857s
user 0m7.557s
sys 0m1.181s

3. Caching data in memory can effectively speed up queries. Running the same commands again once the file is cached gives the following:

$ goosefs fs ls /data/cos/sample_tweets_150m.csv
-r-x------ staff staff 157046046 PERSISTED 01-09-2018 16:35:01:002 0% /data/cos/sample_tweets_150m.csv
$ time goosefs fs cat /data/cos/sample_tweets_150m.csv | grep -c tencent
889
real 0m1.917s
user 0m2.306s
sys 0m0.243s

The data above shows that the elapsed (real) time drops from 22.857s to 1.917s, roughly a 12-fold improvement, while the system (sys) time drops from 1.181s to 0.243s.
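The timing figures from the two runs can be compared directly. The following small sketch uses awk to compute how many times smaller each value reported by time became in the cached run; the helper name ratio is hypothetical, and the numbers are copied from the output above.

```shell
# ratio BEFORE AFTER: how many times smaller AFTER is than BEFORE, to one decimal place.
ratio() {
  awk -v before="$1" -v after="$2" 'BEGIN { printf "%.1f\n", before / after }'
}

ratio 22.857 1.917   # real: prints 11.9
ratio 7.557 2.306    # user: prints 3.3
ratio 1.181 0.243    # sys:  prints 4.9
```

The real value is the one that reflects what a user waits for, since it measures wall-clock time for the whole pipeline.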

Shutting Down GooseFS

Run the following command to shut down GooseFS:

$ ./bin/goosefs-stop.sh local
